Calculate surface normals from depth image using neighboring pixels cross product

As the title says I want to calculate the surface normals of a given depth image by using the cross product of neighboring pixels. However, I do not really understand the procedure. Does anyone have any experience?

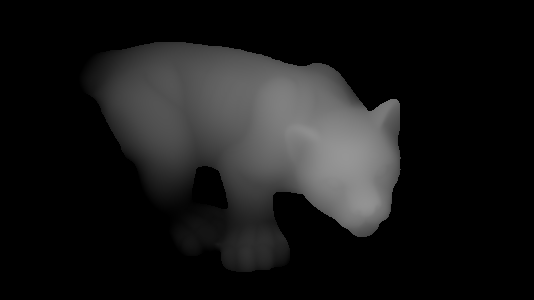

Lets say that we have the following image:

what are the steps to follow?

Update:

I am trying to translate the following pseudocode from this answer to opencv.

dzdx=(z(x+1,y)-z(x-1,y))/2.0;

dzdy=(z(x,y+1)-z(x,y-1))/2.0;

direction=(-dxdz,-dydz,1.0)

magnitude=sqrt(direction.x**2 + direction.y**2 + direction.z**2)

normal=direction/magnitude

where z(x,y) is my depth image. However, the output of the following does not seem correct to me:

for(int x = 0; x < depth.rows; ++x)

{

for(int y = 0; y < depth.cols; ++y)

{

double dzdx = (depth.at<double>(x+1, y) - depth.at<double>(x-1, y)) / 2.0;

double dzdy = (depth.at<double>(x, y+1) - depth.at<double>(x, y-1)) / 2.0;

Vec3d d = (dzdx, dzdy, 1.0);

Vec3d n = normalize(d);

}

}

Update2:

Ok I think I am close:

Mat3d normals(depth.size(), CV_32FC3);

for(int x = 0; x < depth.rows; ++x)

{

for(int y = 0; y < depth.cols; ++y)

{

double dzdx = (depth.at<double>(x+1, y) - depth.at<double>(x-1, y)) / 2.0;

double dzdy = (depth.at<double>(x, y+1) - depth.at<double>(x, y-1)) / 2.0;

Vec3d d;

d[0] = -dzdx;

d[1] = -dzdy;

d[2] = 1.0;

Vec3d n = normalize(d);

normals.at<Vec3d>(x, y)[0] = n[0];

normals.at<Vec3d>(x, y)[1] = n[1];

normals.at<Vec3d>(x, y)[2] = n[2];

}

}

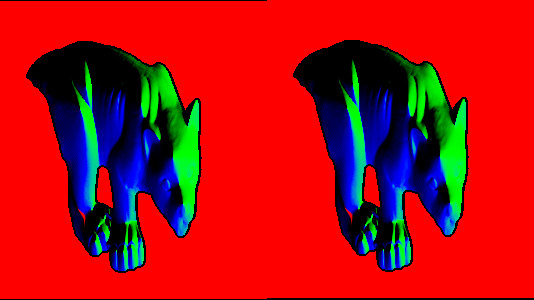

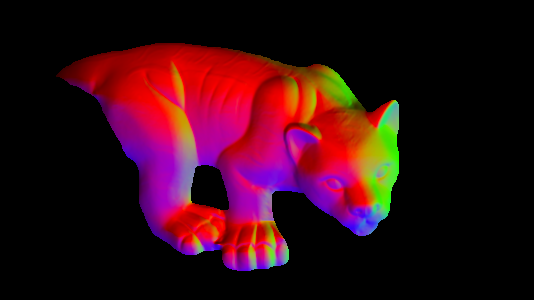

which gives me the following image:

Update 3:

following @berak's approach:

depth.convertTo(depth, CV_64FC1); // I do not know why it is needed to be transformed to 64bit image my input is 32bit

Mat nor(depth.size(), CV_64FC3);

for(int x = 1; x < depth.cols - 1; ++x)

{

for(int y = 1; y < depth.rows - 1; ++y)

{

/*double dzdx = (depth(y, x+1) - depth(y, x-1)) / 2.0;

double dzdy = (depth(y+1, x) - depth(y-1, x)) / 2.0;

Vec3d d = (-dzdx, -dzdy, 1.0);*/

Vec3d t(x,y-1,depth.at<double>(y-1, x)/*depth(y-1,x)*/);

Vec3d l(x-1,y,depth.at<double>(y, x-1)/*depth(y,x-1)*/);

Vec3d c(x,y,depth.at<double>(y, x)/*depth(y,x)*/);

Vec3d d = (l-c).cross(t-c);

Vec3d n = normalize(d);

nor.at<Vec3d>(y,x) = n;

}

}

imshow("normals", nor);

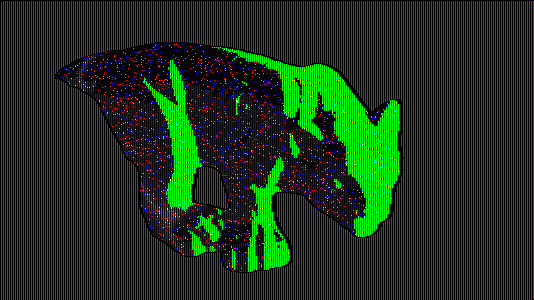

I get this one:

which seems quite ok. However, if I use a 32bit image instead of 64bit the image is corrupted:

I see problem like this : In image plane M1(x1,y1,-f) f focal Optical center at coordinate (0,0,0) depth of M1 is d1 so there is a physical point P1 (vector equationà OM1=aOP1 you can find P1 coordinates and repeat procedure for M2(x1-1,y1,-f) and M3(x1,y1,-1) you have got a plane and you find normal to this plane...

@berak I do not see your answer.

^^ sorry, i deleted the comment, because it was far too naive, but , for the fun, here it is again (just don't take it too serious ...)

so, the naive way would be just:

n = (t-c).cross(l-c);// normal at pixel cbad thing about is, it contains a bias, depending , which neighbours you choose for the cross product

@berak no problem, I am quite new in the field as well so I am trying to figure it out. However, why are you taking only the left and top neighbor pixels. Shouldn't the right and bottom ones be included as well, or even the diagonals.

@LBerger I did not really get what you want to say, maybe if you could try to explain it a bit more.

Moreover, searching a bit I found this post in SO which describes a quite nice procedure. I am trying to figure out now how to implement it.

Have you got xyz of all image points?

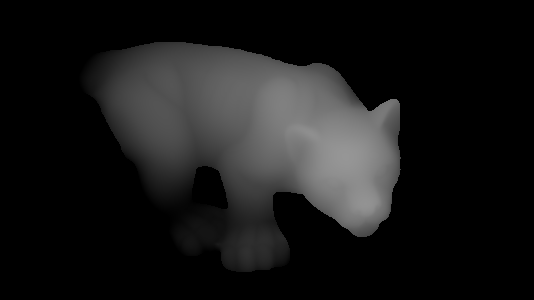

Yes I have this information. In the actual depth image of the image I have above what you see as black is NaN values (no depth info) and the gray-ish pixels representing the panther is double distance values.

also there is this other link but I still cannot understand. How I do calculate the

Kmatrix that is mentioned there.K matrix is only intrincec parameters. You must have this parameter in same unit of you depth map. Don't you want to use point cloud?

Ok I see I do not have the info for this matrix. My only input is the depth image and from that one I want to get the normals using the cross product of the neighbors.

That's not possible without this matrix. Imagine a constant depth map : it's not a plane but a cylindrical because it's stenope model... You have to estimate some values. I think this method is only a shortcut of my first comment.