2.4.3.2: How can I fix bad stereo alignment?

I set up a stereo rig with two PS3-Eye cameras that were converted to infrared. With this setup I capture a set of 25 frames in which the asymmetric dot pattern is visible.

1. With calls to calibrateCamera, I calibrate each camera individually.
2. The resulting camera matrices and distortion coefficients are then passed to stereoCalibrate.
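Both calibrateCamera and stereoCalibrate return an overall RMS reprojection error in pixels. The sketch below shows what that number measures: the square root of the mean squared distance between the detected dot centers and the centers reprojected with the estimated model. It uses a hypothetical Pt2 type and plain vectors instead of OpenCV types. As far as I know, stereoCalibrate pools the points of both cameras into a single value, so the two numbers are not directly comparable per camera.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal 2D point; hypothetical stand-in for cv::Point2f in this sketch.
struct Pt2 { double x, y; };

// Overall RMS reprojection error: sqrt(sum of squared pixel distances / number of points).
// observed[v] and projected[v] hold the points of view v in the same order.
double rmsReprojectionError(const std::vector<std::vector<Pt2>>& observed,
                            const std::vector<std::vector<Pt2>>& projected) {
    assert(observed.size() == projected.size());
    double sumSq = 0.0;
    std::size_t count = 0;
    for (std::size_t v = 0; v < observed.size(); ++v) {
        assert(observed[v].size() == projected[v].size());
        for (std::size_t i = 0; i < observed[v].size(); ++i) {
            const double dx = observed[v][i].x - projected[v][i].x;
            const double dy = observed[v][i].y - projected[v][i].y;
            sumSq += dx * dx + dy * dy;
            ++count;
        }
    }
    return count > 0 ? std::sqrt(sumSq / static_cast<double>(count)) : 0.0;
}
```

Because the error is averaged over every dot in every view, a low value can still hide a few poorly detected frames.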

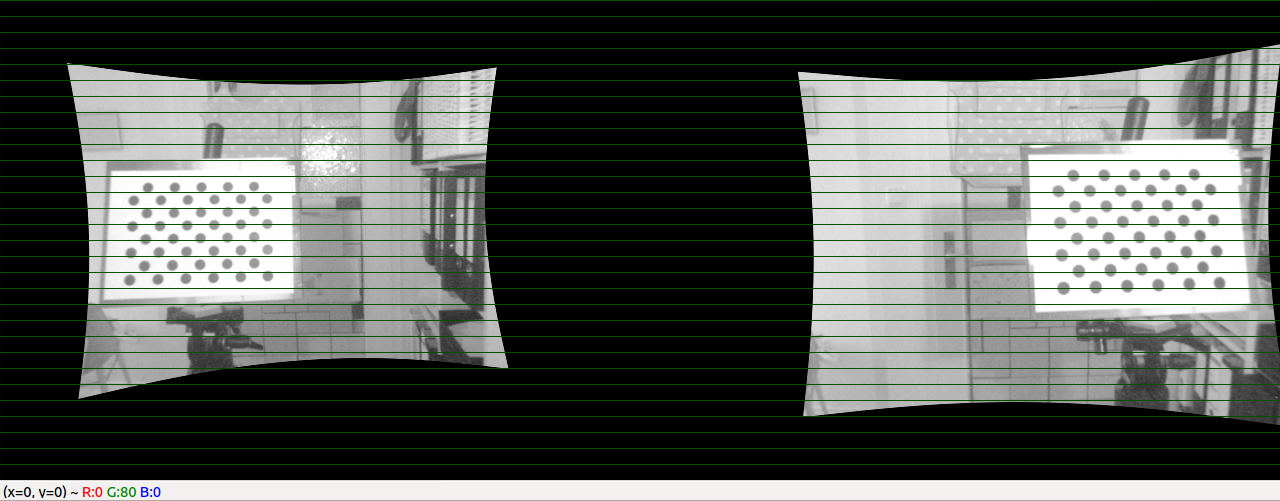

1) gives me RMS of ~0.4, 2) results in RMS of 0.96. The outcome you can see in the image below:

This is a side by side rendering with the rectified (with initUndistortRectifyMap and remap) images. What I don't understand is why the two views have a different scaling. Normally the lines shown should be epipolar and thus hitting the same dots in both images.
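The two symptoms (lines not hitting the same dots, and different scales) can be put into numbers once the dot centers have been detected in both rectified images. A minimal sketch, assuming the centers are already matched index by index; Pt2 and all function names are hypothetical. In a correct rectification, matched dots lie on the same row, so the mean vertical disparity should be close to 0 and the ratio of the pattern's vertical extents close to 1.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal 2D point; hypothetical stand-in for cv::Point2f.
struct Pt2 { double x, y; };

// Mean absolute row difference (pixels) between matched dot centers
// in the rectified left and right images.
double meanVerticalDisparity(const std::vector<Pt2>& left,
                             const std::vector<Pt2>& right) {
    assert(left.size() == right.size() && !left.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < left.size(); ++i)
        sum += std::fabs(left[i].y - right[i].y);
    return sum / static_cast<double>(left.size());
}

// Vertical extent (max y - min y) of a set of points.
double verticalExtent(const std::vector<Pt2>& pts) {
    assert(!pts.empty());
    const auto mm = std::minmax_element(pts.begin(), pts.end(),
        [](const Pt2& a, const Pt2& b) { return a.y < b.y; });
    return mm.second->y - mm.first->y;
}

// Ratio of the vertical extents of the same pattern in both views;
// a value clearly different from 1 means the views are scaled differently.
double verticalScaleRatio(const std::vector<Pt2>& left,
                          const std::vector<Pt2>& right) {
    const double r = verticalExtent(right);
    assert(r > 0.0);
    return verticalExtent(left) / r;
}
```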

So how do I fix the bad stereo alignment?

Here are the most important calls and the flags I use:

```cpp
// This is done for both cameras.
vector<Mat> rvecs, tvecs;
vector<float> reprojErrs;
double rms = calibrateCamera(objectPoints, imagePoints, imageSize,
                             cameraMatrix, distCoeffs, rvecs, tvecs,
                             s.flag | CV_CALIB_FIX_K4 | CV_CALIB_FIX_K5);

// This is done with the resulting Mats from above.
Mat map1_1, map1_2, map2_1, map2_2;
Mat R, T, E, F;
double stereoRms = stereoCalibrate(objectPoints, imagePoints1, imagePoints2,
                                   cameraMatrix1, distCoeffs1,
                                   cameraMatrix2, distCoeffs2,
                                   imageSize, R, T, E, F,
                                   TermCriteria(CV_TERMCRIT_ITER + CV_TERMCRIT_EPS, 100, 1e-5),
                                   CV_CALIB_FIX_ASPECT_RATIO +
                                   CV_CALIB_ZERO_TANGENT_DIST +
                                   CV_CALIB_SAME_FOCAL_LENGTH +
                                   CV_CALIB_RATIONAL_MODEL +
                                   CV_CALIB_FIX_K3 + CV_CALIB_FIX_K4 + CV_CALIB_FIX_K5);

// R1, R2, P1, P2 are computed by stereoRectify() from R and T (call not shown).
// Undistortion/rectification maps are calculated for both cameras.
initUndistortRectifyMap(cameraMatrix1, distCoeffs1, R1, P1, imageSize, CV_16SC2, map1_1, map1_2);
initUndistortRectifyMap(cameraMatrix2, distCoeffs2, R2, P2, imageSize, CV_16SC2, map2_1, map2_2);

// Finally, the rectified views r1 and r2 are computed from the captured views v1 and v2.
remap(v1, r1, map1_1, map1_2, CV_INTER_LINEAR);
remap(v2, r2, map2_1, map2_2, CV_INTER_LINEAR);
```
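To tell whether the error is already present in the stereoCalibrate output or only appears during rectification, the fundamental matrix F can be checked on matched dot centers before any remapping. samples/cpp/stereo_calib.cpp performs a similar "average epipolar error" check with undistortPoints and computeCorrespondEpilines. The sketch below is a plain C++ version of the underlying point-to-line distance; Mat3 and epipolarDistance are hypothetical names, and the points are assumed to be undistorted already.

```cpp
#include <cassert>
#include <cmath>

// Row-major 3x3 matrix, e.g. a fundamental matrix F from stereoCalibrate.
struct Mat3 { double m[3][3]; };

// Distance (pixels) of point (x2, y2) in image 2 from the epipolar line
// l2 = F * [x1, y1, 1]^T induced by point (x1, y1) in image 1.
double epipolarDistance(const Mat3& F, double x1, double y1,
                        double x2, double y2) {
    // Line coefficients a*x + b*y + c = 0 in image 2.
    const double a = F.m[0][0] * x1 + F.m[0][1] * y1 + F.m[0][2];
    const double b = F.m[1][0] * x1 + F.m[1][1] * y1 + F.m[1][2];
    const double c = F.m[2][0] * x1 + F.m[2][1] * y1 + F.m[2][2];
    const double norm = std::sqrt(a * a + b * b);
    assert(norm > 0.0);
    return std::fabs(a * x2 + b * y2 + c) / norm;
}
```

Averaging this distance over all matched dots gives one number for the quality of F; if it is small while the rectified views still disagree, the problem is more likely in the rectification step than in the stereo calibration itself.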

Things I noticed:

In this answer, @Michael_Koval noted that the order of the pattern points matters. In my workflow the pattern points are generated in two different steps, but given the low RMS I doubt this is the problem. Could it be?
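One way to rule out an ordering mismatch is to build the object points with a single shared function and reuse the same list for calibrateCamera and stereoCalibrate. A minimal sketch, assuming the row-major layout that, as far as I know, OpenCV's samples/cpp/calibration.cpp uses for the asymmetric circle grid; Pt3 and asymmetricGridPoints are hypothetical names. The order still has to match the order in which findCirclesGrid returns the detected centers.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal 3D point; hypothetical stand-in for cv::Point3f.
struct Pt3 { float x, y, z; };

// Object points for an asymmetric circle grid of rows x cols dots, row-major.
// Columns are 2*spacing apart; odd rows are shifted by one spacing (half a column).
std::vector<Pt3> asymmetricGridPoints(int rows, int cols, float spacing) {
    assert(rows > 0 && cols > 0);
    std::vector<Pt3> pts;
    pts.reserve(static_cast<std::size_t>(rows) * static_cast<std::size_t>(cols));
    for (int i = 0; i < rows; ++i)
        for (int j = 0; j < cols; ++j)
            pts.push_back({(2 * j + i % 2) * spacing, i * spacing, 0.0f});
    return pts;
}
```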

When I call initUndistortRectifyMap with R1 and P1, are the image points warped into a common rectified space, or are they transformed into the view of the other camera? The example I used as a reference (samples/cpp/stereo_calib.cpp) makes the same calls, so I don't understand my results.
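For reference, the OpenCV documentation describes initUndistortRectifyMap as computing, for every pixel of the rectified output, the position to sample in the original image: back-project with the new camera matrix (the left 3x3 part of P1), apply the inverse of R1, then project with the original camera matrix and distortion. As I read it, each camera is mapped into its own rectified view with a shared orientation, not into the other camera's view. The sketch drops distortion for brevity; Mat3, Intrinsics, Pt2 and rectifiedToSource are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Row-major 3x3 matrix, used for a rectification rotation such as R1.
struct Mat3 { double m[3][3]; };
// Focal lengths and principal point of a camera matrix or of the left 3x3 of P1/P2.
struct Intrinsics { double fx, fy, cx, cy; };
struct Pt2 { double x, y; };

// For rectified output pixel (u, v), return where to sample in the original image.
// All distortion coefficients are assumed to be zero in this sketch.
Pt2 rectifiedToSource(double u, double v, const Intrinsics& K,
                      const Mat3& R, const Intrinsics& P) {
    // Back-project the rectified pixel with the new intrinsics from P.
    const double x = (u - P.cx) / P.fx;
    const double y = (v - P.cy) / P.fy;
    // Apply R^-1, which equals R^T for a rotation matrix.
    const double X = R.m[0][0] * x + R.m[1][0] * y + R.m[2][0];
    const double Y = R.m[0][1] * x + R.m[1][1] * y + R.m[2][1];
    const double W = R.m[0][2] * x + R.m[1][2] * y + R.m[2][2];
    assert(W != 0.0);
    // Project with the original intrinsics K.
    return Pt2{K.fx * (X / W) + K.cx, K.fy * (Y / W) + K.cy};
}
```

With an identity R and P equal to K, the mapping is the identity. Halving the focal length in P doubles the offset from the principal point at which the source image is sampled, which shows that the focal length taken from P sets the scale of the rectified view.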

Update:

stereoRectify outputs the translation matrix with an error below 1%, so I assume the internal calculations are correct, which leaves me even more puzzled. The ROIs for both views are also computed correctly.
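For a horizontally rectified pair, the OpenCV documentation gives P2 the form [f 0 cx2 Tx*f; 0 f cy 0; 0 0 1 0], so the baseline can be read off P2 and compared against the measured distance between the cameras. A minimal sketch with hypothetical function names; the baseline comes out in the same units as the object points.

```cpp
#include <cassert>
#include <cmath>

// Baseline |Tx| from the entries P2(0,3) = Tx*f and P2(0,0) = f.
double baselineFromP2(double p2_03, double p2_00) {
    assert(p2_00 != 0.0);
    return std::fabs(p2_03 / p2_00);
}

// Relative deviation of an estimate from a measured reference value.
double relativeError(double estimate, double measured) {
    assert(measured != 0.0);
    return std::fabs(estimate - measured) / std::fabs(measured);
}
```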