Get the 3D Point in another coordinate system

Hi there!

I have a system that uses an RGB-D camera and a marker.

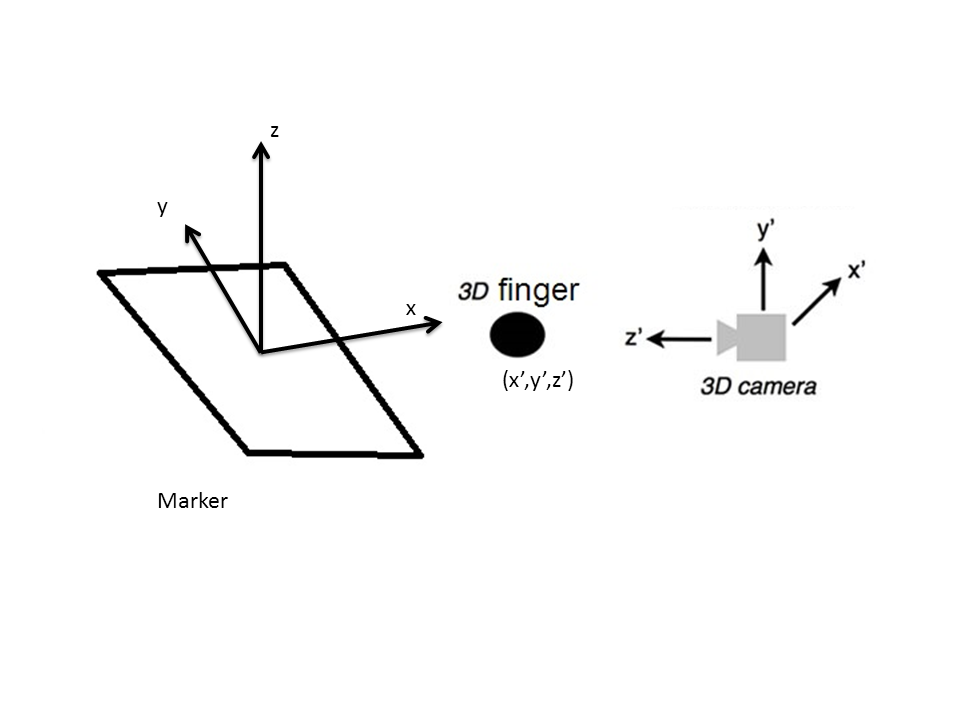

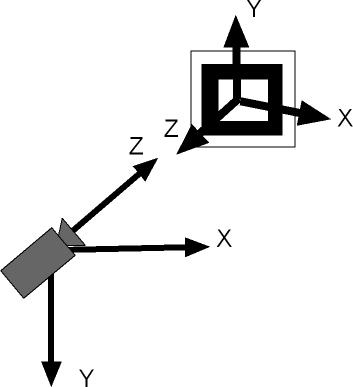

I can successfully get the origin of the marker's coordinate system (the center of the marker) using an augmented reality library (aruco). Also, using the same camera, I managed to get the 3D position of my finger with respect to the camera's world coordinate system (x', y', z').
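If the library reports the marker pose the way OpenCV's aruco module does, you get a rotation vector `rvec` (axis-angle form) and a translation vector `tvec` that map points from the marker frame into the camera frame. OpenCV's `cv::Rodrigues` converts `rvec` into a 3x3 rotation matrix. As a dependency-free sketch of that conversion, assuming the pose really comes in this rvec/tvec form, here is Rodrigues' formula written out by hand (the names `Vec3`, `Mat3` and `rotationFromRvec` are hypothetical, not part of any library):

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Convert an axis-angle rotation vector (ASSUMED to be the "rvec" the
// marker library reports) into a 3x3 rotation matrix via Rodrigues' formula:
//   R = I + sin(theta) K + (1 - cos(theta)) K^2
// where theta = |rvec| and K is the skew-symmetric matrix of the unit axis.
// In OpenCV, cv::Rodrigues performs the same conversion.
Mat3 rotationFromRvec(const Vec3& rvec) {
    const double theta =
        std::sqrt(rvec[0] * rvec[0] + rvec[1] * rvec[1] + rvec[2] * rvec[2]);
    Mat3 R = {{{1.0, 0.0, 0.0}, {0.0, 1.0, 0.0}, {0.0, 0.0, 1.0}}};
    if (theta < 1e-12) {
        return R;  // (near-)zero rotation: identity matrix
    }
    const double kx = rvec[0] / theta, ky = rvec[1] / theta, kz = rvec[2] / theta;
    // Skew-symmetric cross-product matrix of the unit rotation axis k.
    const Mat3 K = {{{0.0, -kz, ky}, {kz, 0.0, -kx}, {-ky, kx, 0.0}}};
    const double s = std::sin(theta);
    const double c = 1.0 - std::cos(theta);
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            double k2 = 0.0;  // entry (i, j) of K * K
            for (int m = 0; m < 3; ++m) {
                k2 += K[i][m] * K[m][j];
            }
            R[i][j] += s * K[i][j] + c * k2;
        }
    }
    return R;
}
```

For example, `rotationFromRvec({0.0, 0.0, PI / 2})` yields a 90° rotation about z, i.e. rows (0, -1, 0), (1, 0, 0), (0, 0, 1) up to rounding.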

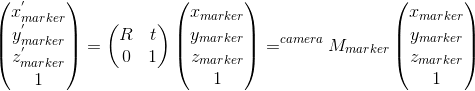

Now I want to apply a transformation to the 3D position of the finger (x', y', z') so that I get a new point (x, y, z) expressed in the marker's coordinate system.
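A minimal derivation of that transformation, assuming the marker pose $(R, \mathbf{t})$ maps marker coordinates into camera coordinates (the convention OpenCV's aruco module uses for its rvec/tvec output) and that both points are already expressed in frames of the same handedness. With $\mathbf{p}_c = (x', y', z')^\top$ and $\mathbf{p}_m = (x, y, z)^\top$, and using $R^{-1} = R^\top$ for a rotation matrix:

```latex
\mathbf{p}_c = R\,\mathbf{p}_m + \mathbf{t}
\quad\Longrightarrow\quad
\mathbf{p}_m = R^\top(\mathbf{p}_c - \mathbf{t}) = R^\top\mathbf{p}_c - R^\top\mathbf{t}

\begin{bmatrix} \mathbf{p}_m \\ 1 \end{bmatrix}
=
\begin{bmatrix} R^\top & -R^\top\mathbf{t} \\ \mathbf{0}^\top & 1 \end{bmatrix}
\begin{bmatrix} \mathbf{p}_c \\ 1 \end{bmatrix}
```

The 4x4 matrix on the right is simply the inverse of the marker's pose matrix, so it can be computed once per frame and applied to every tracked point.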

It is also worth mentioning that the camera's coordinate system is left-handed, while the marker's coordinate system is right-handed.
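A left-handed and a right-handed frame differ by a reflection, which no rotation can express, so the finger point has to be converted to the same handedness as the frame the marker pose is given in before the pose is inverted. Negating exactly one axis does this (the reflection matrix has determinant -1). Which axis to negate depends on the depth camera SDK's convention, so the `axis` parameter below is an assumption to check against that SDK's documentation; the sketch also assumes the depth and color frames share the same origin. `mirrorAxis` is a hypothetical helper name.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;

// Convert a point between a left-handed and a right-handed frame that share
// the same origin and differ only in the direction of one axis.
// ASSUMPTION: which axis is mirrored (0 = x, 1 = y, 2 = z) depends on the
// depth camera's SDK convention and must be verified for your device.
// Applying the function twice returns the original point.
Vec3 mirrorAxis(Vec3 p, int axis) {
    assert(axis >= 0 && axis < 3);
    p[axis] = -p[axis];
    return p;
}
```

For example, if the SDK's x axis points the opposite way from OpenCV's, `mirrorAxis({x1, y1, z1}, 0)` gives the point in OpenCV's right-handed camera convention.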

Here is a picture:

Can you tell me what I have to do? Are there any OpenCV functions for this, or a calculation I could do in C++ to get the required result?
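In outline, one approach is: bring the finger point into the same handedness as the camera frame the marker pose refers to, convert `rvec` to a matrix `R` (in OpenCV, with `cv::Rodrigues`), and then apply the inverse of the marker pose, p_marker = R^T (p_cam - t). A minimal sketch of that last step, assuming `p_cam = R * p_marker + t` as OpenCV's aruco module defines its pose, using plain `std::array` instead of `cv::Mat` (the names `Vec3`, `Mat3` and `cameraToMarker` are hypothetical):

```cpp
#include <array>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Express a camera-frame point in the marker frame.
// ASSUMPTION: (R, t) is the marker pose in the camera frame, i.e.
//   p_cam = R * p_marker + t
// so inverting the rigid transform gives p_marker = R^T * (p_cam - t).
Vec3 cameraToMarker(const Mat3& R, const Vec3& t, const Vec3& pCam) {
    // Translate so the marker origin becomes the origin.
    const Vec3 d = {pCam[0] - t[0], pCam[1] - t[1], pCam[2] - t[2]};
    Vec3 pMarker = {0.0, 0.0, 0.0};
    for (int i = 0; i < 3; ++i) {
        // Row i of R^T is column i of R.
        for (int j = 0; j < 3; ++j) {
            pMarker[i] += R[j][i] * d[j];
        }
    }
    return pMarker;
}
```

With OpenCV types the same computation is `cv::Mat R; cv::Rodrigues(rvec, R); cv::Mat pMarker = R.t() * (pCam - tvec);`, assuming `pCam` and `tvec` are 3x1 `CV_64F` matrices. Note that the aruco translation is in the same unit as the marker side length you pass in, so the depth point must use the same unit (for example, metres in both).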

Hi. If you found a solution to this problem, could you please post the code?