Fit ellipse with most points on contour (instead of least squares)

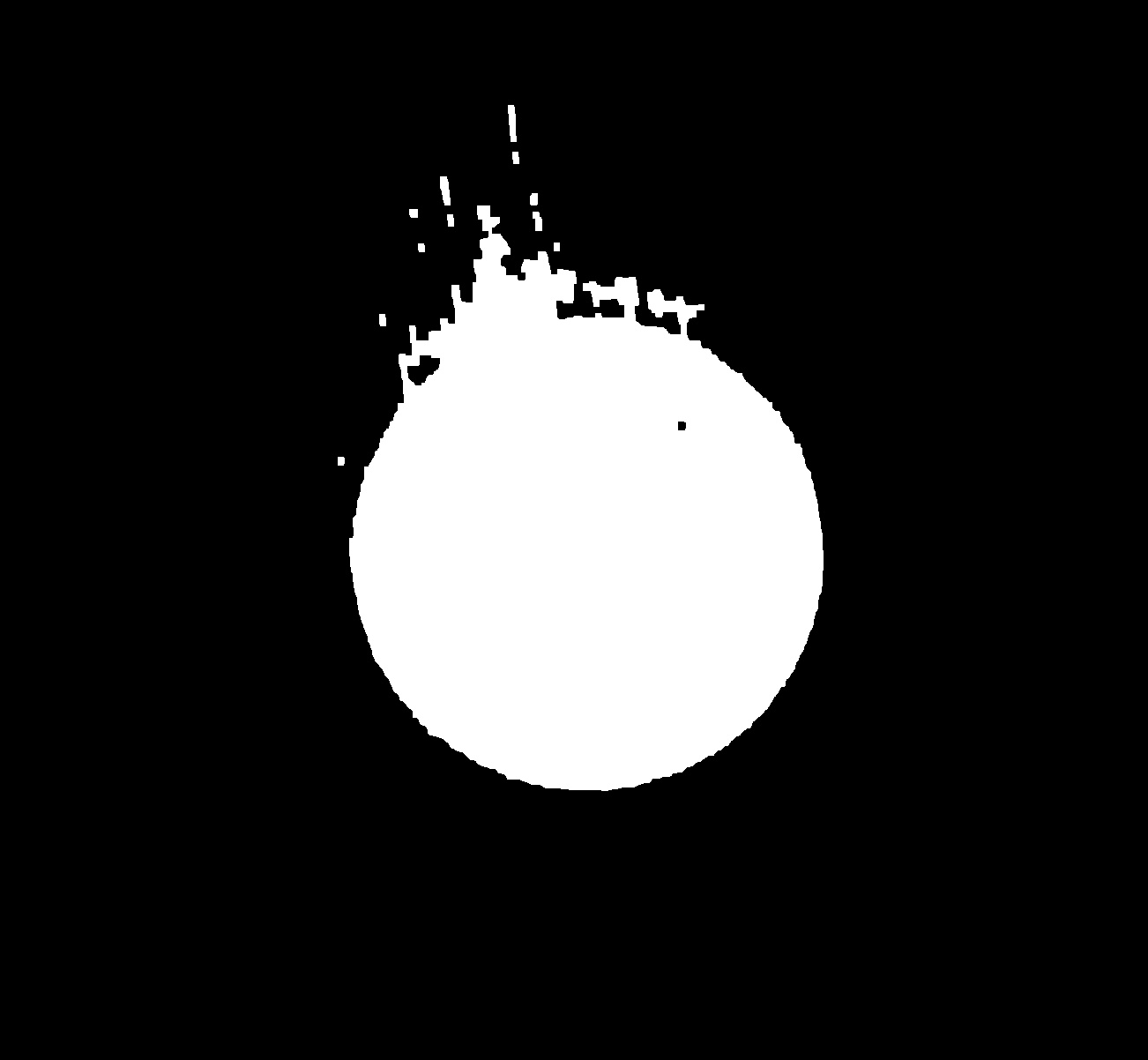

I have a binarized image that I've already cleaned up with open/close morphology operations (this is as clean as I can get it, trust me on this). It looks like this:

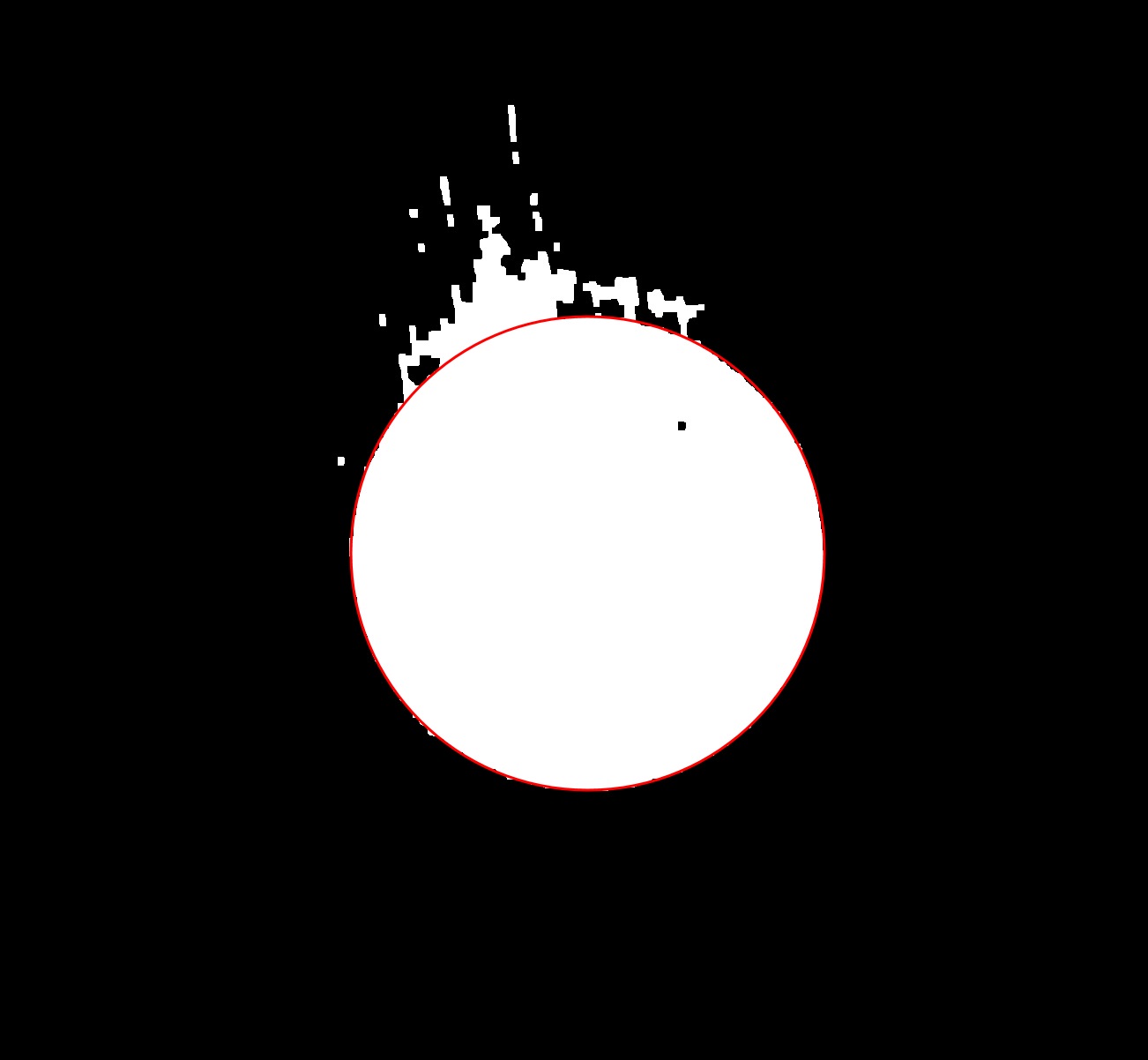

As you can see, there is an obvious ellipse with some distortion on the top. I'm trying to figure out how to fit an ellipse to it, such that it maximizes the number of points on the fitted ellipse that correspond to edges on the shape. That is, I want a result like this:

However, I can't find a way to do this in OpenCV. Using the common tools `fitEllipse` (blue line) and `minAreaRect` (green line), I get these results:

These obviously do not represent the actual ellipse I'm trying to detect. Any thoughts on how I could accomplish this? I'm happy to see examples in Python or C++.
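The objective described here (maximize the number of contour points that lie on the fitted ellipse) is what RANSAC-style fitting optimizes, as opposed to the least-squares error that `fitEllipse` minimizes. The sketch below is plain Python with no OpenCV dependency: it repeatedly fits an exact conic through 5 random contour points, counts the points within a pixel tolerance (using the Sampson distance as an approximation of point-to-ellipse distance), and keeps the conic with the most such points. The function names, the 1.5 px tolerance and the 500 iterations are illustrative assumptions. It also assumes the ellipse does not pass through the image origin, because the conic is normalized so its constant term is -1.

```python
import math
import random


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting.
    Returns None if the system is (numerically) singular."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            return None
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def conic_through(pts):
    """Conic A x^2 + B xy + C y^2 + D x + E y = 1 through 5 points (assumes it misses the origin)."""
    return solve([[x * x, x * y, y * y, x, y] for x, y in pts], [1.0] * 5)


def sampson_distance(p, x, y):
    """First-order approximation of the geometric distance from (x, y) to the conic p."""
    a, b, c, d, e = p
    f = a * x * x + b * x * y + c * y * y + d * x + e * y - 1.0
    g = math.hypot(2 * a * x + b * y + d, b * x + 2 * c * y + e)
    return abs(f) / g if g > 0 else math.inf


def conic_center(p):
    """Centre of the conic: the point where its gradient vanishes."""
    a, b, c, d, e = p
    det = 4 * a * c - b * b
    return (b * e - 2 * c * d) / det, (b * d - 2 * a * e) / det


def ransac_ellipse(points, iters=500, tol=1.5, seed=0):
    """Return (conic, inliers) for the ellipse passing within `tol` px of the most points."""
    rng = random.Random(seed)
    best, best_inliers = None, []
    for _ in range(iters):
        p = conic_through(rng.sample(points, 5))
        if p is None or p[1] ** 2 - 4 * p[0] * p[2] >= 0:
            continue  # degenerate sample, or the conic is not an ellipse
        inliers = [q for q in points if sampson_distance(p, *q) < tol]
        if len(inliers) > len(best_inliers):
            best, best_inliers = p, inliers
    return best, best_inliers


if __name__ == "__main__":
    # Synthetic contour: an ellipse centred at (100, 80) plus a flat "bump" above its top.
    pts = [(100 + 40 * math.cos(2 * math.pi * k / 60), 80 + 25 * math.sin(2 * math.pi * k / 60))
           for k in range(60)]
    pts += [(94.0 + i, 45.0) for i in range(12)]
    conic, inliers = ransac_ellipse(pts)
    print(len(inliers), conic_center(conic))
```

On a real image, `points` could come from the contour returned by `cv2.findContours` (flattened to `(x, y)` tuples), and the winning inliers could then be converted to a NumPy array and passed to `cv2.fitEllipse` for a final least-squares polish that ignores the distorted top.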

EDIT: Original image and intermediate processing steps below, as requested:

Original image:

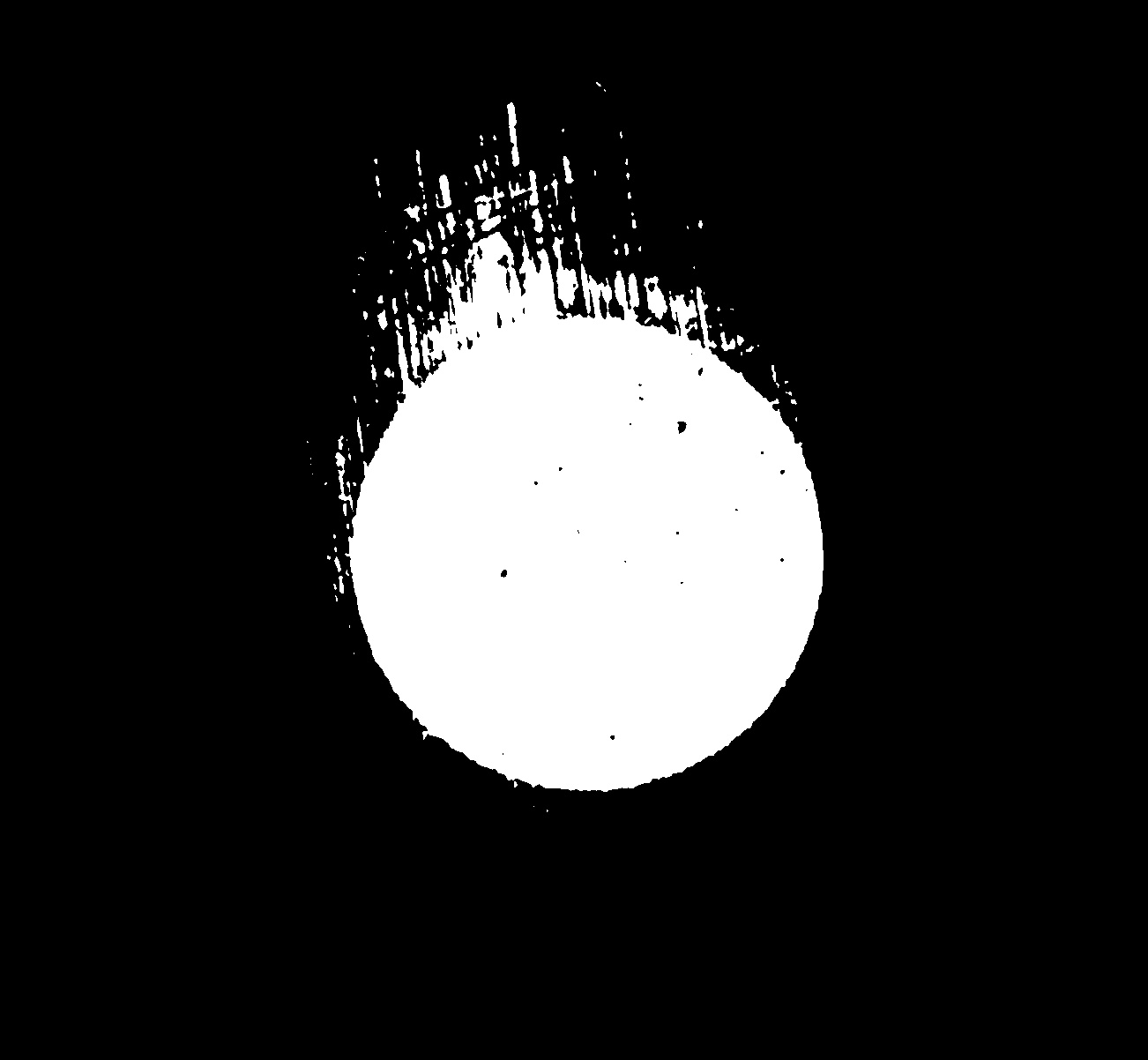

After an Otsu threshold:

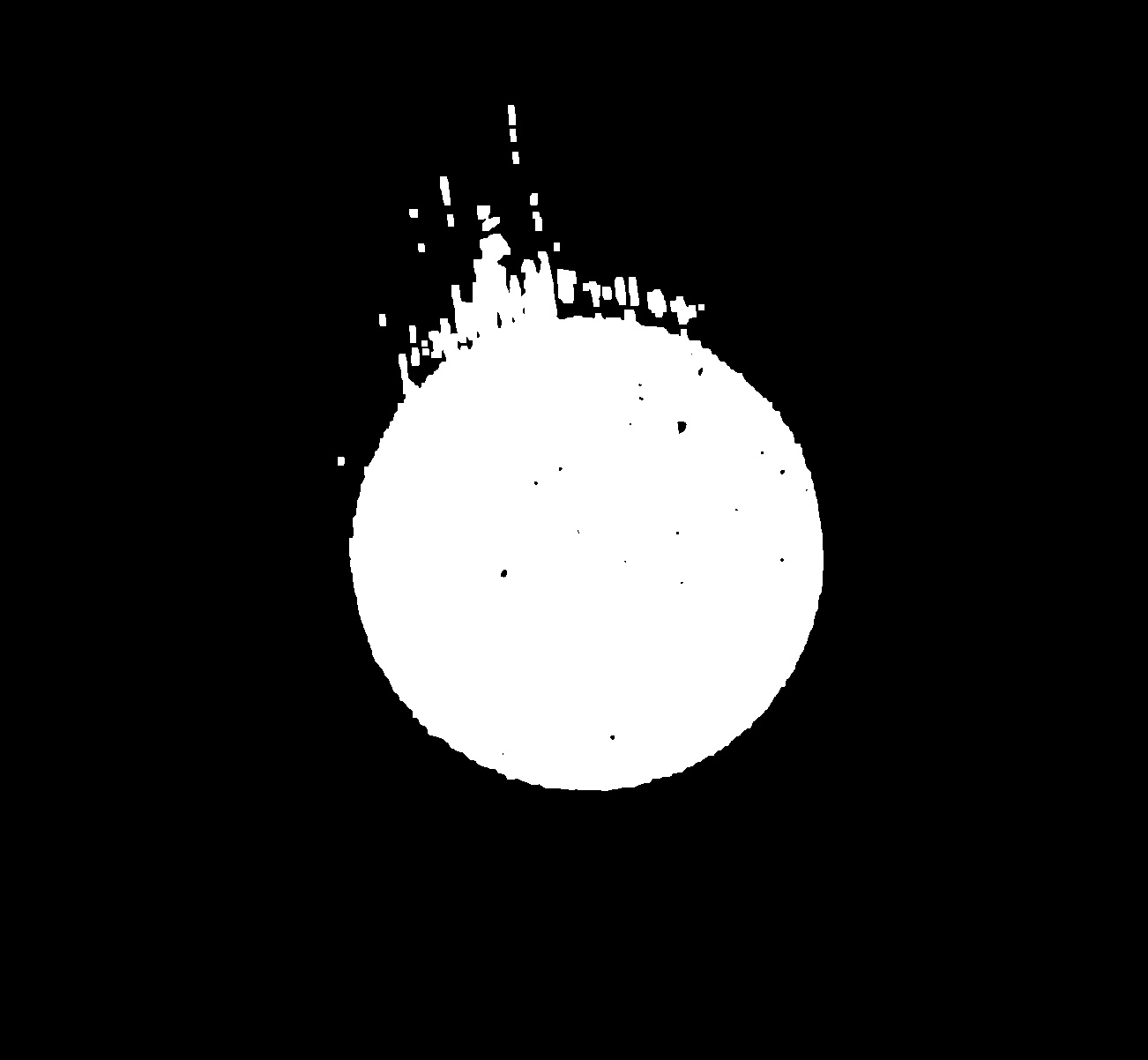

After Opening morph (7x7 kernel):

The image at the very top of this post is the result of a subsequent Closing morph (7x7 kernel). Obviously, this particular image could be improved by manually setting a threshold level. However, the images I have to process can vary substantially in lighting conditions, which is why I must use something adaptive (like Otsu).
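For reference, Otsu's method picks one threshold per image from its histogram, choosing the value that maximizes the between-class variance of the two resulting pixel classes; that is what lets it adapt to different lighting. In OpenCV it is requested with `cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)`. The plain-Python sketch below (hypothetical helper names, 8-bit grayscale values in a flat list) only illustrates the computation. Note that it is still a single global threshold per image; `cv2.adaptiveThreshold` is the locally adaptive alternative if lighting varies within one image.

```python
def otsu_threshold(pixels):
    """Return the 8-bit threshold t that maximises the between-class variance
    of the two classes {v <= t} and {v > t}."""
    hist = [0] * 256
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of those pixel values
    best_t, best_score = 0, -1.0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so there is nothing to separate
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        score = w0 * w1 * (m0 - m1) ** 2  # proportional to the between-class variance
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def binarize(pixels, t):
    """Same rule as THRESH_BINARY with maxval 255: values above t become 255, the rest 0."""
    return [255 if v > t else 0 for v in pixels]


if __name__ == "__main__":
    pixels = [40] * 50 + [60] * 50 + [180] * 100 + [220] * 100
    t = otsu_threshold(pixels)
    print(t, binarize([40, 60, 180, 220], t))
```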

Try repeatedly calling the function after rejecting outliers? What you want is iteratively reweighted least-squares.

`cv::fitLine()` does this, so take a look at that source for inspiration.

OK, interesting. How would I determine which points on a contour are outliers, after fitting an ellipse?
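One way to answer the outlier question, sketched in plain Python under stated assumptions: fit by algebraic least squares, measure every contour point's approximate distance to the fit (Sampson distance), treat points farther than `max(min_tol, k * median distance)` as outliers, refit on the rest, and repeat until the inlier set stops changing. This is a hard-rejection variant of the idea, not true iteratively reweighted least squares, which would instead down-weight distant points (as `cv::fitLine` does for its M-estimator distance types such as `DIST_HUBER`). The helper names and the defaults `k=2.0` and `min_tol=1.0` px are illustrative assumptions, and the code assumes the contour surrounds its own centroid, since the conic is normalized with constant term -1 in centroid-centred coordinates. Because the first fit uses every point, a large outlier fraction can drag it far enough that the wrong points get rejected.

```python
import math


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            return None  # singular system
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_conic_lsq(pts):
    """Algebraic least squares: minimise sum (A x^2 + B xy + C y^2 + D x + E y - 1)^2."""
    rows = [(x * x, x * y, y * y, x, y) for x, y in pts]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(5)] for i in range(5)]
    atb = [sum(r[i] for r in rows) for i in range(5)]
    return solve(ata, atb)


def sampson_distance(p, x, y):
    """First-order approximation of the geometric distance from (x, y) to the conic p."""
    a, b, c, d, e = p
    f = a * x * x + b * x * y + c * y * y + d * x + e * y - 1.0
    g = math.hypot(2 * a * x + b * y + d, b * x + 2 * c * y + e)
    return abs(f) / g if g > 0 else math.inf


def fit_ellipse_rejecting_outliers(points, k=2.0, min_tol=1.0, max_iter=10):
    """Refit on points within max(min_tol, k * median distance) until the inlier set is stable.
    Returns (centre_in_pixels, inlier_indices)."""
    n = len(points)
    # Normalise to zero mean and unit RMS radius so the normal equations stay well conditioned.
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    s = math.sqrt(sum((x - mx) ** 2 + (y - my) ** 2 for x, y in points) / n)
    norm = [((x - mx) / s, (y - my) / s) for x, y in points]
    keep = list(range(n))
    for _ in range(max_iter):
        p = fit_conic_lsq([norm[i] for i in keep])
        if p is None:
            return None, keep
        dist = [s * sampson_distance(p, x, y) for x, y in norm]  # distances of ALL points, in pixels
        tol = max(min_tol, k * sorted(dist)[n // 2])
        new_keep = [i for i in range(n) if dist[i] <= tol]
        if new_keep == keep or len(new_keep) < 5:
            break
        keep = new_keep
    p = fit_conic_lsq([norm[i] for i in keep])  # final fit on the settled inlier set
    if p is None:
        return None, keep
    a, b, c, d, e = p
    det = 4 * a * c - b * b
    cx, cy = (b * e - 2 * c * d) / det, (b * d - 2 * a * e) / det
    return (mx + s * cx, my + s * cy), keep
```

For example, with 60 points on an ellipse centred at (100, 80) plus a few points sitting 10 px above its top, the first fit is pulled upward, the bump points exceed the tolerance and are dropped, and the refit on the remaining points recovers the ellipse.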

@Jordan Can you post the original image?

@supra56 Sure, I edited the post with more images.