Odd effect of blockSize in StereoSGBM

Hey there,

I am tuning the parameters of my stereo vision pipeline, including the stereo matching step, for which I use StereoSGBM. When setting the blockSize parameter I get unexpected results. First of all, here is my interpretation of the parameter:

As I understand it, the SGBM algorithm optimizes along multiple scan lines (is that where the G for "global" comes from?). In other words, for each pixel we look for the best disparity value, and the cost function for that search is accumulated along the scan lines.
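To make that concrete, here is a minimal sketch of the cost aggregation along a single path direction, following the recurrence from Hirschmüller's semi-global matching paper. The function name aggregatePath, the cost[x][d] layout and the toy penalties are assumptions for illustration only; this is not OpenCV's code.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Aggregate matching costs along ONE path direction (left to right on a scan line).
// cost[x][d] is the matching cost of disparity d at column x (hypothetical layout).
// P1 penalizes a disparity change of 1 between neighbours, P2 penalizes larger jumps.
std::vector<std::vector<int>> aggregatePath(const std::vector<std::vector<int>>& cost,
                                            int P1, int P2)
{
    assert(P1 <= P2);
    std::vector<std::vector<int>> L(cost);  // first pixel on the path: L = C
    const int W = static_cast<int>(cost.size());
    const int D = W > 0 ? static_cast<int>(cost[0].size()) : 0;
    if (D == 0) return L;

    for (int x = 1; x < W; ++x) {
        const std::vector<int>& prev = L[x - 1];
        const int prevMin = *std::min_element(prev.begin(), prev.end());
        for (int d = 0; d < D; ++d) {
            int best = prev[d];                                     // same disparity
            if (d > 0)     best = std::min(best, prev[d - 1] + P1); // change by -1
            if (d + 1 < D) best = std::min(best, prev[d + 1] + P1); // change by +1
            best = std::min(best, prevMin + P2);                    // larger jump
            // Subtracting prevMin keeps the values from growing along the path.
            L[x][d] = cost[x][d] + best - prevMin;
        }
    }
    return L;
}
```

With the toy costs {{0,5,5},{4,3,4},{5,5,0}}, P1 = 1 and P2 = 4, the aggregated costs at the last pixel are {5, 5, 1}, so disparity 2 is the cheapest there. The full method sums such path costs over several directions before picking the minimum per pixel.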

MOST IMPORTANT QUESTION:

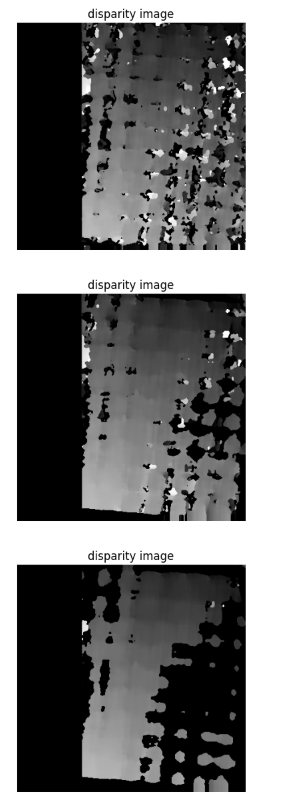

Increasing blockSize mitigates the effect of noise, BUT if a match is found for a pixel with a small blockSize, I would expect a match to be found with a larger blockSize as well. However, in my trials, increasing blockSize results in fewer matched pixels; see the images (from top to bottom, blockSize is 15, 21 and 27). HOW COME?

(The disparity maps are obtained from images of a calibration board.)

Bonus questions:

Question 1: We use a window (block) to calculate the cost, but we do this for every individual pixel, yes or no?

Question 2: What is the block's shape? Is it blockSize-by-blockSize?

Thank you for your help.

In my experience images of a checkerboard have lots of self-similar areas at different scales, with very low contrast/untextured areas in between those points. Both these aspects of checkerboards can easily mislead the block matching algorithm. If a checkerboard is representative of the typical scene you intend to calculate disparity on, this might be hard to solve without projecting a random texture pattern on the checkerboard to reduce the ambiguity and give texture as an aid to the block matcher. If the checkerboard is NOT representative of the target depth subject, then you probably want to measure the performance on the desired target subject instead. Hope these thoughts help.

Also, please study the paper "Stereo Processing by Semiglobal Matching and Mutual Information" by Heiko Hirschmüller. The OpenCV implementation (see stereosgbm.cpp) is based on it, and you'll find most of your answers in the code. If I recall correctly, blocks run from -blockSize to +blockSize offset of the pixel being matched.

FYI: In the code, the block's half-widths are computed as:

int SW2 = SADWindowSize.width/2, SH2 = SADWindowSize.height/2;

I am sifting through the code to find answers to the other questions.
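To illustrate what that integer division implies, here is a small sketch, assuming the window is centred on the pixel and covers offsets from -SW2 to +SW2 in each direction. The helper names (halfWindow, coveredSide, blockCost), the row-major image layout and the plain absolute-difference cost are assumptions for illustration; they are stand-ins, not OpenCV's actual per-pixel metric or code.

```cpp
#include <cassert>
#include <cstdlib>
#include <vector>

// Half-width of the matching window, mirroring the integer division quoted above.
int halfWindow(int blockSize)
{
    assert(blockSize >= 1);
    return blockSize / 2;
}

// Pixels per side covered by offsets -half..+half around the centre pixel.
int coveredSide(int blockSize)
{
    return 2 * halfWindow(blockSize) + 1;
}

// Sum of absolute differences between the window centred on (x, y) in the left
// image and the window centred on (x - d, y) in the right image.
// Images are row-major, 8-bit, width * height pixels (hypothetical layout).
int blockCost(const std::vector<unsigned char>& left,
              const std::vector<unsigned char>& right,
              int width, int height, int x, int y, int d, int blockSize)
{
    const int half = halfWindow(blockSize);
    // The caller must keep both windows inside the images.
    assert(d >= 0);
    assert(x - d - half >= 0 && x + half < width);
    assert(y - half >= 0 && y + half < height);

    int sum = 0;
    for (int dy = -half; dy <= half; ++dy) {
        for (int dx = -half; dx <= half; ++dx) {
            const int l = left[(y + dy) * width + (x + dx)];
            const int r = right[(y + dy) * width + (x + dx - d)];
            sum += std::abs(l - r);
        }
    }
    return sum;
}
```

Under these assumptions, an odd blockSize of 15 gives a half-width of 7 and a 15-by-15 window, and the cost is evaluated once per pixel and per candidate disparity with the window re-centred each time. An even value such as 4 would cover 5 pixels per side, which is one reason to use odd sizes.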