readNetFromTensorflow fails on retrained NN

Hi, I hope someone can help me.

My plan is to train a CNN in TensorFlow and use it in an app that uses OpenCV 3.3. For testing purposes I used the retrain script delivered with TensorFlow and retrained Inception V3 on the Flowers dataset (their tutorial: https://www.tensorflow.org/tutorials/...). I trained two models with it: a mobile one and a normal one.

The resulting graph can be used in TensorFlow, but it fails to load in OpenCV (Python, OpenCV 3.3, Win 7 64-bit). The script does not create any checkpoints, so I can't freeze the graph (is it already frozen, and can I check this?). I did use optimize_for_inference to remove the JPEG decoding.
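A rough sketch of how the "is it frozen?" question could be checked, assuming that a frozen graph is one with no variable ops left (freezing converts them to Const). The helper name `find_variable_ops`, the demo node names, and the file name `retrained_graph.pb` are placeholders for illustration, not something taken from the retrain script.

```python
from collections import namedtuple

# Op types that hold trainable state in a TensorFlow 1.x graph.
VARIABLE_OPS = {"Variable", "VariableV2", "VarHandleOp"}


def find_variable_ops(nodes):
    """Return the names of nodes whose op type is a variable op.

    `nodes` is any iterable of objects with `.name` and `.op`
    attributes, e.g. `graph_def.node` from a loaded GraphDef.
    """
    return [n.name for n in nodes if n.op in VARIABLE_OPS]


# Stand-in nodes (made-up names) so the sketch runs without TensorFlow.
Node = namedtuple("Node", ["name", "op"])
demo_nodes = [
    Node("input", "Placeholder"),
    Node("conv/weights", "Const"),
    Node("final_training_ops/weights", "VariableV2"),
]
leftover = find_variable_ops(demo_nodes)
print("frozen" if not leftover else "not frozen: %s" % leftover)

# With TensorFlow 1.x the real nodes would be loaded roughly like this:
#   import tensorflow as tf
#   graph_def = tf.GraphDef()
#   with open("retrained_graph.pb", "rb") as f:  # placeholder path
#       graph_def.ParseFromString(f.read())
#   print(find_variable_ops(graph_def.node))
```

If the list comes back empty for the real graph, all weights are already stored as constants and no separate freeze step should be needed.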

When I try to load those graphs in OpenCV, it throws the following errors:

mobile version:

"...tensorflow\tf_importer.cpp:465: error: (-2) More than one input is Const op in function cv::dnn::experimental_dnn_v1::`anonymous-namespace'::TFImporter::getConstBlob"

non-mobile version:

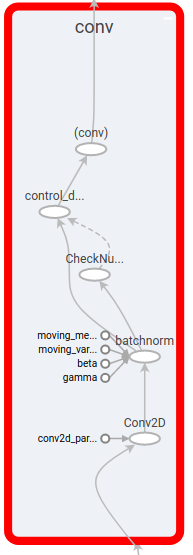

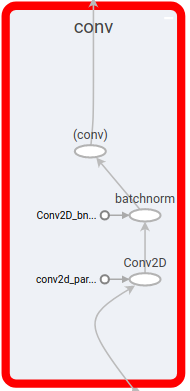

"tensorflow\tf_importer.cpp:447: error: (-2) Input layer not found: conv/batchnorm in function cv::dnn::experimental_dnn_v1::`anonymous-namespace'::TFImporter::connect"

Thanks for any help in advance ;-)

After:

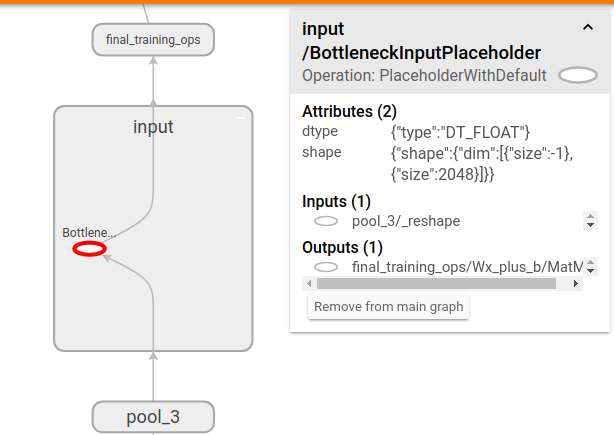

PlaceholderWithDefault

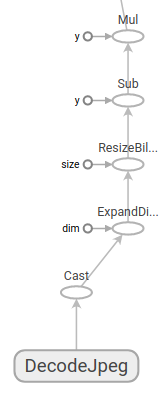

preprocessing subgraph