Pattern recognition to detect object position?

Hello all,

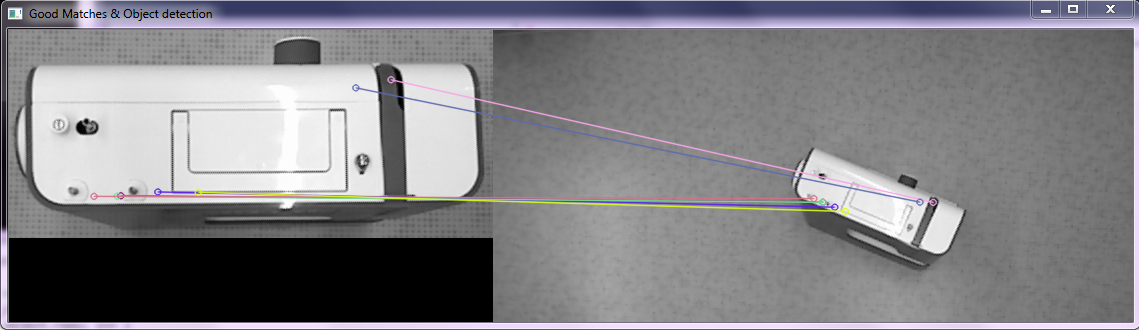

I am trying to build a pattern recognition system using OpenCV's features2d and nonfree modules. My main objective is to detect the position of an object in a scene, given 5 models of the object in different positions. The algorithm must be invariant to translation, rotation and scale. As a first attempt I am using the SURF detector, tuning its parameters, and I obtain correct matches when the position of the model and the position in the scene coincide. This can be seen in the following image:

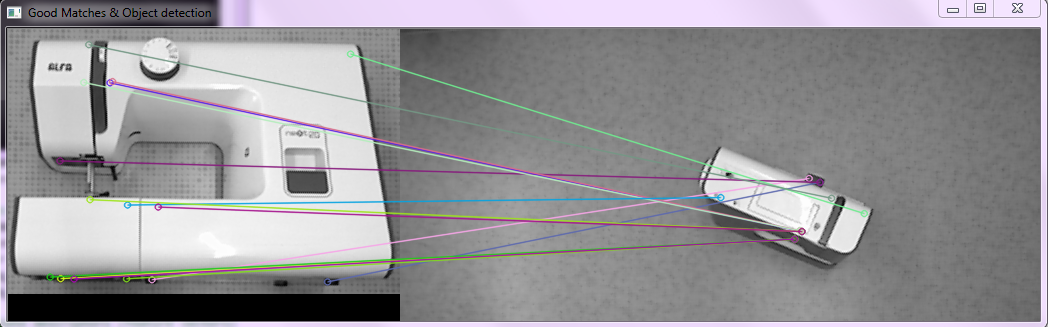

However, when I use the same algorithm with a model in another position, I also obtain matches, which are obviously incorrect:

I want to detect the position of the object in the scene, but if I obtain matches in every case, it is impossible to know which one is the real position. Is this approach correct for what I intend to do? Are there any better ideas?

Thank you all very much in advance,

Best regards, Alberto

PS: The code is attached below:

    #include <cstdio>
    #include <vector>
    #include <opencv2/core/core.hpp>
    #include <opencv2/features2d/features2d.hpp>
    #include <opencv2/nonfree/features2d.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/calib3d/calib3d.hpp>

    using namespace cv;

    int main( int argc, char** argv )
    {
        Mat img_object = imread( "Pos2Model_Gray.png", CV_LOAD_IMAGE_GRAYSCALE );
        Mat img_scene = imread( "Kinect_grayscale_36.png", CV_LOAD_IMAGE_GRAYSCALE );

        // Stop early if either image could not be loaded
        if( !img_object.data || !img_scene.data )
        {
            printf("Error reading images\n");
            return -1;
        }

        //-- Step 1: Detect the keypoints using SURF Detector
        int minHessian = 800;
        std::vector<KeyPoint> keypoints_object, keypoints_scene;
        SurfFeatureDetector detector(minHessian);
        detector.detect(img_object, keypoints_object);
        detector.detect(img_scene, keypoints_scene);

        //-- Step 2: Calculate descriptors (feature vectors)
        SurfDescriptorExtractor extractor;
        Mat descriptors_object, descriptors_scene;
        extractor.compute( img_object, keypoints_object, descriptors_object );
        extractor.compute( img_scene, keypoints_scene, descriptors_scene );

        //-- Step 3: Matching descriptor vectors using FLANN matcher
        FlannBasedMatcher matcher;
        std::vector< DMatch > matches;
        matcher.match( descriptors_object, descriptors_scene, matches );

        //-- Quick calculation of max and min distances between keypoints
        double max_dist = 0; double min_dist = 100;
        for( size_t i = 0; i < matches.size(); i++ )
        {
            double dist = matches[i].distance;
            if( dist < min_dist ) min_dist = dist;
            if( dist > max_dist ) max_dist = dist;
        }
        printf("-- Max dist : %f \n", max_dist );
        printf("-- Min dist : %f \n", min_dist );

        //-- Draw only "good" matches (i.e. whose distance is less than 1.5*min_dist )
        std::vector< DMatch > good_matches;
        for( size_t i = 0; i < matches.size(); i++ )
            if( matches[i].distance < 1.5 * min_dist )
                good_matches.push_back( matches[i] );

        Mat img_matches;
        drawMatches( img_object, keypoints_object, img_scene, keypoints_scene,
                     good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),
                     std::vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

        //-- Localize the object
        std::vector<Point2f> obj;
        std::vector<Point2f> scene;
        for( size_t i = 0; i < good_matches.size(); i++ )
        {
            //-- Get the keypoints from the good matches
            obj.push_back( keypoints_object[ good_matches[i].queryIdx ].pt );
            scene.push_back( keypoints_scene[ good_matches[i].trainIdx ].pt );
        }

        // findHomography needs at least 4 point pairs
        if( obj.size() < 4 )
        {
            printf("Not enough good matches to estimate a homography\n");
            return -1;
        }
        Mat H = findHomography( obj, scene, CV_RANSAC );

        //-- Get the corners from the image_1 ( the object to be "detected" )
        std::vector<Point2f> obj_corners(4);
        obj_corners[0] = Point2f( 0, 0 );
        obj_corners[1] = Point2f( (float)img_object.cols, 0 );
        obj_corners[2] = Point2f( (float)img_object.cols, (float)img_object.rows );
        obj_corners[3] = Point2f( 0, (float)img_object.rows );
        std::vector<Point2f> scene_corners(4);
        perspectiveTransform( obj_corners, scene_corners, H );

        //-- Draw lines between the corners (the mapped object in the scene - image_2 )

        //-- Show detected matches
        imshow( "Good Matches & Object detection", img_matches );
        waitKey(0);
        return 0;
    }
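
The `1.5 * min_dist` cut-off in the code above is relative to the best match found, so it will generally keep at least the closest match for every model, whether or not that match is meaningful. A commonly used alternative is the nearest-neighbour ratio test: keep a match only if its best distance is clearly smaller than its second-best distance. The sketch below shows just the filtering logic on plain numbers, so it runs without OpenCV. `NeighbourPair` and `ratioTest` are hypothetical names introduced for illustration, and the threshold is an assumption, not a value tuned on these images.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the two nearest scene descriptors
// found for one object descriptor.
struct NeighbourPair {
    int queryIdx;   // index of the object keypoint
    float best;     // distance to the nearest scene descriptor
    float second;   // distance to the second nearest scene descriptor
};

// Keep a match only when the best distance is below maxRatio times
// the second-best distance. Returns the query indices that survive.
std::vector<int> ratioTest(const std::vector<NeighbourPair>& pairs, float maxRatio) {
    assert(maxRatio > 0.0f && maxRatio < 1.0f);
    std::vector<int> kept;
    for (std::size_t i = 0; i < pairs.size(); ++i) {
        if (pairs[i].best < maxRatio * pairs[i].second) {
            kept.push_back(pairs[i].queryIdx);
        }
    }
    return kept;
}
```

With OpenCV, the two distances per keypoint would come from `matcher.knnMatch( descriptors_object, descriptors_scene, knn_matches, 2 )`. A ratio around 0.7 is a frequently quoted starting point, but it would need checking against the five models.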

It would help us to see your actual code for matching the keypoints.
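
One possible way to tell the five candidate positions apart, offered as a sketch under assumptions rather than a tested solution, is to run the same pipeline against each model and compare how many matches RANSAC keeps as inliers, rejecting every model that falls below a minimum count. The code below shows only the selection step on plain data, so it runs without OpenCV. `PoseScore`, `countInliers` and `pickBestPose` are hypothetical names, and the minimum inlier count is an assumed parameter that would have to be tuned.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical summary of one model-versus-scene comparison.
struct PoseScore {
    int modelIndex;        // which stored model position was tested
    std::size_t matches;   // point pairs passed to the homography estimation
    std::size_t inliers;   // pairs that RANSAC kept as geometrically consistent
};

// Count the non-zero entries of a RANSAC inlier mask.
std::size_t countInliers(const std::vector<unsigned char>& mask) {
    std::size_t n = 0;
    for (std::size_t i = 0; i < mask.size(); ++i) {
        if (mask[i] != 0) ++n;
    }
    return n;
}

// Return the modelIndex with the most inliers, or -1 when no model
// reaches minInliers (a homography needs at least 4 point pairs).
int pickBestPose(const std::vector<PoseScore>& scores, std::size_t minInliers) {
    assert(minInliers >= 4);
    int best = -1;
    std::size_t bestInliers = 0;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        if (scores[i].inliers >= minInliers && scores[i].inliers > bestInliers) {
            best = scores[i].modelIndex;
            bestInliers = scores[i].inliers;
        }
    }
    return best;
}
```

In OpenCV the mask would come from the optional fifth argument of `findHomography`, for example `findHomography( obj, scene, CV_RANSAC, 3, mask )` with `mask` declared as `std::vector<uchar>`. A result of -1 would mean that none of the models is considered present in the scene.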