
| 1 | initial version |

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv;

using namespace std;

int main(int, char**)

{

Mat src,edges;

src = imread("15016889798859437.jpg");

cvtColor(src, edges, COLOR_BGR2GRAY);

GaussianBlur(edges, edges, Size(5, 5), 1.5, 1.5);

erode(edges, edges, Mat());// these lines may need to be optimized

dilate(edges, edges, Mat());

dilate(edges, edges, Mat());

erode(edges, edges, Mat());

Canny(edges, edges, 50, 150, 3); // canny parameters may need to be optimized

imshow("edges", edges);

vector<Point> selected;

vector<vector<Point> > contours;

findContours(edges, contours, RETR_LIST, CHAIN_APPROX_SIMPLE);

for (size_t i = 0; i < contours.size(); i++)

{

Rect minRect = boundingRect(contours[i]);

if (minRect.width > 150 && minRect.height > 150) // this line may also need to be optimized

{

selected.insert(selected.end(), contours[i].begin(), contours[i].end());

}

}

Rect minRect = boundingRect(selected);

convexHull(selected, selected);

polylines(src, selected, true, Scalar(0, 0, 255), 2);

resize(src, src, Size(), 0.5, 0.5); // you can remove this line to keep the image original size

imshow("result", src);

waitKey(0);

return 0;

}
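The core of this initial version does three things:

- It keeps only contours whose bounding box is larger than 150 px in both directions.
- It pools their points into `selected`.
- It takes the convex hull of that pool.

The sketch below reproduces the idea without OpenCV so it can be tried in isolation. `Pt`, `poolLargeContours` and `convexHullSketch` are hypothetical names. The hull uses Andrew's monotone chain as a stand-in for `cv::convexHull`, whose internal algorithm may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-in for cv::Point so the sketch builds without OpenCV.
struct Pt {
    long long x, y;
    bool operator<(const Pt& o) const { return x < o.x || (x == o.x && y < o.y); }
    bool operator==(const Pt& o) const { return x == o.x && y == o.y; }
};

// Keeps contours whose bounding box is wider AND taller than minSide and pools
// their points (the answer's loop over contours). For integer points,
// cv::boundingRect reports width = xmax - xmin + 1, mirrored here.
std::vector<Pt> poolLargeContours(const std::vector<std::vector<Pt>>& contours, long long minSide) {
    std::vector<Pt> pooled;
    for (const std::vector<Pt>& c : contours) {
        if (c.empty()) continue;
        long long xmin = c[0].x, xmax = c[0].x, ymin = c[0].y, ymax = c[0].y;
        for (const Pt& p : c) {
            xmin = std::min(xmin, p.x); xmax = std::max(xmax, p.x);
            ymin = std::min(ymin, p.y); ymax = std::max(ymax, p.y);
        }
        if (xmax - xmin + 1 > minSide && ymax - ymin + 1 > minSide)
            pooled.insert(pooled.end(), c.begin(), c.end());
    }
    return pooled;
}

// z-component of (b - a) x (c - a); its sign gives the turn direction.
static long long cross(const Pt& a, const Pt& b, const Pt& c) {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// Andrew's monotone chain convex hull (stand-in for cv::convexHull).
// Returns the hull vertices without collinear points.
std::vector<Pt> convexHullSketch(std::vector<Pt> pts) {
    std::sort(pts.begin(), pts.end());
    pts.erase(std::unique(pts.begin(), pts.end()), pts.end());
    if (pts.size() < 3) return pts;
    std::vector<Pt> hull(2 * pts.size());
    size_t k = 0;
    for (size_t i = 0; i < pts.size(); ++i) {  // lower hull
        while (k >= 2 && cross(hull[k - 2], hull[k - 1], pts[i]) <= 0) --k;
        hull[k++] = pts[i];
    }
    for (size_t i = pts.size() - 1, t = k + 1; i > 0; --i) {  // upper hull
        while (k >= t && cross(hull[k - 2], hull[k - 1], pts[i - 1]) <= 0) --k;
        hull[k++] = pts[i - 1];
    }
    hull.resize(k - 1);  // the last point repeats the first one
    return hull;
}
```

Interior points (such as edges detected inside the document) disappear in the hull, which is why pooling several contours before `convexHull` still yields a single outline.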

| 2 | No.2 Revision |

EDIT: improved code. I like it.

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv;

using namespace std;

static std::vector<cv::Point> extremePoints(std::vector<cv::Point>pts)

{

int xmin = 0, ymin = 0, xmax = -1, ymax = -1, i;

Point ptxmin, ptymin, ptxmax, ptymax;

Point pt = pts[0];

ptxmin = ptymin = ptxmax = ptymax = pt;

xmin = xmax = pt.x;

ymin = ymax = pt.y;

for (size_t i = 1; i < pts.size(); i++)

{

pt = pts[i];

if (xmin > pt.x)

{

xmin = pt.x;

ptxmin = pt;

}

if (xmax < pt.x)

{

xmax = pt.x;

ptxmax = pt;

}

if (ymin > pt.y)

{

ymin = pt.y;

ptymin = pt;

}

if (ymax < pt.y)

{

ymax = pt.y;

ptymax = pt;

}

}

std::vector<cv::Point> res;

res.push_back(ptxmin);

res.push_back(ptxmax);

res.push_back(ptymin);

res.push_back(ptymax);

return res;

}

void sortCorners(std::vector<cv::Point2f>& corners)

{

std::vector<cv::Point2f> top, bot;

cv::Point2f center;

// Get mass center

for (int i = 0; i < corners.size(); i++)

center += corners[i];

center *= (1. / corners.size());

for (int i = 0; i < corners.size(); i++)

{

if (corners[i].y < center.y)

top.push_back(corners[i]);

else

bot.push_back(corners[i]);

}

corners.clear();

if (top.size() == 2 && bot.size() == 2) {

cv::Point2f tl = top[0].x > top[1].x ? top[1] : top[0];

cv::Point2f tr = top[0].x > top[1].x ? top[0] : top[1];

cv::Point2f bl = bot[0].x > bot[1].x ? bot[1] : bot[0];

cv::Point2f br = bot[0].x > bot[1].x ? bot[0] : bot[1];

corners.push_back(tl);

corners.push_back(tr);

corners.push_back(br);

corners.push_back(bl);

}

}

int main(int, char**)

{

Mat src,edges;

src = imread("15016889798859437.jpg");

cvtColor(src, edges, COLOR_BGR2GRAY);

GaussianBlur(edges, edges, Size(5, 5), 1.5, 1.5);

erode(edges, edges, Mat());// these lines may need to be optimized

dilate(edges, edges, Mat());

dilate(edges, edges, Mat());

erode(edges, edges, Mat());

Canny(edges, edges, 50, 150, 3); // canny parameters may need to be optimized

imshow("edges", edges);

vector<Point> selected;

vector<vector<Point> > contours;

findContours(edges, contours, RETR_LIST, CHAIN_APPROX_SIMPLE);

for (size_t i = 0; i < contours.size(); i++)

{

Rect minRect = boundingRect(contours[i]);

if (minRect.width > 150 && minRect.height > 150) // this line may also need to be optimized

{

selected.insert(selected.end(), contours[i].begin(), contours[i].end());

}

}

if (selected.empty()) { cerr << "no large contours found" << endl; return 1; }

convexHull(selected, selected);

RotatedRect minRect = minAreaRect(selected);

std::vector<cv::Point> corner_points = extremePoints(selected);

std::vector<cv::Point2f> corners;

corners.push_back(corner_points[0]);

corners.push_back(corner_points[1]);

corners.push_back(corner_points[2]);

corners.push_back(corner_points[3]);

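sortCorners(corners);

if (corners.size() != 4) { cerr << "could not order the four corners" << endl; return 1; } // sortCorners() clears the vector when the split fails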

cv::Mat quad = cv::Mat::zeros(norm(corners[1] - corners[2]), norm(corners[2] - corners[3]), CV_8UC3);

std::vector<cv::Point2f> quad_pts;

quad_pts.push_back(cv::Point2f(0, 0));

quad_pts.push_back(cv::Point2f(quad.cols, 0));

quad_pts.push_back(cv::Point2f(quad.cols, quad.rows));

quad_pts.push_back(cv::Point2f(0, quad.rows));

cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts);

cv::warpPerspective(src, quad, transmtx, quad.size());

resize(quad, quad, Size(), 0.25, 0.25); // you can remove this line to keep the image original size

imshow("quad", quad);

polylines(src, selected, true, Scalar(0, 0, 255), 2);

resize(src, src, Size(), 0.5, 0.5); // you can remove this line to keep the image original size

imshow("result", src);

waitKey(0);

return 0;

}
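`extremePoints()` returns the points with minimum x, maximum x, minimum y and maximum y. That works well when the document is rotated by roughly 45°. When its edges are nearly axis aligned, two of these extremes can land on the same corner, so the four returned points no longer describe a quadrilateral. This may be one reason the later revisions replace the function.

Below is a minimal stand-alone sketch. `Pt` and `extremePointsSketch` are hypothetical names. Ties keep the first point, as with the answer's strict comparisons.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for cv::Point.
struct Pt { int x, y; };

// Same idea as the answer's extremePoints(): returns {min x, max x, min y, max y}.
// Strict comparisons keep the first point on ties. pts must not be empty.
std::vector<Pt> extremePointsSketch(const std::vector<Pt>& pts) {
    Pt xmin = pts[0], xmax = pts[0], ymin = pts[0], ymax = pts[0];
    for (const Pt& p : pts) {
        if (p.x < xmin.x) xmin = p;
        if (p.x > xmax.x) xmax = p;
        if (p.y < ymin.y) ymin = p;
        if (p.y > ymax.y) ymax = p;
    }
    return {xmin, xmax, ymin, ymax};
}
```

For a diamond the four results are the four vertices. For an axis-aligned rectangle, the min-x and min-y results are the same top-left point, leaving only three distinct points.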

---------------->

---------------->

| 3 | No.3 Revision |

EDIT: improved code. I get the result image below.

---------------->

---------------->

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv;

using namespace std;

static std::vector<cv::Point> extremePoints(std::vector<cv::Point>pts)

{

int xmin = 0, ymin = 0, xmax = -1, ymax = -1, i;

Point ptxmin, ptymin, ptxmax, ptymax;

Point pt = pts[0];

ptxmin = ptymin = ptxmax = ptymax = pt;

xmin = xmax = pt.x;

ymin = ymax = pt.y;

for (size_t i = 1; i < pts.size(); i++)

{

pt = pts[i];

if (xmin > pt.x)

{

xmin = pt.x;

ptxmin = pt;

}

if (xmax < pt.x)

{

xmax = pt.x;

ptxmax = pt;

}

if (ymin > pt.y)

{

ymin = pt.y;

ptymin = pt;

}

if (ymax < pt.y)

{

ymax = pt.y;

ptymax = pt;

}

}

std::vector<cv::Point> res;

res.push_back(ptxmin);

res.push_back(ptxmax);

res.push_back(ptymin);

res.push_back(ptymax);

return res;

}

void sortCorners(std::vector<cv::Point2f>& corners)

{

std::vector<cv::Point2f> top, bot;

cv::Point2f center;

// Get mass center

for (int i = 0; i < corners.size(); i++)

center += corners[i];

center *= (1. / corners.size());

for (int i = 0; i < corners.size(); i++)

{

if (corners[i].y < center.y)

top.push_back(corners[i]);

else

bot.push_back(corners[i]);

}

corners.clear();

if (top.size() == 2 && bot.size() == 2) {

cv::Point2f tl = top[0].x > top[1].x ? top[1] : top[0];

cv::Point2f tr = top[0].x > top[1].x ? top[0] : top[1];

cv::Point2f bl = bot[0].x > bot[1].x ? bot[1] : bot[0];

cv::Point2f br = bot[0].x > bot[1].x ? bot[0] : bot[1];

corners.push_back(tl);

corners.push_back(tr);

corners.push_back(br);

corners.push_back(bl);

}

}

int main(int, char**)

{

Mat src, edges;

src = imread("15016889798859437.jpg");

cvtColor(src, edges, COLOR_BGR2GRAY);

GaussianBlur(edges, edges, Size(5, 5), 1.5, 1.5);

erode(edges, edges, Mat());// these lines may need to be optimized

dilate(edges, edges, Mat());

dilate(edges, edges, Mat());

erode(edges, edges, Mat());

Canny(edges, edges, 50, 150, 3); // canny parameters may need to be optimized

imshow("edges", edges);

vector<Point> selected;

vector<vector<Point> > contours;

findContours(edges, contours, RETR_LIST, CHAIN_APPROX_SIMPLE);

for (size_t i = 0; i < contours.size(); i++)

{

Rect minRect = boundingRect(contours[i]);

if (minRect.width > 150 && minRect.height > 150) // this line may also need to be optimized

{

selected.insert(selected.end(), contours[i].begin(), contours[i].end());

}

}

if (selected.empty()) { cerr << "no large contours found" << endl; return 1; }

convexHull(selected, selected);

RotatedRect minRect = minAreaRect(selected);

std::vector<cv::Point> corner_points = extremePoints(selected);

std::vector<cv::Point2f> corners;

corners.push_back(corner_points[0]);

corners.push_back(corner_points[1]);

corners.push_back(corner_points[2]);

corners.push_back(corner_points[3]);

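sortCorners(corners);

if (corners.size() != 4) { cerr << "could not order the four corners" << endl; return 1; } // sortCorners() clears the vector when the split fails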

cv::Mat quad = cv::Mat::zeros(norm(corners[1] - corners[2]), norm(corners[2] - corners[3]), CV_8UC3);

std::vector<cv::Point2f> quad_pts;

quad_pts.push_back(cv::Point2f(0, 0));

quad_pts.push_back(cv::Point2f(quad.cols, 0));

quad_pts.push_back(cv::Point2f(quad.cols, quad.rows));

quad_pts.push_back(cv::Point2f(0, quad.rows));

cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts);

cv::warpPerspective(src, quad, transmtx, quad.size());

resize(quad, quad, Size(), 0.25, 0.25); // you can remove this line to keep the image original size

imshow("quad", quad);

polylines(src, selected, true, Scalar(0, 0, 255), 2);

resize(src, src, Size(), 0.5, 0.5); // you can remove this line to keep the image original size

imshow("result", src);

waitKey(0);

return 0;

}
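`sortCorners()` splits the points at their centroid and expects exactly two of them above it. Take a square rotated by exactly 45°, e.g. (5,0), (10,5), (5,10), (0,5). Only one point has y below the centroid's y = 5, so the function clears the vector and the subsequent `corners[1]` access is out of range.

A commonly used alternative, shown here as a sketch and not as the answer's method, orders the corners by x + y and y - x. It assumes image coordinates (y grows downward) and a quad that is not rotated close to 45°, where the sums tie as well. `Pt2f` and `orderCorners` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

// Hypothetical stand-in for cv::Point2f.
struct Pt2f { float x, y; };

// Returns the corners as top-left, top-right, bottom-right, bottom-left
// (the order sortCorners() produces). Top-left has the smallest x + y,
// bottom-right the largest; top-right has the smallest y - x, bottom-left the largest.
std::array<Pt2f, 4> orderCorners(const std::array<Pt2f, 4>& c) {
    auto bySum = [](const Pt2f& a, const Pt2f& b) { return a.x + a.y < b.x + b.y; };
    auto byDiff = [](const Pt2f& a, const Pt2f& b) { return a.y - a.x < b.y - b.x; };
    Pt2f tl = *std::min_element(c.begin(), c.end(), bySum);
    Pt2f br = *std::max_element(c.begin(), c.end(), bySum);
    Pt2f tr = *std::min_element(c.begin(), c.end(), byDiff);
    Pt2f bl = *std::max_element(c.begin(), c.end(), byDiff);
    return {tl, tr, br, bl};
}
```

Unlike the centroid split, this never returns fewer than four points, although two outputs can coincide for degenerate inputs.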

---------------->

---------------->

| 4 | No.4 Revision |

EDIT: improved code (I used the references below).

http://web.archive.org/web/20150317011143/http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/

http://answers.opencv.org/question/64433

I get these result images:

---------------->

---------------->

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv;

using namespace std;

static std::vector<cv::Point> extremePoints(std::vector<cv::Point>pts)

{

int xmin = 0, ymin = 0, xmax = -1, ymax = -1, i;

Point ptxmin, ptymin, ptxmax, ptymax;

Point pt = pts[0];

ptxmin = ptymin = ptxmax = ptymax = pt;

xmin = xmax = pt.x;

ymin = ymax = pt.y;

for (size_t i = 1; i < pts.size(); i++)

{

pt = pts[i];

if (xmin > pt.x)

{

xmin = pt.x;

ptxmin = pt;

}

if (xmax < pt.x)

{

xmax = pt.x;

ptxmax = pt;

}

if (ymin > pt.y)

{

ymin = pt.y;

ptymin = pt;

}

if (ymax < pt.y)

{

ymax = pt.y;

ptymax = pt;

}

}

std::vector<cv::Point> res;

res.push_back(ptxmin);

res.push_back(ptxmax);

res.push_back(ptymin);

res.push_back(ptymax);

return res;

}

void sortCorners(std::vector<cv::Point2f>& corners)

{

std::vector<cv::Point2f> top, bot;

cv::Point2f center;

// Get mass center

for (int i = 0; i < corners.size(); i++)

center += corners[i];

center *= (1. / corners.size());

for (int i = 0; i < corners.size(); i++)

{

if (corners[i].y < center.y)

top.push_back(corners[i]);

else

bot.push_back(corners[i]);

}

corners.clear();

if (top.size() == 2 && bot.size() == 2) {

cv::Point2f tl = top[0].x > top[1].x ? top[1] : top[0];

cv::Point2f tr = top[0].x > top[1].x ? top[0] : top[1];

cv::Point2f bl = bot[0].x > bot[1].x ? bot[1] : bot[0];

cv::Point2f br = bot[0].x > bot[1].x ? bot[0] : bot[1];

corners.push_back(tl);

corners.push_back(tr);

corners.push_back(br);

corners.push_back(bl);

}

}

int main(int, char**)

{

Mat src, edges;

src = imread("15016889798859437.jpg");

cvtColor(src, edges, COLOR_BGR2GRAY);

GaussianBlur(edges, edges, Size(5, 5), 1.5, 1.5);

erode(edges, edges, Mat());// these lines may need to be optimized

dilate(edges, edges, Mat());

dilate(edges, edges, Mat());

erode(edges, edges, Mat());

Canny(edges, edges, 50, 150, 3); // canny parameters may need to be optimized

imshow("edges", edges);

vector<Point> selected;

vector<vector<Point> > contours;

findContours(edges, contours, RETR_LIST, CHAIN_APPROX_SIMPLE);

for (size_t i = 0; i < contours.size(); i++)

{

Rect minRect = boundingRect(contours[i]);

if (minRect.width > 150 && minRect.height > 150) // this line may also need to be optimized

{

selected.insert(selected.end(), contours[i].begin(), contours[i].end());

}

}

if (selected.empty()) { cerr << "no large contours found" << endl; return 1; }

convexHull(selected, selected);

RotatedRect minRect = minAreaRect(selected);

std::vector<cv::Point> corner_points = extremePoints(selected);

std::vector<cv::Point2f> corners;

corners.push_back(corner_points[0]);

corners.push_back(corner_points[1]);

corners.push_back(corner_points[2]);

corners.push_back(corner_points[3]);

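sortCorners(corners);

if (corners.size() != 4) { cerr << "could not order the four corners" << endl; return 1; } // sortCorners() clears the vector when the split fails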

cv::Mat quad = cv::Mat::zeros(norm(corners[1] - corners[2]), norm(corners[2] - corners[3]), CV_8UC3);

std::vector<cv::Point2f> quad_pts;

quad_pts.push_back(cv::Point2f(0, 0));

quad_pts.push_back(cv::Point2f(quad.cols, 0));

quad_pts.push_back(cv::Point2f(quad.cols, quad.rows));

quad_pts.push_back(cv::Point2f(0, quad.rows));

cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts);

cv::warpPerspective(src, quad, transmtx, quad.size());

resize(quad, quad, Size(), 0.25, 0.25); // you can remove this line to keep the image original size

imshow("quad", quad);

polylines(src, selected, true, Scalar(0, 0, 255), 2);

resize(src, src, Size(), 0.5, 0.5); // you can remove this line to keep the image original size

imshow("result", src);

waitKey(0);

return 0;

}
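`cv::getPerspectiveTransform` finds the 3×3 matrix H (with H33 = 1) that maps the four sorted corners onto the corners of the output rectangle. In the answer the output size comes from the corners themselves: rows = |tr - br| (right edge) and cols = |br - bl| (bottom edge).

The sketch below solves the same 8-unknown linear system by Gauss-Jordan elimination, to show what the call computes. `P2`, `H3`, `perspectiveTransformSketch` and `applyH` are hypothetical names. OpenCV's own implementation may use a different solver.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

struct P2 { double x, y; };
using H3 = std::array<double, 9>;  // row-major 3x3 matrix, H[8] == 1

// Each pair (x, y) -> (u, v) gives two equations:
//   h0 x + h1 y + h2 - h6 x u - h7 y u = u
//   h3 x + h4 y + h5 - h6 x v - h7 y v = v
// Returns false if the system is singular (e.g. three collinear points).
bool perspectiveTransformSketch(const std::array<P2, 4>& src, const std::array<P2, 4>& dst, H3& H) {
    double A[8][9];  // augmented matrix [M | rhs]
    for (int i = 0; i < 4; ++i) {
        double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        double r0[9] = {x, y, 1, 0, 0, 0, -x * u, -y * u, u};
        double r1[9] = {0, 0, 0, x, y, 1, -x * v, -y * v, v};
        for (int j = 0; j < 9; ++j) { A[2 * i][j] = r0[j]; A[2 * i + 1][j] = r1[j]; }
    }
    for (int col = 0; col < 8; ++col) {
        int piv = col;  // partial pivoting: pick the largest entry in this column
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[piv][col])) piv = r;
        if (std::fabs(A[piv][col]) < 1e-12) return false;
        if (piv != col)
            for (int j = 0; j < 9; ++j) std::swap(A[piv][j], A[col][j]);
        for (int r = 0; r < 8; ++r) {  // eliminate this column from every other row
            if (r == col) continue;
            double f = A[r][col] / A[col][col];
            for (int j = col; j < 9; ++j) A[r][j] -= f * A[col][j];
        }
    }
    for (int i = 0; i < 8; ++i) H[i] = A[i][8] / A[i][i];
    H[8] = 1.0;
    return true;
}

// Maps a source point through H (projective division by the third row).
P2 applyH(const H3& H, P2 p) {
    double w = H[6] * p.x + H[7] * p.y + H[8];
    return {(H[0] * p.x + H[1] * p.y + H[2]) / w, (H[3] * p.x + H[4] * p.y + H[5]) / w};
}
```

Passing the corners in a different order than the rectangle corners (for example tl, tr, bl, br against tl, tr, br, bl) still yields a valid H, but the output image comes out twisted. This is why `sortCorners()` matters.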

| 5 | No.5 Revision |

EDIT2: improved code (I used the references below); the code is still buggy.

http://web.archive.org/web/20150317011143/http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/

http://answers.opencv.org/question/64433

I get the result images:

---------------->

---------------->

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv;

using namespace std;

static bool lineIntersection(const cv::Point2f &a1, const cv::Point2f &b1, const cv::Point2f &a2,

const cv::Point2f &b2, cv::Point2f &intersection) {

double A1 = b1.y - a1.y;

double B1 = a1.x - b1.x;

double C1 = (a1.x * A1) + (a1.y * B1);

double A2 = b2.y - a2.y;

double B2 = a2.x - b2.x;

double C2 = (a2.x * A2) + (a2.y * B2);

double det = (A1 * B2) - (A2 * B1);

if (std::abs(det) > 1e-9) { // almostEqual() is not defined in this revision, so reject (nearly) parallel lines with a plain threshold

intersection.x = static_cast<float>(((C1 * B2) - (C2 * B1)) / (det));

intersection.y = static_cast<float>(((C2 * A1) - (C1 * A2)) / (det));

return true;

}

return false;

}

struct vector_sorter

{

bool operator ()(const std::vector<cv::Point>& a, const std::vector<cv::Point>& b) const

{

double dist_a = norm(a[0] - a[1]);

double dist_b = norm(b[0] - b[1]);

return dist_a > dist_b; // longest segment first

}

};

void sortCorners(std::vector<cv::Point2f>& corners)

{

std::vector<cv::Point2f> top, bot;

cv::Point2f center;

// Get mass center

for (int i = 0; i < corners.size(); i++)

center += corners[i];

center *= (1. / corners.size());

for (int i = 0; i < corners.size(); i++)

{

if (corners[i].y < center.y)

top.push_back(corners[i]);

else

bot.push_back(corners[i]);

}

corners.clear();

if (top.size() == 2 && bot.size() == 2) {

cv::Point2f tl = top[0].x > top[1].x ? top[1] : top[0];

cv::Point2f tr = top[0].x > top[1].x ? top[0] : top[1];

cv::Point2f bl = bot[0].x > bot[1].x ? bot[1] : bot[0];

cv::Point2f br = bot[0].x > bot[1].x ? bot[0] : bot[1];

corners.push_back(tl);

corners.push_back(tr);

corners.push_back(br);

corners.push_back(bl);

}

}

static std::vector<cv::Point2f> extremePoints(std::vector<cv::Point>pts)

{

convexHull(pts, pts);

vector<vector<Point> > lines;

vector<Point> line_pts;

line_pts.push_back(pts[0]);

line_pts.push_back(pts[pts.size()-1]);

lines.push_back(line_pts);

line_pts.clear();

for (size_t i = 1; i < pts.size(); i++)

{

line_pts.push_back(pts[i]);

line_pts.push_back(pts[i-1]);

lines.push_back(line_pts);

line_pts.clear();

}

sort(lines.begin(), lines.end(), vector_sorter());

Point2f intersection, vtx[4];

std::vector<cv::Point2f> corners;

for (int i = 0; i < 4; i++)

{

for (int j = i + 1; j < 4; j++)

{

if (lineIntersection(lines[i][0], lines[i][1], lines[j][0], lines[j][1], intersection) && intersection.x >= 0 && intersection.y >= 0)

corners.push_back(intersection);

}

}

sortCorners(corners);

return corners;

}

int main(int argc, char** argv)

{

Mat src, edges;

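if (argc < 2) { cerr << "usage: " << argv[0] << " <image file>" << endl; return 1; }

src = imread(argv[1]);

if (src.empty()) { cerr << "could not read " << argv[1] << endl; return 1; }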

cvtColor(src, edges, COLOR_BGR2GRAY);

GaussianBlur(edges, edges, Size(5, 5), 1.5, 1.5);

erode(edges, edges, Mat());// these lines may need to be optimized

dilate(edges, edges, Mat());

dilate(edges, edges, Mat());

erode(edges, edges, Mat());

Canny(edges, edges, 50, 150, 3); // canny parameters may need to be optimized

imshow("edges", edges);

vector<Point> selected;

vector<vector<Point> > contours;

findContours(edges, contours, RETR_LIST, CHAIN_APPROX_SIMPLE);

for (size_t i = 0; i < contours.size(); i++)

{

Rect minRect = boundingRect(contours[i]);

if (minRect.width > 150 && minRect.height > 150) // this line may also need to be optimized

{

selected.insert(selected.end(), contours[i].begin(), contours[i].end());

}

}

if (selected.empty()) { cerr << "no large contours found" << endl; return 1; }

convexHull(selected, selected);

RotatedRect minRect = minAreaRect(selected);

std::vector<cv::Point2f> corners = extremePoints(selected); // already sorted by sortCorners() inside extremePoints()

if (corners.size() != 4) { cerr << "could not find four corners" << endl; return 1; }

Rect r = boundingRect(corners);

cv::Mat quad = cv::Mat::zeros(norm(corners[1] - corners[2]), norm(corners[2] - corners[3]), CV_8UC3);

std::vector<cv::Point2f> quad_pts;

quad_pts.push_back(cv::Point2f(0, 0));

quad_pts.push_back(cv::Point2f(quad.cols, 0));

quad_pts.push_back(cv::Point2f(quad.cols, quad.rows));

quad_pts.push_back(cv::Point2f(0, quad.rows));

cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts);

cv::warpPerspective(src, quad, transmtx, quad.size());

resize(quad, quad, Size(), 0.25, 0.25); // you can remove this line to keep the image original size

imshow("quad", quad);

polylines(src, selected, true, Scalar(0, 0, 255), 2);

resize(src, src, Size(), 0.5, 0.5); // you can remove this line to keep the image original size

imshow("result", src);

waitKey(0);

return 0;

}
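This revision's `extremePoints()` treats every hull edge as a line, sorts the edges by length, and intersects the four longest ones pairwise. Two problems follow from how it is written:

- The parallel check inside `lineIntersection()` is disabled (`if (true)`), so parallel opposite sides divide by a zero determinant and can produce infinite coordinates that pass the `>= 0` test.
- Nearly parallel sides can intersect far to the right of or below the image.

Below is a sketch of the same idea with a determinant check and an upper bound on the coordinates. The `width` × `height` frame is an assumption (e.g. the image size). `P2`, `intersect` and `cornersFromLongestEdges` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct P2 { double x, y; };

// Same line form as the answer: A*x + B*y = C through a and b; Cramer's rule.
static bool intersect(P2 a1, P2 b1, P2 a2, P2 b2, P2& out) {
    double A1 = b1.y - a1.y, B1 = a1.x - b1.x, C1 = A1 * a1.x + B1 * a1.y;
    double A2 = b2.y - a2.y, B2 = a2.x - b2.x, C2 = A2 * a2.x + B2 * a2.y;
    double det = A1 * B2 - A2 * B1;
    if (std::fabs(det) < 1e-9) return false;  // parallel lines: no corner
    out = {(C1 * B2 - C2 * B1) / det, (C2 * A1 - C1 * A2) / det};
    return true;
}

// hull: convex polygon vertices in order. Intersects the four longest edges
// pairwise and keeps intersections inside [0, width] x [0, height].
std::vector<P2> cornersFromLongestEdges(const std::vector<P2>& hull, double width, double height) {
    struct Edge { P2 a, b; double len; };
    std::vector<Edge> edges;
    for (size_t i = 0; i < hull.size(); ++i) {
        P2 a = hull[i], b = hull[(i + 1) % hull.size()];  // closing edge included
        edges.push_back({a, b, std::hypot(b.x - a.x, b.y - a.y)});
    }
    std::vector<P2> corners;
    if (edges.size() < 4) return corners;
    std::sort(edges.begin(), edges.end(), [](const Edge& e, const Edge& f) { return e.len > f.len; });
    for (int i = 0; i < 4; ++i)
        for (int j = i + 1; j < 4; ++j) {
            P2 p;
            if (intersect(edges[i].a, edges[i].b, edges[j].a, edges[j].b, p) &&
                p.x >= 0 && p.y >= 0 && p.x <= width && p.y <= height)
                corners.push_back(p);
        }
    return corners;
}
```

For a rectangle with clipped corners (an octagon), the four long sides reconstruct the original rectangle corners, and the two parallel pairs are skipped.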

| 6 | No.6 Revision |

EDIT3: I have probably solved the problem with my last change.

------------>

------------>

EDIT2: improved code (I used the reference below); the code is still buggy.

http://web.archive.org/web/20150317011143/http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/

I get the result images:

---------------->

---------------->

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv;

using namespace std;

#define EPSILON 1E-5

//! Return the maximum of the provided numbers

static double maximum(double number1, double number2, double number3) {

return std::max(std::max(number1, number2), number3);

}

//! Check if the two numbers are equal (almost)

/*!

* The expression for determining if two real numbers are equal is:

* if (Abs(x - y) <= EPSILON * Max(1.0f, Abs(x), Abs(y))).

*

* @param number1 First number

* @param number2 Second number

*/

static bool almostEqual(double number1, double number2) {

return (std::abs(number1 - number2) <= (EPSILON * maximum(1.0, std::abs(number1), std::abs(number2))));

}

//! Determine the intersection point of two lines, if this point exists

/*! Two lines intersect if they are not parallel (Parallel lines intersect at

* +/- infinity, but we do not consider this case here).

*

* The lines are specified by a pair of points each. If they intersect, then

* the function returns true, else it returns false.

*

* Lines can be specified in the following form:

* A1x + B1y = C1

* A2x + B2y = C2

*

* If det (= A1*B2 - A2*B1) == 0, then lines are parallel

* else they intersect

*

* If they intersect, then let us denote the intersection point with P(x, y) where:

* x = (C1*B2 - C2*B1) / (det)

* y = (C2*A1 - C1*A2) / (det)

*

* @param a1 First point for determining the first line

* @param b1 Second point for determining the first line

* @param a2 First point for determining the second line

* @param b2 Second point for determining the second line

* @param intersection The intersection point, if this point exists

*/

static bool lineIntersection(const cv::Point2f &a1, const cv::Point2f &b1, const cv::Point2f &a2,

const cv::Point2f &b2, cv::Point2f &intersection) {

double A1 = b1.y - a1.y;

double B1 = a1.x - b1.x;

double C1 = (a1.x * A1) + (a1.y * B1);

double A2 = b2.y - a2.y;

double B2 = a2.x - b2.x;

double C2 = (a2.x * A2) + (a2.y * B2);

double det = (A1 * B2) - (A2 * B1);

if (!almostEqual(det, 0)) {

intersection.x = static_cast<float>(((C1 * B2) - (C2 * B1)) / (det));

intersection.y = static_cast<float>(((C2 * A1) - (C1 * A2)) / (det));

return true;

}

return false;

}

struct vector_sorter

{

bool operator ()(const std::vector<cv::Point>& a, const std::vector<cv::Point> & b)

{

double dist_a = norm(a[0] - a[1]);

double dist_b = norm(b[0] - b[1]);

return dist_a > dist_b;

}

};

void sortCorners(std::vector<cv::Point2f>& corners)

{

std::vector<cv::Point2f> top, bot;

cv::Point2f center;

// Get mass center

for (int i = 0; i < corners.size(); i++)

center += corners[i];

center *= (1. / corners.size());

for (int i = 0; i < corners.size(); i++)

{

if (corners[i].y < center.y)

top.push_back(corners[i]);

else

bot.push_back(corners[i]);

}

corners.clear();

if (top.size() == 2 && bot.size() == 2) {

cv::Point2f tl = top[0].x > top[1].x ? top[1] : top[0];

cv::Point2f tr = top[0].x > top[1].x ? top[0] : top[1];

cv::Point2f bl = bot[0].x > bot[1].x ? bot[1] : bot[0];

cv::Point2f br = bot[0].x > bot[1].x ? bot[0] : bot[1];

corners.push_back(tl);

corners.push_back(tr);

corners.push_back(br);

corners.push_back(bl);

}

}

static std::vector<cv::Point2f> extremePoints(std::vector<cv::Point>pts)

{

convexHull(pts, pts);

vector<vector<Point> > lines;

vector<Point> line_pts;

line_pts.push_back(pts[0]);

line_pts.push_back(pts[pts.size()-1]);

lines.push_back(line_pts);

line_pts.clear();

for (size_t i = 1; i < pts.size(); i++)

{

line_pts.push_back(pts[i]);

line_pts.push_back(pts[i-1]);

lines.push_back(line_pts);

line_pts.clear();

}

sort(lines.begin(), lines.end(), vector_sorter());

Point2f intersection, vtx[4];

std::vector<cv::Point2f> corners;

for (int i = 0; i < 4; i++)

{

for (int j = i + 1; j < 4; j++)

{

if (lineIntersection(lines[i][0], lines[i][1], lines[j][0], lines[j][1], intersection) && intersection.x >= 0 && intersection.y >= 0)

corners.push_back(intersection);

}

}

sortCorners(corners);

return corners;

}

int main(int argc, char** argv)

{

Mat src, edges;

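if (argc < 2) { cerr << "usage: " << argv[0] << " <image file>" << endl; return 1; }

src = imread(argv[1]);

if (src.empty()) { cerr << "could not read " << argv[1] << endl; return 1; }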

cvtColor(src, edges, COLOR_BGR2GRAY);

GaussianBlur(edges, edges, Size(5, 5), 1.5, 1.5);

erode(edges, edges, Mat());// these lines may need to be optimized

dilate(edges, edges, Mat());

dilate(edges, edges, Mat());

erode(edges, edges, Mat());

Canny(edges, edges, 50, 150, 3); // canny parameters may need to be optimized

imshow("edges", edges);

vector<Point> selected_points;

vector<vector<Point> > contours;

findContours(edges, contours, RETR_LIST, CHAIN_APPROX_SIMPLE);

for (size_t i = 0; i < contours.size(); i++)

{

Rect minRect = boundingRect(contours[i]);

if (minRect.width > 150 && minRect.height > 150) // this line may also need to be optimized

{

selected_points.insert(selected_points.end(), contours[i].begin(), contours[i].end());

}

}

if (selected_points.empty()) { cerr << "no large contours found" << endl; return 1; }

vector<Point2f> selected_points_f;

vector<Point2f> corners;

Mat(selected_points).convertTo(selected_points_f, CV_32F);

Mat hull;

convexHull(selected_points_f, hull, true, true);

RotatedRect RRect = minAreaRect(hull);

std::vector<cv::Point2f> RR_corners;

Point2f four_points[4];

RRect.points(four_points);

RR_corners.push_back(four_points[0]);

RR_corners.push_back(four_points[1]);

RR_corners.push_back(four_points[2]);

RR_corners.push_back(four_points[3]);

for (int j = 0; j < 4; j++)

{

Point2f pt = RR_corners[j];

Point2f nearest_pt = hull.at<Point2f>(0, 0); // start from hull point 0 so that every hull point is compared

float dist = norm(pt - nearest_pt);

for (int k = 1; k < hull.rows; k++)

{

Point2f hull_point = hull.at<Point2f>(k, 0);

if (norm(pt - hull_point) < dist)

{

dist = norm(pt - hull_point);

nearest_pt = hull_point;

}

}

corners.push_back(nearest_pt);

}

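sortCorners(corners);

if (corners.size() != 4) { cerr << "could not order the four corners" << endl; return 1; } // sortCorners() clears the vector when the split fails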

Mat(corners).convertTo(selected_points, CV_32S);

Rect r = boundingRect(corners);

cv::Mat quad = cv::Mat::zeros(norm(corners[1] - corners[2]), norm(corners[2] - corners[3]), CV_8UC3);

std::vector<cv::Point2f> quad_pts;

quad_pts.push_back(cv::Point2f(0, 0));

quad_pts.push_back(cv::Point2f(quad.cols, 0));

quad_pts.push_back(cv::Point2f(quad.cols, quad.rows));

quad_pts.push_back(cv::Point2f(0, quad.rows));

cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts);

cv::warpPerspective(src, quad, transmtx, quad.size());

polylines(src, selected_points, true, Scalar(0, 0, 255), 3);

imshow("Source Image", src);

imshow("Result Image", quad);

waitKey(0);

return 0;

}
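`almostEqual(det, 0)` uses a relative tolerance, but because one argument is 0 it simplifies:

- For |det| < 1 the test is |det| <= 1e-5.
- For |det| >= 1 it is |det| <= 1e-5 * |det|, which is always false.

Because the hull points come from integer pixel coordinates, `det` is an integer here, so in practice only exactly parallel lines are rejected.

Below is a self-contained sketch of the helper. `almostEqualSketch` is a hypothetical name. It uses `std::max` with an initializer list in place of the answer's `maximum()`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

const double kEpsilon = 1e-5;  // same value as the answer's EPSILON

// Relative comparison: the tolerance scales with the larger magnitude,
// but never drops below kEpsilon because of the 1.0 in the max.
bool almostEqualSketch(double a, double b) {
    double scale = std::max({1.0, std::fabs(a), std::fabs(b)});
    return std::fabs(a - b) <= kEpsilon * scale;
}
```

The relative form suits large values (1e6 and 1e6 + 1 compare equal). When comparing against zero, though, it behaves like an absolute threshold.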

| 7 | No.7 Revision |

EDIT3: I have probably solved the problem with my last change.

------------>

------------>

EDIT2: improved code (I used the reference below); the code is still buggy.

http://web.archive.org/web/20150317011143/http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/

I get the result images:

---------------->

---------------->

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv;

using namespace std;

#define EPSILON 1E-5

//! Return the maximum of the provided numbers

static double maximum(double number1, double number2, double number3) {

return std::max(std::max(number1, number2), number3);

}

//! Check if the two numbers are equal (almost)

/*!

* The expression for determining if two real numbers are equal is:

* if (Abs(x - y) <= EPSILON * Max(1.0f, Abs(x), Abs(y))).

*

* @param number1 First number

* @param number2 Second number

*/

static bool almostEqual(double number1, double number2) {

return (std::abs(number1 - number2) <= (EPSILON * maximum(1.0, std::abs(number1), std::abs(number2))));

}

//! Determine the intersection point of two lines, if this point exists

/*! Two lines intersect if they are not parallel (Parallel lines intersect at

* +/- infinity, but we do not consider this case here).

*

* The lines are specified by a pair of points each. If they intersect, then

* the function returns true, else it returns false.

*

* Lines can be specified in the following form:

* A1x + B1y = C1

* A2x + B2y = C2

*

* If det (= A1*B2 - A2*B1) == 0, then lines are parallel

* else they intersect

*

* If they intersect, then let us denote the intersection point with P(x, y) where:

* x = (C1*B2 - C2*B1) / (det)

* y = (C2*A1 - C1*A2) / (det)

*

* @param a1 First point for determining the first line

* @param b1 Second point for determining the first line

* @param a2 First point for determining the second line

* @param b2 Second point for determining the second line

* @param intersection The intersection point, if this point exists

*/

static bool lineIntersection(const cv::Point2f &a1, const cv::Point2f &b1, const cv::Point2f &a2,

const cv::Point2f &b2, cv::Point2f &intersection) {

double A1 = b1.y - a1.y;

double B1 = a1.x - b1.x;

double C1 = (a1.x * A1) + (a1.y * B1);

double A2 = b2.y - a2.y;

double B2 = a2.x - b2.x;

double C2 = (a2.x * A2) + (a2.y * B2);

double det = (A1 * B2) - (A2 * B1);

if (!almostEqual(det, 0)) {

intersection.x = static_cast<float>(((C1 * B2) - (C2 * B1)) / (det));

intersection.y = static_cast<float>(((C2 * A1) - (C1 * A2)) / (det));

return true;

}

return false;

}

struct vector_sorter

{

bool operator ()(const std::vector<cv::Point>& a, const std::vector<cv::Point> & b)

{

double dist_a = norm(a[0] - a[1]);

double dist_b = norm(b[0] - b[1]);

return dist_a > dist_b;

}

};

void sortCorners(std::vector<cv::Point2f>& corners)

{

std::vector<cv::Point2f> top, bot;

cv::Point2f center;

// Get mass center

for (int i = 0; i < corners.size(); i++)

center += corners[i];

center *= (1. / corners.size());

for (int i = 0; i < corners.size(); i++)

{

if (corners[i].y < center.y)

top.push_back(corners[i]);

else

bot.push_back(corners[i]);

}

corners.clear();

if (top.size() == 2 && bot.size() == 2) {

cv::Point2f tl = top[0].x > top[1].x ? top[1] : top[0];

cv::Point2f tr = top[0].x > top[1].x ? top[0] : top[1];

cv::Point2f bl = bot[0].x > bot[1].x ? bot[1] : bot[0];

cv::Point2f br = bot[0].x > bot[1].x ? bot[0] : bot[1];

corners.push_back(tl);

corners.push_back(tr);

corners.push_back(br);

corners.push_back(bl);

}

}

int main(int argc, char** argv)

{

Mat src, edges;

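if (argc < 2) { cerr << "usage: " << argv[0] << " <image file>" << endl; return 1; }

src = imread(argv[1]);

if (src.empty()) { cerr << "could not read " << argv[1] << endl; return 1; }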

cvtColor(src, edges, COLOR_BGR2GRAY);

GaussianBlur(edges, edges, Size(5, 5), 1.5, 1.5);

erode(edges, edges, Mat());// these lines may need to be optimized

dilate(edges, edges, Mat());

dilate(edges, edges, Mat());

erode(edges, edges, Mat());

Canny(edges, edges, 50, 150, 3); // canny parameters may need to be optimized

imshow("edges", edges);

vector<Point> selected_points;

vector<vector<Point> > contours;

findContours(edges, contours, RETR_LIST, CHAIN_APPROX_SIMPLE);

for (size_t i = 0; i < contours.size(); i++)

{

Rect minRect = boundingRect(contours[i]);

if ((minRect.width > src.cols / 6) || (minRect.height > src.rows / 6)) // this line may also need to be optimized

{

selected_points.insert(selected_points.end(), contours[i].begin(), contours[i].end());

}

}

if (selected_points.empty()) { cerr << "no large contours found" << endl; return 1; }

vector<Point2f> selected_points_f;

vector<Point2f> corners;

Mat(selected_points).convertTo(selected_points_f, CV_32F);

Mat hull;

convexHull(selected_points_f, hull, true, true);

RotatedRect RRect = minAreaRect(hull);

std::vector<cv::Point2f> RR_corners;

Point2f four_points[4];

RRect.points(four_points);

RR_corners.push_back(four_points[0]);

RR_corners.push_back(four_points[1]);

RR_corners.push_back(four_points[2]);

RR_corners.push_back(four_points[3]);

for (int j = 0; j < 4; j++)

{

Point2f pt = RR_corners[j];

Point2f nearest_pt = hull.at<Point2f>(0, 0); // start from hull point 0 so that every hull point is compared

float dist = norm(pt - nearest_pt);

for (int k = 1; k < hull.rows; k++)

{

Point2f hull_point = hull.at<Point2f>(k, 0);

if (norm(pt - hull_point) < dist)

{

dist = norm(pt - hull_point);

nearest_pt = hull_point;

}

}

corners.push_back(nearest_pt);

}

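sortCorners(corners);

if (corners.size() != 4) { cerr << "could not order the four corners" << endl; return 1; } // sortCorners() clears the vector when the split fails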

Mat(corners).convertTo(selected_points, CV_32S);

Rect r = boundingRect(corners);

cv::Mat quad = cv::Mat::zeros(norm(corners[1] - corners[2]), norm(corners[2] - corners[3]), CV_8UC3);

std::vector<cv::Point2f> quad_pts;

quad_pts.push_back(cv::Point2f(0, 0));

quad_pts.push_back(cv::Point2f(quad.cols, 0));

quad_pts.push_back(cv::Point2f(quad.cols, quad.rows));

quad_pts.push_back(cv::Point2f(0, quad.rows));

cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts);

cv::warpPerspective(src, quad, transmtx, quad.size());

polylines(src, selected_points, true, Scalar(0, 0, 255), 3);

imshow("Source Image", src);

imshow("Result Image", quad);

waitKey(0);

return 0;

}
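The final revision takes the four corners of `minAreaRect(hull)` and replaces each one with the nearest hull point. In the posted loop, the search starts from `hull.at<Point2f>(j,0)` and then scans from index 1. As a result, hull point 0 is never compared for j > 0, and the code assumes the hull has at least four points.

Below is a sketch of the snapping step that scans every hull point. `P2` and `snapToHull` are hypothetical names. The reference corners would come from `RotatedRect::points()`.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::Point2f.
struct P2 { float x, y; };

// For each reference corner, returns the hull point with the smallest
// Euclidean distance to it. hull must not be empty.
std::vector<P2> snapToHull(const std::vector<P2>& refCorners, const std::vector<P2>& hull) {
    std::vector<P2> snapped;
    for (const P2& c : refCorners) {
        P2 best = hull.at(0);
        double bestDist = std::numeric_limits<double>::max();
        for (const P2& h : hull) {
            double d = std::hypot(double(h.x) - c.x, double(h.y) - c.y);
            if (d < bestDist) { bestDist = d; best = h; }
        }
        snapped.push_back(best);
    }
    return snapped;
}
```

Snapping to real hull points lets the warp follow the document's actual, possibly non-rectangular, outline. The rotated rectangle only supplies a rough guess for where each corner should be.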

| 8 | No.8 Revision |

EDIT3: I have probably solved the problem with my last change.

------------>

------------>

EDIT2: improved code (I used the reference below); the code is still buggy.

http://web.archive.org/web/20150317011143/http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/

I get the result images:

---------------->

---------------->

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

You can find my trial C++ source code on GitHub.

| 9 | No.9 Revision |

EDIT3: I have probably solved the problem with my last change.

------------>

------------>

EDIT2: improved code (I used the reference below); the code is still buggy.

http://web.archive.org/web/20150317011143/http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/

I get the result images:

---------------->

---------------->

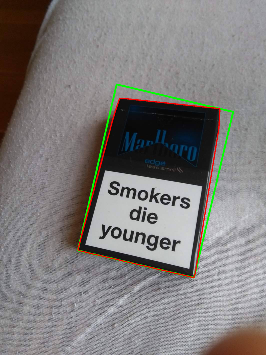

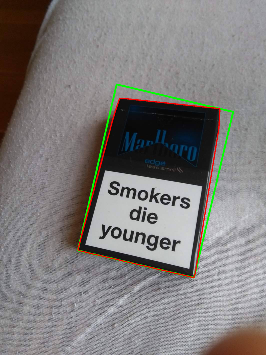

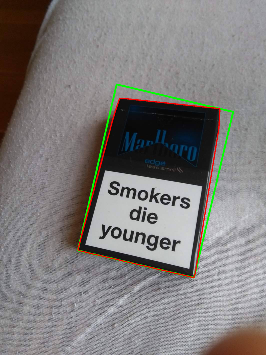

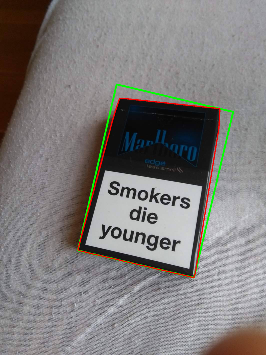

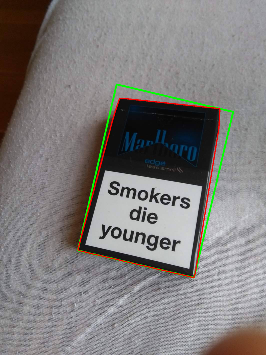

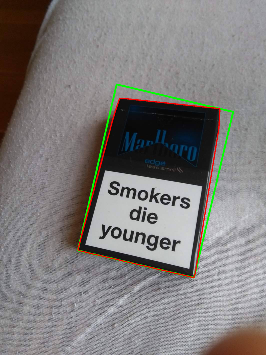

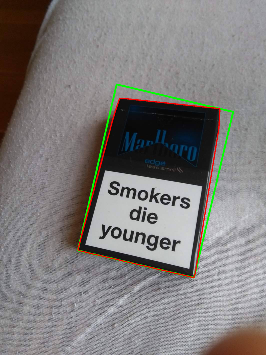

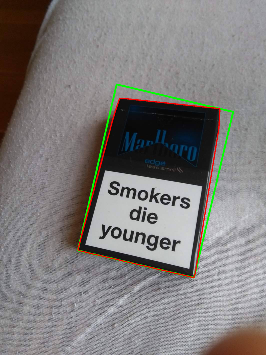

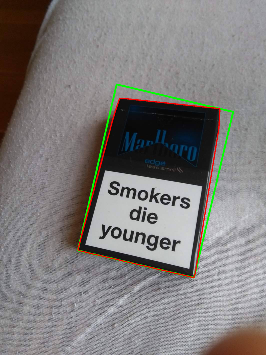

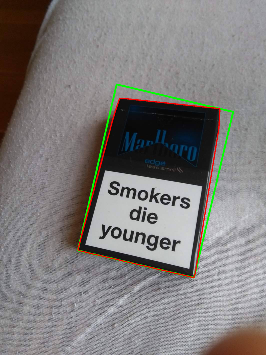

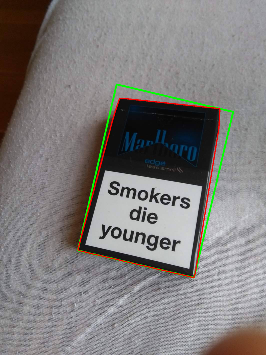

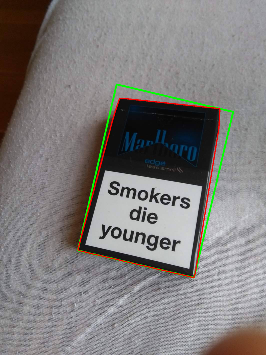

You can find my trial C++ source code on GitHub; I used it to get the result images.

------------>

------------>

---------------->

---------------->

Used references:

http://study.marearts.com/2015/03/image-warping-using-opencv.html

http://web.archive.org/web/20150317011143/http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/

| 10 | No.10 Revision |

You can find my trial C++ source code on GitHub; I used it to get the result images.

------------>

------------>

---------------->

---------------->

Used references:

http://study.marearts.com/2015/03/image-warping-using-opencv.html

http://web.archive.org/web/20150317011143/http://opencv-code.com/tutorials/automatic-perspective-correction-for-quadrilateral-objects/