I am working on stereo calibration with these steps: 1. findChessborad -> cornerSubPix 2. stereoCalibrate to get R T E F 3.undistortPoints and computeCorrespondEpilines 4.stereoRectify to get R1 R2 P1 P2 Q 5.initUndistortRectifyMap to get remap matrix 6 remap

all the steps followed the cpp example given in the opencv package.

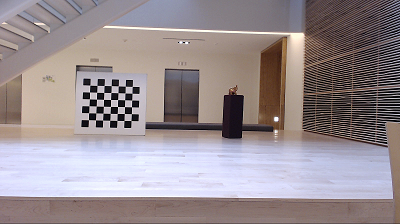

one pair of images I used as below:

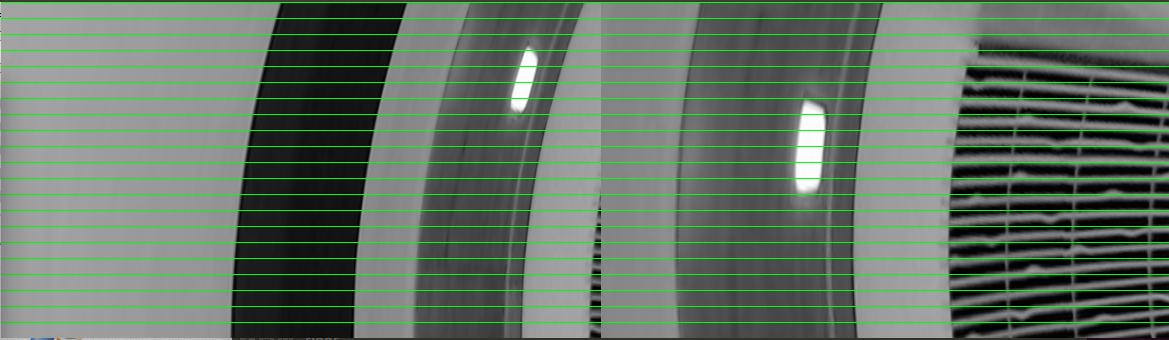

the result I get is:

if I use : findFundamentalMat and stereoRectifyUncalibrated instead of stereorectify to get R1 R2 P1 P2 will get a correct result.

here is my code snapshot:

//FOR STD LIB

include <iostream>

include <string>

include <fstream>

include <iomanip>

include <algorithm>

include <iterator>

include <stdio.h>

include <stdlib.h>

include <ctype.h>

include <sstream>

//FOR OPENCVLIB

include "opencv2/opencv.hpp"

include "opencv2/calib3d.hpp"

include "opencv2/core/core.hpp"

include "opencv2/highgui/highgui.hpp"

include "opencv2/imgproc/imgproc.hpp"

include "opencv/cvaux.hpp"

//using namespace std; //using namespace cv;

define infinite 1e400

define numImagePairs 10

const std::string PathPrefix = "/home/roby/Developer/projects/Calibration_rectify/res/"; typedef std::vector<std::string> ImageNameList; typedef std::vector<cv::point2f> PointList; typedef std::vector<pointlist> LEFT_PointVec; typedef std::vector<pointlist> RIGHT_PointVec; typedef std::vector<std::vector<cv::point3f> > Point3DList; const cv::Size CHECKERBOARD_SIZE = cv::Size(7,6);

define BYTE unsigned char

int main(int argc, const char * argv[]) { ImageNameList rightCameraList; ImageNameList leftCameraList;

ImageNameList goodrightCameraList;

ImageNameList goodleftCameraList;

LEFT_PointVec leftCameraPTvec;

RIGHT_PointVec rightCameraPTvec;

Point3DList objectPoints;

int numGoodImgPairs = numImagePairs;

const float squareSize = 1.f; // Set this to your actual square size

cv::Size imageSize;

//load image name

std::ostringstream ss;

for (int i = 1; i <= numImagePairs; i++) {

ss << i;

rightCameraList.push_back(PathPrefix + "right/right"+ss.str() +".png");

leftCameraList.push_back(PathPrefix + "left/left"+ss.str() +".png");

ss.str(""); //clear stream content

}

for (int i = 0; i < numImagePairs; i++) {

cv::Mat rightimg = cv::imread(rightCameraList[i],0);

cv::Mat leftimg = cv::imread(leftCameraList[i],0);

if (rightimg.size != leftimg.size) {

std::cout<<"Left Image size != Right Image Size"<<std::endl;

return 0;

}else{

imageSize = rightimg.size();

}

rightimg.convertTo(rightimg, CV_8U);

leftimg.convertTo(leftimg, CV_8U);

PointList right_ptList;

PointList left_ptList;

if (cv::findChessboardCorners(rightimg, CHECKERBOARD_SIZE, right_ptList)) {

if (cv::findChessboardCorners(leftimg, CHECKERBOARD_SIZE, left_ptList)) {

cv::cornerSubPix(rightimg, right_ptList, cv::Size(11,11), cv::Size(-1,-1),

cv::TermCriteria(cv::TermCriteria::COUNT+cv::TermCriteria::EPS,

30, 0.01));

cv::cornerSubPix(leftimg, left_ptList, cv::Size(11,11), cv::Size(-1,-1),

cv::TermCriteria(cv::TermCriteria::COUNT+cv::TermCriteria::EPS,

30, 0.01));

rightCameraPTvec.push_back(right_ptList);

leftCameraPTvec.push_back(left_ptList);

goodleftCameraList.push_back(leftCameraList[i]);

goodrightCameraList.push_back(rightCameraList[i]);

}else{

numGoodImgPairs--;

std::cout<<"CHESSBOARD NOT FOUND in LEFT IMG!"<<std::endl;

}

}else{

numGoodImgPairs--;

std::cout<<"CHESSBOARD NOT FOUND in RIGHT IMG!"<<std::endl;

}

}

if (numGoodImgPairs < 2) {

std::cout<<"Error: too little pairs to run the calibration"<<std::endl;

return 0;

}

objectPoints.resize(numGoodImgPairs);

for( int i = 0; i < numGoodImgPairs; i++ )

{

for( int j = 0; j < CHECKERBOARD_SIZE.height; j++ )

for( int k = 0; k < CHECKERBOARD_SIZE.width; k++ )

objectPoints[i].push_back(cv::Point3f(k*squareSize, j*squareSize, 0));

}

cv::Mat cameraMatrix[2], distCoeffs[2];

cameraMatrix[0] = cv::Mat::eye(3, 3, CV_64F);

cameraMatrix[1] = cv::Mat::eye(3, 3, CV_64F);

cv::Mat R, T, E, F;

// FOR MORE INFO ABOUT distCoeffs : Read BOOK [learning OpenCV , Page:411]

double rms = cv::stereoCalibrate(objectPoints, leftCameraPTvec, rightCameraPTvec,

cameraMatrix[0], distCoeffs[0],

cameraMatrix[1], distCoeffs[1],

imageSize, R, T, E, F,

cv::CALIB_FIX_ASPECT_RATIO +

cv::CALIB_ZERO_TANGENT_DIST +

cv::CALIB_SAME_FOCAL_LENGTH +

cv::CALIB_RATIONAL_MODEL +

cv::CALIB_FIX_K3 + cv::CALIB_FIX_K4 + cv::CALIB_FIX_K5,

cv::TermCriteria(cv::TermCriteria::COUNT+cv::TermCriteria::EPS, 100, 1e-5) );

std::cout << "done with RMS error=" << rms << std::endl;

// CALIBRATION QUALITY CHECK

// because the output fundamental matrix implicitly

// includes all the output information,

// we can check the quality of calibration using the

// epipolar geometry constraint: m2^t*F*m1=0

double err = 0;

int npoints = 0;

std::vector<cv::Vec3f> lines[2];

for(int i = 0; i < numGoodImgPairs; i++ )

{

int npt = (int)leftCameraPTvec[i].size();

cv::Mat imgpt[2];

imgpt[0] = cv::Mat(leftCameraPTvec[i]);

imgpt[1] = cv::Mat(rightCameraPTvec[i]);

for(int k = 0; k < 2; k++ )

{

cv::undistortPoints(imgpt[k], imgpt[k], cameraMatrix[k], distCoeffs[k], cv::Mat(), cameraMatrix[k]);

cv::computeCorrespondEpilines(imgpt[k], k+1, F, lines[k]);

}

for(int j = 0; j < npt; j++ )

{

double errij = fabs(leftCameraPTvec[i][j].x*lines[1][j][0] +

leftCameraPTvec[i][j].y*lines[1][j][1] + lines[1][j][2]) +

fabs(rightCameraPTvec[i][j].x*lines[0][j][0] +

rightCameraPTvec[i][j].y*lines[0][j][1] + lines[0][j][2]);

err += errij;

}

npoints += npt;

}

std::cout << "average reprojection err = " << err/npoints << std::endl;

// save intrinsic parameters

cv::FileStorage fs(PathPrefix+"data/intrinsics.yml", cv::FileStorage::WRITE);

if( fs.isOpened() )

{

fs << "M1" << cameraMatrix[0] << "D1" << distCoeffs[0] <<

"M2" << cameraMatrix[1] << "D2" << distCoeffs[1];

fs.release();

}

else

std::cout << "Error: can not save the intrinsic parameters\n";

cv::Mat R1, R2, P1, P2, Q;

cv::Rect validRoi[2];

cv::stereoRectify(cameraMatrix[0], distCoeffs[0],

cameraMatrix[1], distCoeffs[1],

imageSize, R, T, R1, R2, P1, P2, Q,

cv::CALIB_ZERO_DISPARITY, 1, imageSize, &validRoi[0], &validRoi[1]);

fs.open(PathPrefix+"data/extrinsics.yml", cv::FileStorage::WRITE);

if( fs.isOpened() )

{

fs << "R" << R << "T" << T << "R1" << R1 << "R2" << R2 << "P1" << P1 << "P2" << P2 << "Q" << Q;

fs.release();

}

else

std::cout << "Error: can not save the extrinsic parameters\n";

// OpenCV can handle left-right

// or up-down camera arrangements

bool isVerticalStereo = fabs(P2.at<double>(1, 3)) > fabs(P2.at<double>(0, 3));

//=======================================================================

//

// SHOW RECTIFIED BELOW

//

//======================================================================

// COMPUTE AND DISPLAY RECTIFICATION

bool showRectified = true;

if( !showRectified )

return 0;

cv::Mat rmap[2][2];

// IF BY CALIBRATED (BOUGUET'S METHOD)

bool useCalibrated = true;

if( useCalibrated )

{

// we already computed everything

}

// OR ELSE HARTLEY'S METHOD

else

// use intrinsic parameters of each camera, but

// compute the rectification transformation directly

// from the fundamental matrix

{

std::vector<cv::Point2f> allimgpt[2];

for(int k = 0; k < 2; k++ )

{

for(int i = 0; i < numGoodImgPairs; i++ ){

if (0 == k) {

std::copy(leftCameraPTvec[i].begin(), leftCameraPTvec[i].end(), back_inserter(allimgpt[k]));

}else

std::copy(rightCameraPTvec[i].begin(), rightCameraPTvec[i].end(), back_inserter(allimgpt[k]));

}

}

F = cv::findFundamentalMat(cv::Mat(allimgpt[0]), cv::Mat(allimgpt[1]), cv::FM_8POINT, 0, 0);

cv::Mat H1, H2;

cv::stereoRectifyUncalibrated(cv::Mat(allimgpt[0]), cv::Mat(allimgpt[1]), F, imageSize, H1, H2, 3);

R1 = cameraMatrix[0].inv()*H1*cameraMatrix[0];

R2 = cameraMatrix[1].inv()*H2*cameraMatrix[1];

P1 = cameraMatrix[0];

P2 = cameraMatrix[1];

fs.open(PathPrefix+"data/extrinsics2.yml", cv::FileStorage::WRITE);

if( fs.isOpened() )

{

fs << "R" << R << "T" << T << "R1" << R1 << "R2" << R2 << "P1" << P1 << "P2" << P2 << "Q" << Q;

fs.release();

}

else

std::cout << "Error: can not save the extrinsic parameters\n";

}

//Precompute maps for cv::remap()

cv::initUndistortRectifyMap(cameraMatrix[0], distCoeffs[0], R1, P1, imageSize, CV_16SC2, rmap[0][0], rmap[0][1]);

cv::initUndistortRectifyMap(cameraMatrix[1], distCoeffs[1], R2, P2, imageSize, CV_16SC2, rmap[1][0], rmap[1][1]);

cv::Mat canvas;

double sf;

int w, h;

if( !isVerticalStereo )

{

sf = 600./MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width*sf);

h = cvRound(imageSize.height*sf);

canvas.create(h, w*2, CV_8UC3);

}

else

{

sf = 300./MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width*sf);

h = cvRound(imageSize.height*sf);

canvas.create(h*2, w, CV_8UC3);

}

for(int i = 0; i < numGoodImgPairs; i++ )

{

for(int k = 0; k < 2; k++ )

{

cv::Mat img, rimg, cimg ;

if (0 == k) {

img = cv::imread(goodleftCameraList[i], 0) ;

}else{

img = cv::imread(goodrightCameraList[i],0);

}

cv::remap(img, rimg, rmap[k][0], rmap[k][1], cv::INTER_LINEAR);

cv::cvtColor(rimg, cimg, cv::COLOR_GRAY2BGR);

cv::Mat canvasPart = !isVerticalStereo ? canvas(cv::Rect(w*k, 0, w, h)) : canvas(cv::Rect(0, h*k, w, h));

cv::resize(cimg, canvasPart, canvasPart.size(), 0, 0, cv::INTER_AREA);

// if( useCalibrated )

// {

cv::Rect vroi(cvRound(validRoi[k].x*sf), cvRound(validRoi[k].y*sf),

cvRound(validRoi[k].width*sf), cvRound(validRoi[k].height*sf));

cv::rectangle(canvasPart, vroi, cv::Scalar(0,0,255), 3, 8);

// }

}

if( !isVerticalStereo )

for(int j = 0; j < canvas.rows; j += 16 )

cv::line(canvas, cv::Point(0, j), cv::Point(canvas.cols, j), cv::Scalar(0, 255, 0), 1, 8);

else

for(int j = 0; j < canvas.cols; j += 16 )

cv::line(canvas, cv::Point(j, 0), cv::Point(j, canvas.rows), cv::Scalar(0, 255, 0), 1, 8);

cv::imshow("rectified", canvas);

char c = (char)cv::waitKey();

if( c == 27 || c == 'q' || c == 'Q' )

break;

}

cv::waitKey(0);

return 0;

}