Hello, I have two major questions. I have been researching this problem for a month and have not found an exact solution for my situation.

Intro and Question 1.

I'm working on a 3D reconstruction project (apologies for my imperfect English). I have two cameras with extension units to capture close-range images (about 10 cm from the camera). A parallel stereo setup cannot capture the object fully: for example, an object in the left image does not appear in the right image, because the two cameras see different parts of the scene. So I tried non-parallel cameras (about 60 degrees between them), which can both see the same small object at close range. I calibrated the cameras using a symmetric circles grid pattern, because the view area is very small (about 1 cm) and it is difficult to print a chessboard of high enough quality at that size; with a chessboard, the RMS error after calibration was over 20. I used the general calibration example `stereo_calib.cpp` from the OpenCV samples. With the circles grid pattern I got an RMS error of 1.3.

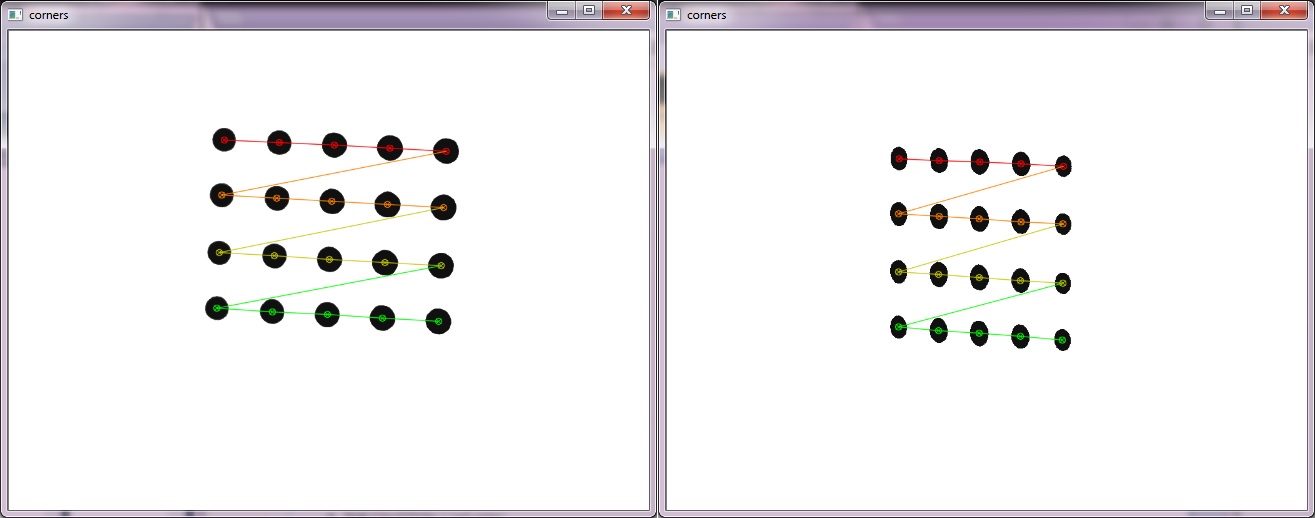

The calibration image is shown in Fig.1.

Fig.1

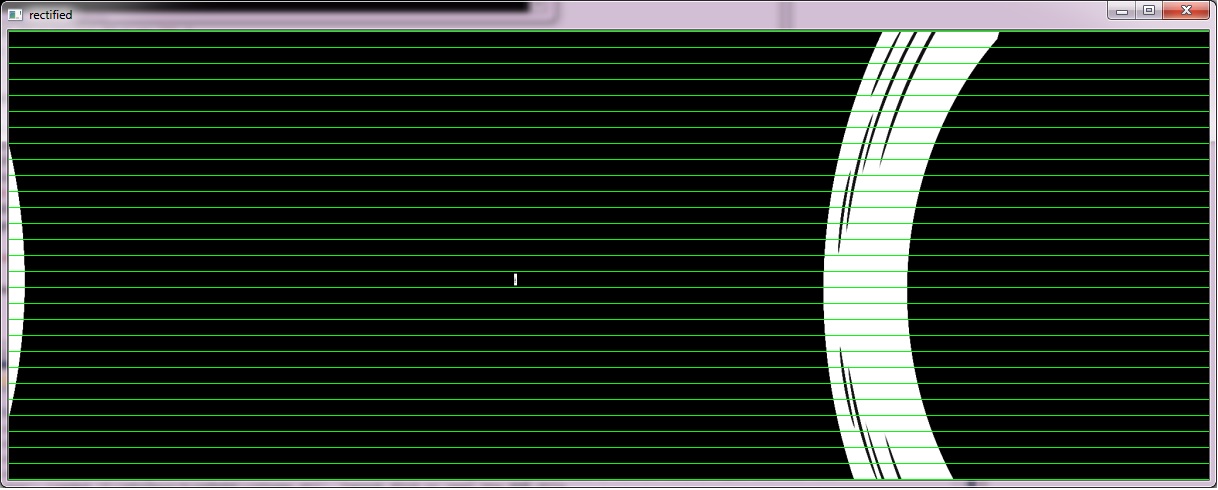

The problem is: when I use the `useCalibrated = 1` setting, the rectified images look weird (Fig.2).

Fig.2

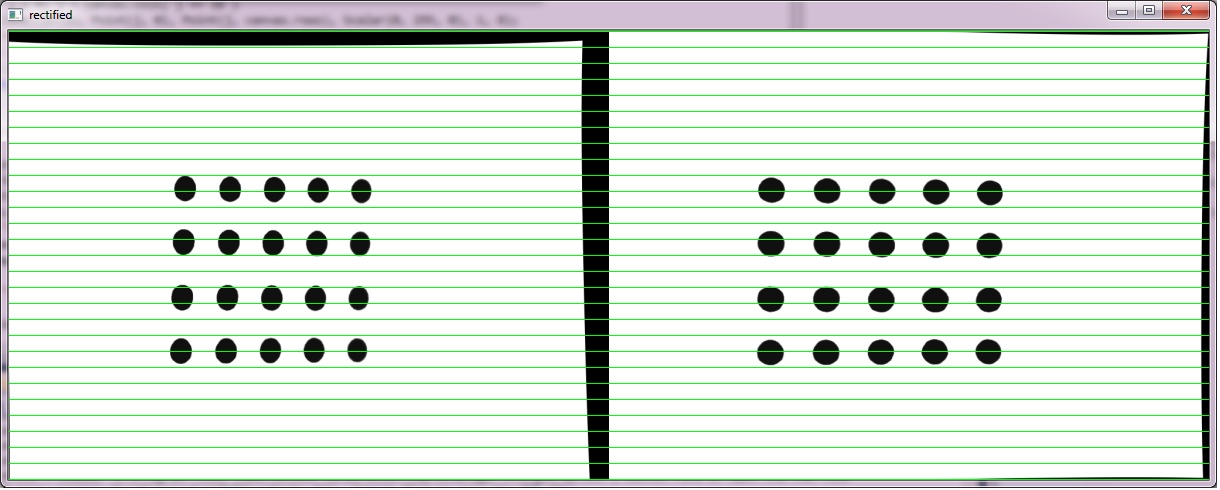

When I use `useCalibrated = 0` (which computes the fundamental matrix and calls `stereoRectifyUncalibrated`), the rectification looks good (Fig.3):

Fig.3
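For reference, this is how I understand the uncalibrated path in the sample; the symbols below are my own notation, not taken from the code. The fundamental matrix $F$ is estimated from matched points $\tilde{x}_1$, $\tilde{x}_2$ (homogeneous pixel coordinates in the first and second image), `stereoRectifyUncalibrated` returns two homographies $H_1$, $H_2$, and, as far as I can tell, the sample converts them into the rotation and projection matrices used for remapping:

$$
\tilde{x}_2^{\top} F \, \tilde{x}_1 = 0, \qquad
R_i = K_i^{-1} H_i K_i, \qquad
P_i = K_i, \qquad i = 1, 2
$$

Here $K_i$ are the camera matrices from calibration. This path never uses the stereo rotation and translation between the cameras, which is part of why I suspect the calibrated path.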

In my opinion, the problem lies in the projection and rotation matrices returned by the `stereoRectify` function.

Question-2

Suppose I have a good calibration of my two non-parallel cameras. Suppose also that I have captured left and right images, applied some preprocessing to get clean feature points, and now have two point clouds (left and right) whose points are already matched and ready to be triangulated. Can I use a general triangulation method directly, or should I apply some transformation and/or rotation before triangulating?
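To make concrete what I mean by a "general triangulation method", here is a minimal sketch of midpoint triangulation in plain C++ (no OpenCV). It rests on several assumptions of mine: the stereo extrinsics follow the convention `X_right = R * X_left + T`, the matched points are given in normalized image coordinates `(x, y, 1)` (for example after `cv::undistortPoints`), and the result is expressed in the left camera frame. The names `Vec3`, `Mat3`, `mulTransposed` and `triangulateMidpoint` are hypothetical helpers written for this post.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major 3x3 matrix

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Computes R^T * v (inverse rotation, since R is orthonormal).
Vec3 mulTransposed(const Mat3& R, const Vec3& v) {
    Vec3 out{};
    for (int i = 0; i < 3; ++i) {
        out[i] = R[0][i] * v[0] + R[1][i] * v[1] + R[2][i] * v[2];
    }
    return out;
}

// Midpoint triangulation of one matched pair.
// xLeft, xRight: normalized image coordinates (x, y, 1) in each camera.
// R, T: assumed convention X_right = R * X_left + T.
// Returns the 3D point in the left camera frame.
Vec3 triangulateMidpoint(const Vec3& xLeft, const Vec3& xRight,
                         const Mat3& R, const Vec3& T) {
    // Left ray: starts at the origin with direction d1.
    const Vec3 d1 = xLeft;
    // Right ray expressed in the left frame:
    // camera centre c2 = -R^T * T, direction d2 = R^T * xRight.
    const Vec3 d2 = mulTransposed(R, xRight);
    const Vec3 w = mulTransposed(R, T);  // w = origin - c2 = R^T * T

    const double a = dot(d1, d1);
    const double b = dot(d1, d2);
    const double c = dot(d2, d2);
    const double d = dot(d1, w);
    const double e = dot(d2, w);

    const double denom = a * c - b * b;
    assert(std::abs(denom) > 1e-12 && "rays are (nearly) parallel");

    // Parameters of the closest points on each ray.
    const double s = (b * e - c * d) / denom;
    const double t = (a * e - b * d) / denom;

    // Midpoint between s*d1 and c2 + t*d2, with c2 = -w.
    Vec3 X{};
    for (int i = 0; i < 3; ++i) {
        X[i] = 0.5 * (s * d1[i] + (-w[i] + t * d2[i]));
    }
    return X;
}
```

In this sketch the non-parallel geometry enters only through `R` and `T`, and no rectification step appears. Whether that is really sufficient for my setup is exactly what I am asking.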

Apologies again for my English. Any opinions, suggestions, guidance, or comments will be appreciated.

Thank you for your time!