Hi. I'm working on matching keypoints between video frames. My current approach is:

- do a simple match()

- calculate the average DMatch.distance

- do a radiusMatch() with a radius derived from that average distance (a quarter of it in the code below)

- calculate the average absolute difference of the matched points' y coordinates

- keep only the matches whose y coordinate difference is within 5 pixels of that average
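The last two steps can be sketched without OpenCV. This is only a minimal illustration under stated assumptions: `Match` and `Point` are hypothetical stand-ins for `cv::DMatch` and `cv::KeyPoint::pt`, the helper names are made up for this example, and `queryIdx` is assumed to index the first point set while `trainIdx` indexes the second.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for cv::DMatch and cv::KeyPoint::pt.
struct Match { int queryIdx; int trainIdx; float distance; };
struct Point { float x; float y; };

// Mean descriptor distance over all matches (0 for an empty set).
float meanDistance(const std::vector<Match>& matches) {
    if (matches.empty()) return 0.0f;
    float sum = 0.0f;
    for (const Match& m : matches) sum += m.distance;
    return sum / static_cast<float>(matches.size());
}

// Absolute y difference of one matched pair.
// Assumption: queryIdx indexes queryPts, trainIdx indexes trainPts.
float absDy(const Match& m, const std::vector<Point>& queryPts,
            const std::vector<Point>& trainPts) {
    return std::fabs(queryPts[static_cast<std::size_t>(m.queryIdx)].y
                     - trainPts[static_cast<std::size_t>(m.trainIdx)].y);
}

// Keep the matches whose |dy| lies within `tolerance` of the mean |dy|.
std::vector<Match> filterByDy(const std::vector<Match>& matches,
                              const std::vector<Point>& queryPts,
                              const std::vector<Point>& trainPts,
                              float tolerance) {
    assert(tolerance >= 0.0f);
    if (matches.empty()) return {};

    float mean = 0.0f;
    for (const Match& m : matches) mean += absDy(m, queryPts, trainPts);
    mean /= static_cast<float>(matches.size());

    std::vector<Match> kept;
    for (const Match& m : matches) {
        if (std::fabs(absDy(m, queryPts, trainPts) - mean) <= tolerance)
            kept.push_back(m);
    }
    return kept;
}
```

Because the threshold is centered on the mean, a few large outliers shift it noticeably; a median might be a more robust center, but that is a design choice I have not tested.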

These steps produce somewhat satisfactory results. However, there are currently two issues:

- Sometimes Match.queryIdx or Match.trainIdx after a radiusMatch() are outside of keypoint vectors' ranges.

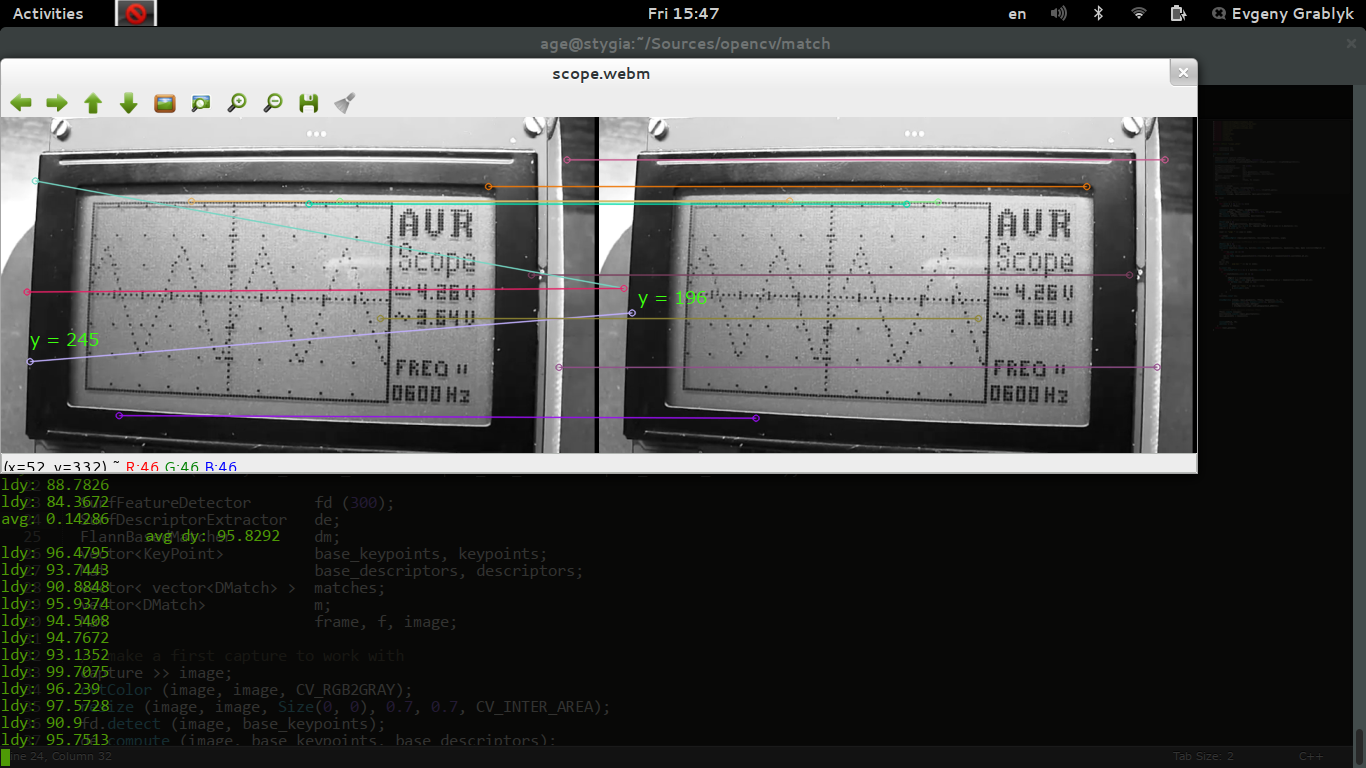

- Image coordinates displayed by the Qt GUI do not match point coordinates in-code; some matches which should have definitely filtered out still pass (see image below)
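For reference on the first issue: in OpenCV's DescriptorMatcher interface the first descriptor argument of match() and radiusMatch() is the query set and the second is the train set, so DMatch.queryIdx refers to keypoints of the first argument and DMatch.trainIdx to keypoints of the second. A minimal bounds check under that convention might look like the sketch below; `MatchIdx` and `indicesInRange` are hypothetical names for illustration, not part of OpenCV.

```cpp
#include <cstddef>

// Hypothetical mirror of the two index fields of cv::DMatch.
struct MatchIdx { int queryIdx; int trainIdx; };

// True when both indices are valid for their own keypoint vector:
// queryIdx against the query (first-argument) keypoints,
// trainIdx against the train (second-argument) keypoints.
bool indicesInRange(const MatchIdx& m, std::size_t queryCount,
                    std::size_t trainCount) {
    return m.queryIdx >= 0 && m.trainIdx >= 0
        && static_cast<std::size_t>(m.queryIdx) < queryCount
        && static_cast<std::size_t>(m.trainIdx) < trainCount;
}
```

With 3 query keypoints and 5 train keypoints, the pair (queryIdx 2, trainIdx 4) is valid, while the same pair read the other way round (queryIdx 4, trainIdx 2) is not, so checking an index against the wrong vector can look like an out-of-range result whenever the two frames yield different keypoint counts.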

Any feedback on my approach here would be appreciated as well.

Code:

(note: C++11 lambdas are used; OpenCV 2.4.2)

    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/nonfree/features2d.hpp>
    #include <opencv2/imgproc/imgproc.hpp>
    #include <opencv2/calib3d/calib3d.hpp>
    #include <iostream>
    #include <vector>
    #include <algorithm>
    #include <array>
    #include <cmath>
    #include <stdio.h>
    #include <stdlib.h>

    #define VFILE "scope.webm"

    using namespace cv;
    using namespace std;

    int main (void)
    {
        VideoCapture capture (VFILE);
        capture.set (CV_CAP_PROP_POS_MSEC, 138180.0);

        namedWindow (VFILE, CV_WINDOW_KEEPRATIO | CV_GUI_EXPANDED
                            | CV_WINDOW_AUTOSIZE);

        SurfFeatureDetector fd (100);
        SurfDescriptorExtractor de;
        FlannBasedMatcher dm;

        vector<KeyPoint> base_keypoints, keypoints;
        Mat base_descriptors, descriptors;
        vector< vector<DMatch> > matches;
        vector<DMatch> m;
        Mat frame, f, image;

        // make a first capture to work with
        capture >> image;
        cvtColor (image, image, CV_RGB2GRAY);
        resize (image, image, Size(0, 0), 0.7, 0.7, CV_INTER_AREA);
        fd.detect (image, base_keypoints);
        de.compute (image, base_keypoints, base_descriptors);

        for (;;)
        {
            // skip some frames
            for (int i = 0; i <= 3; i++)
                capture >> frame;

            cvtColor (frame, frame, CV_RGB2GRAY);
            resize (frame, frame, Size(0, 0), 0.7, 0.7, CV_INTER_AREA);
            fd.detect (frame, keypoints);
            de.compute (frame, keypoints, descriptors);

            // calculate the radius for radiusMatch: a quarter of the
            // average distance of the simple matches
            float avg = 0;
            dm.match (base_descriptors, descriptors, m);
            for_each (m.begin (), m.end (), [&avg] (DMatch m)
            {
                avg += m.distance;
            });
            avg /= ( m.size () * 4 );
            cout << "avg: " << avg << endl;

            if (avg)
                dm.radiusMatch (base_descriptors, descriptors, matches, avg);

            // calculate average difference of y coordinates
            double dy = 0;
            unsigned int el = 0;
            for_each (matches.begin (), matches.end (), [base_keypoints, keypoints,
                                                         &dy, &el] (vector<DMatch> v)
            {
                if (v.size () == 0)
                    return;
                // skip matches whose indices are out of range
                if (v[0].trainIdx >= base_keypoints.size ()
                    || v[0].queryIdx >= keypoints.size ())
                    return;
                dy += fabs (base_keypoints[v[0].trainIdx].pt.y
                            - keypoints[v[0].queryIdx].pt.y);
                el++;
            });
            dy /= el;
            cout << " avg dy: " << dy << endl;

            // keep the best match of each group if its y difference
            // is within 5 pixels of the average
            m.clear ();
            for (unsigned int i = 0; i < matches.size(); i++)
            {
                if (matches[i].size () >= 1)
                {
                    DMatch v = matches[i][0];
                    double ldy = fabs (base_keypoints[v.trainIdx].pt.y
                                       - keypoints[v.queryIdx].pt.y);
                    if ((fabs (dy - ldy) <= 5))
                    {
                        cout << "ldy: " << ldy << endl;
                        m.push_back (v);
                    }
                }
            }
            matches.clear ();

            drawMatches (image, base_keypoints, frame, keypoints, m, f,
                         Scalar::all(-1), Scalar::all(-1), vector<char>(),
                         DrawMatchesFlags::DEFAULT
                         | DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

            // current frame becomes previous frame
            frame.copyTo (image);
            descriptors.copyTo (base_descriptors);
            base_keypoints = keypoints;

            imshow(VFILE, f);
            waitKey (-1);
        }

        return EXIT_SUCCESS;
    }

In the program output, "avg dy" is the average absolute difference between the matched keypoints' y coordinates, and "ldy" is that difference for an individual match (keypoint pair).