Use case:

Assume a spacecraft observing the Earth moves along its orbit fast enough that the motion of the observed objects (clouds, land, etc.) is negligible.

Consider the following Earth observation frames captured by a spacecraft along its orbit.

Frame 1

Frame 2

Frame 3

Goal:

Using only two frames, determine the direction of motion of the spacecraft/camera.

Thought process:

My idea was to use optical flow to estimate how individual points move between the two frames.

I then average the individual displacement vectors to get the direction of motion. Currently, I do this by summing all the normalized vectors and hoping that, with a sufficiently large number of points, the resulting vector falls within a tolerable error.
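For reference, the averaging step described above can be sketched as follows. This is a minimal, OpenCV-free sketch: `Vec2` is a hypothetical stand-in for `cv::Point2f`, and `meanDirection` / `DirectionEstimate` are illustrative names. Besides the angle, it returns the length of the summed unit vectors divided by their count (the "mean resultant length" from circular statistics). A value near 1 means the tracks agree, while a value well below 1 is a cheap warning that the average is being pulled around by inconsistent tracks.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f so the sketch builds without OpenCV.
struct Vec2 {
    double x;
    double y;
};

struct DirectionEstimate {
    double angleDeg;   // direction of the summed unit vectors, from +x toward +y (image y points down)
    double resultant;  // |sum of unit vectors| / N: 1 = all agree, near 0 = no dominant direction
};

// Sum the unit vectors of each displacement (next - prev), i.e. the
// "add up all the normalized vectors" approach. Zero-length displacements
// carry no direction and are skipped.
DirectionEstimate meanDirection(const std::vector<Vec2>& prev, const std::vector<Vec2>& next) {
    assert(prev.size() == next.size());
    const double kPi = std::acos(-1.0);
    double sx = 0.0, sy = 0.0;
    std::size_t used = 0;
    for (std::size_t i = 0; i < prev.size(); ++i) {
        const double dx = next[i].x - prev[i].x;
        const double dy = next[i].y - prev[i].y;
        const double len = std::hypot(dx, dy);
        if (len == 0.0) continue;
        sx += dx / len;
        sy += dy / len;
        ++used;
    }
    if (used == 0) return {0.0, 0.0};
    return {std::atan2(sy, sx) * 180.0 / kPi, std::hypot(sx, sy) / static_cast<double>(used)};
}
```

With three tracks moving 5 px to the right and one bad track jumping 100 px up, every vector gets equal weight after normalization, so the single outlier tilts the result by about 18 degrees and drops the resultant to roughly 0.79. That sensitivity is one reason a plain average can go badly wrong when many tracks are mismatched.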

I've adapted the sample application lkdemo.cpp (v3.4.9) to accept just two frames (prev and next) as input and to output the prev image annotated with the detected points, plus lines pointing to where those points end up in next.

Refer to lkdemo.cpp here: https://github.com/opencv/opencv/blob/3.4.9/samples/cpp/lkdemo.cpp#L84

The arguments to goodFeaturesToTrack, cornerSubPix, and calcOpticalFlowPyrLK were not changed.
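One common way to discard unreliable Lucas-Kanade tracks is a forward-backward check: track the points from prev to next, then call `calcOpticalFlowPyrLK` again with the two images swapped, starting from the forward results, and keep only points that return close to where they started. OpenCV's Python sample `lk_track.py` does this with a 1-pixel threshold. The sketch below implements only the filtering step, assuming the second (backward) LK call has already produced `back`; `Vec2` and `forwardBackwardInliers` are hypothetical names, and `status` is assumed to combine the success flags of both passes.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f.
struct Vec2 {
    double x;
    double y;
};

// Returns indices of tracks that survive a forward-backward consistency check.
//   prev     : points detected in the first frame
//   back     : result of tracking the forward points from the second frame back to the first
//   status   : per-point flags, 1 = tracked successfully in both passes (assumed combined by caller)
//   maxErrPx : allowed round-trip error in pixels (1.0 mirrors lk_track.py)
std::vector<std::size_t> forwardBackwardInliers(const std::vector<Vec2>& prev,
                                                const std::vector<Vec2>& back,
                                                const std::vector<unsigned char>& status,
                                                double maxErrPx = 1.0) {
    assert(prev.size() == back.size() && prev.size() == status.size());
    std::vector<std::size_t> keep;
    for (std::size_t i = 0; i < prev.size(); ++i) {
        if (!status[i]) continue;
        // Largest per-axis round-trip error, as in lk_track.py.
        const double err = std::max(std::fabs(prev[i].x - back[i].x),
                                    std::fabs(prev[i].y - back[i].y));
        if (err < maxErrPx) keep.push_back(i);
    }
    return keep;
}
```

Only the surviving indices would then be fed into the direction estimate. This check tends to matter more as the gap between frames grows, because LK is more likely to lock onto the wrong texture when displacements are large.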

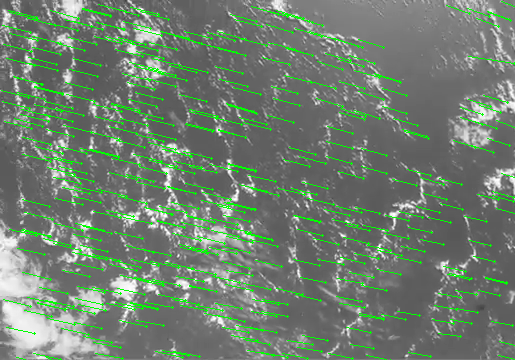

This looks OK for adjacent frames:

Frame 1 -> Frame 2

Frame 2 -> Frame 3

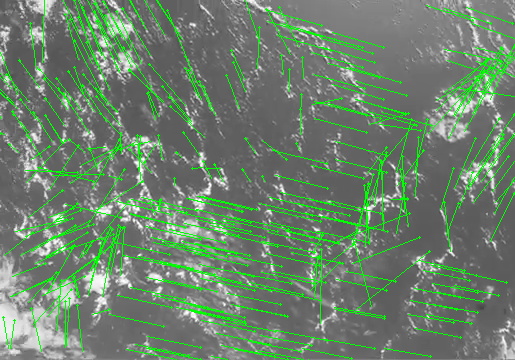

However, in some cases (e.g., when skipping a frame), the result doesn't look so good.

Frame 1 -> Frame 3

Averaging the directions in a case like this usually gives a badly wrong result.
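Since the scene is assumed static, correct tracks between two frames should share nearly the same displacement, at least if the frame-to-frame motion is close to a pure image translation (an assumption: it ignores rotation, scale, and Earth curvature). Wrong matches then scatter, and a consensus estimate can ignore them instead of averaging them in. The sketch below is a deterministic, exhaustive variant of RANSAC for a translation-only model: every track's displacement is tried as a hypothesis, the one supported by the most tracks within `tolPx` wins, and its supporters are averaged. It is O(n²), which should be fine for a few hundred tracks; `consensusShift` and `TranslationEstimate` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f.
struct Vec2 {
    double x;
    double y;
};

struct TranslationEstimate {
    Vec2 shift;           // mean image displacement of the inlier tracks, in pixels
    std::size_t inliers;  // number of tracks that agree with it
};

// Translation-only consensus: try each track's displacement as a hypothesis,
// count tracks within tolPx of it, keep the best-supported one, and return the
// average displacement of its supporters.
TranslationEstimate consensusShift(const std::vector<Vec2>& prev,
                                   const std::vector<Vec2>& next,
                                   double tolPx) {
    assert(prev.size() == next.size());
    const std::size_t n = prev.size();
    std::vector<Vec2> d(n);
    for (std::size_t i = 0; i < n; ++i) {
        d[i] = {next[i].x - prev[i].x, next[i].y - prev[i].y};
    }
    TranslationEstimate best{{0.0, 0.0}, 0};
    for (std::size_t i = 0; i < n; ++i) {
        double sx = 0.0, sy = 0.0;
        std::size_t count = 0;
        for (std::size_t j = 0; j < n; ++j) {
            if (std::hypot(d[j].x - d[i].x, d[j].y - d[i].y) <= tolPx) {
                sx += d[j].x;
                sy += d[j].y;
                ++count;
            }
        }
        // count >= 1 here because every hypothesis supports itself.
        if (count > best.inliers) {
            best = {{sx / count, sy / count}, count};
        }
    }
    return best;
}
```

For a roughly nadir-pointing camera, ground features appear to move opposite to the camera, so the spacecraft's direction in image coordinates would be approximately `-shift`. The inlier count also doubles as a sanity check: if only a small fraction of tracks agree, the frame pair is probably too far apart to trust. If rotation or scale between frames matters, OpenCV's `cv::estimateAffinePartial2D` (RANSAC by default) fits a richer model to the same point pairs.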

Question

Any advice for improving the result in cases like Frame 1 -> Frame 3?

Any input on the overall approach for this particular use case? I'm new to OpenCV and image processing in general.

Thanks.