I am working on a fish detection algorithm for sonar images as a pet open-source project using OpenCV. I am looking for advice from someone with computer vision experience on how to improve its accuracy, most likely by improving the thresholding/segmentation algorithm it uses.

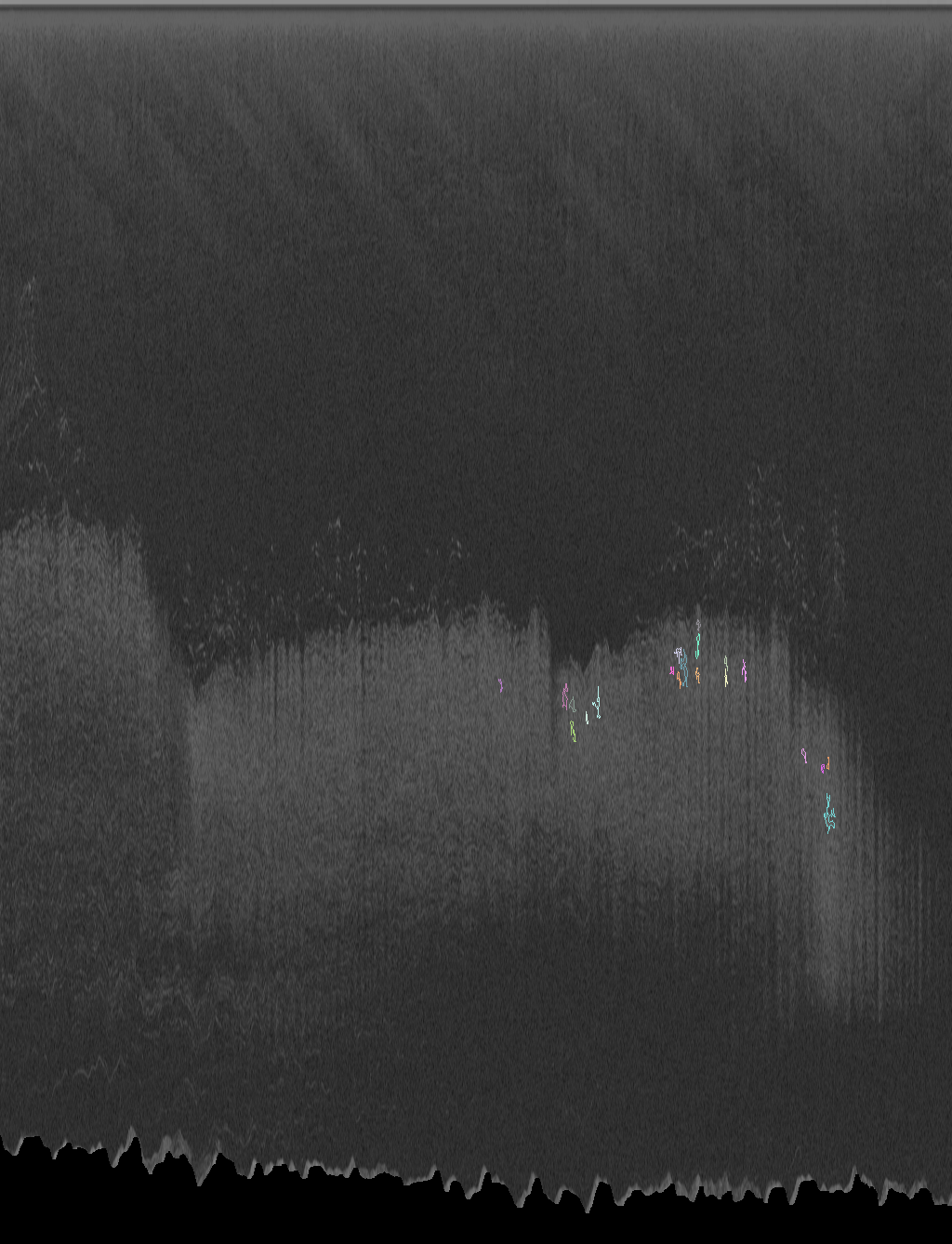

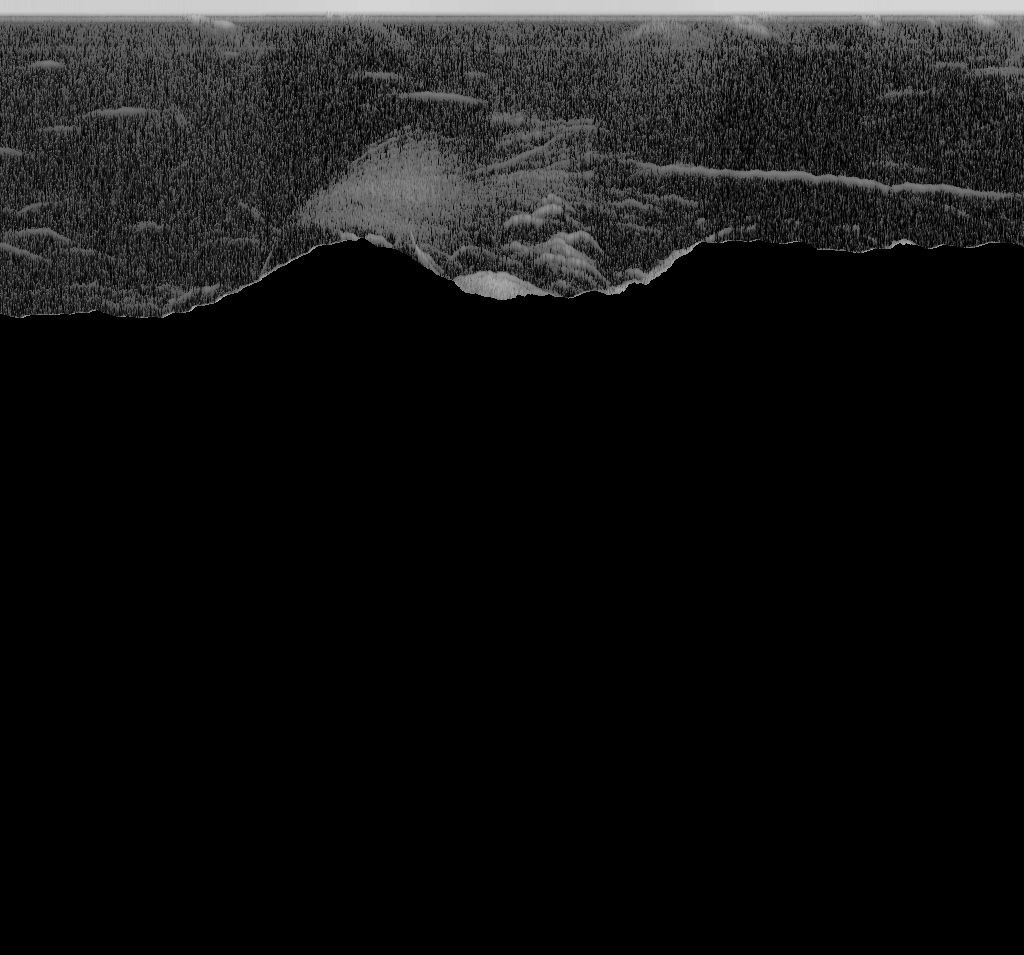

Sonar images look a bit like the example below, and the basic artifacts I want to find in them are:

- Upside-down horizontal arches that are likely fish

- Cloud-, blob-, or ball-shaped artifacts that are likely schools of bait fish

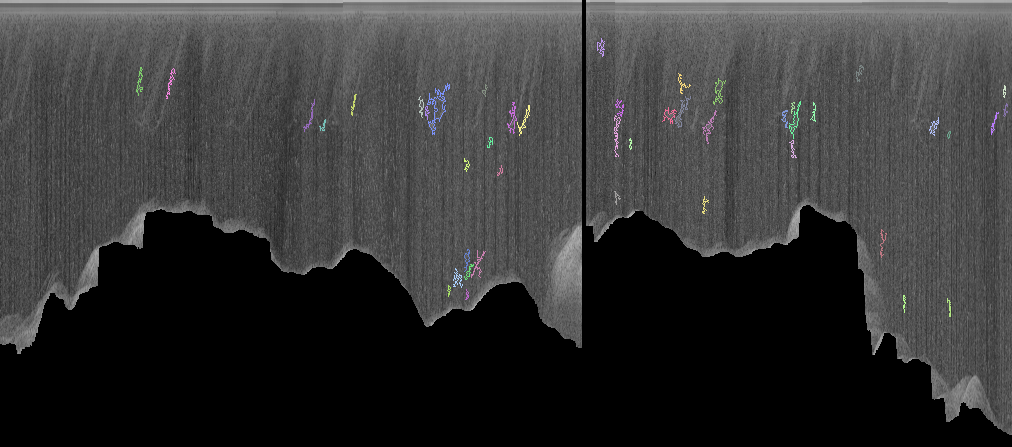

I would really like to extract contours of these cloud and fish-arch artifacts.

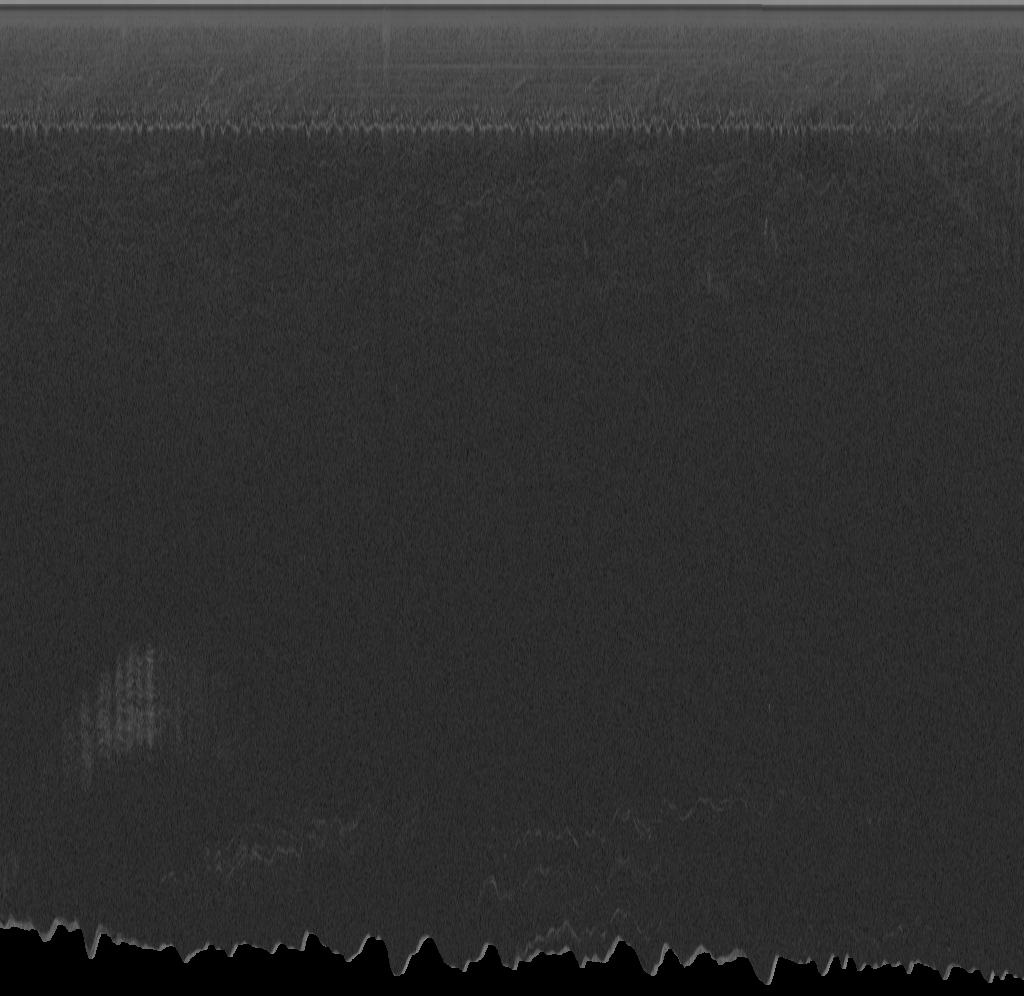

The example code below uses threshold() and findContours(). The results are reasonable in this case because the threshold has been manually tuned for this image, but the same code does not work on other sonar images, which may require different thresholds or a different thresholding algorithm.

I have tried Otsu's method, and it does not work well for this use case. I think I need a thresholding/segmentation algorithm that somehow uses the local contrast of individual blobs. Does such an algorithm exist in OpenCV, or is there some other technique I should look into?

Thanks, Brendon.

Original image to search for artifacts:

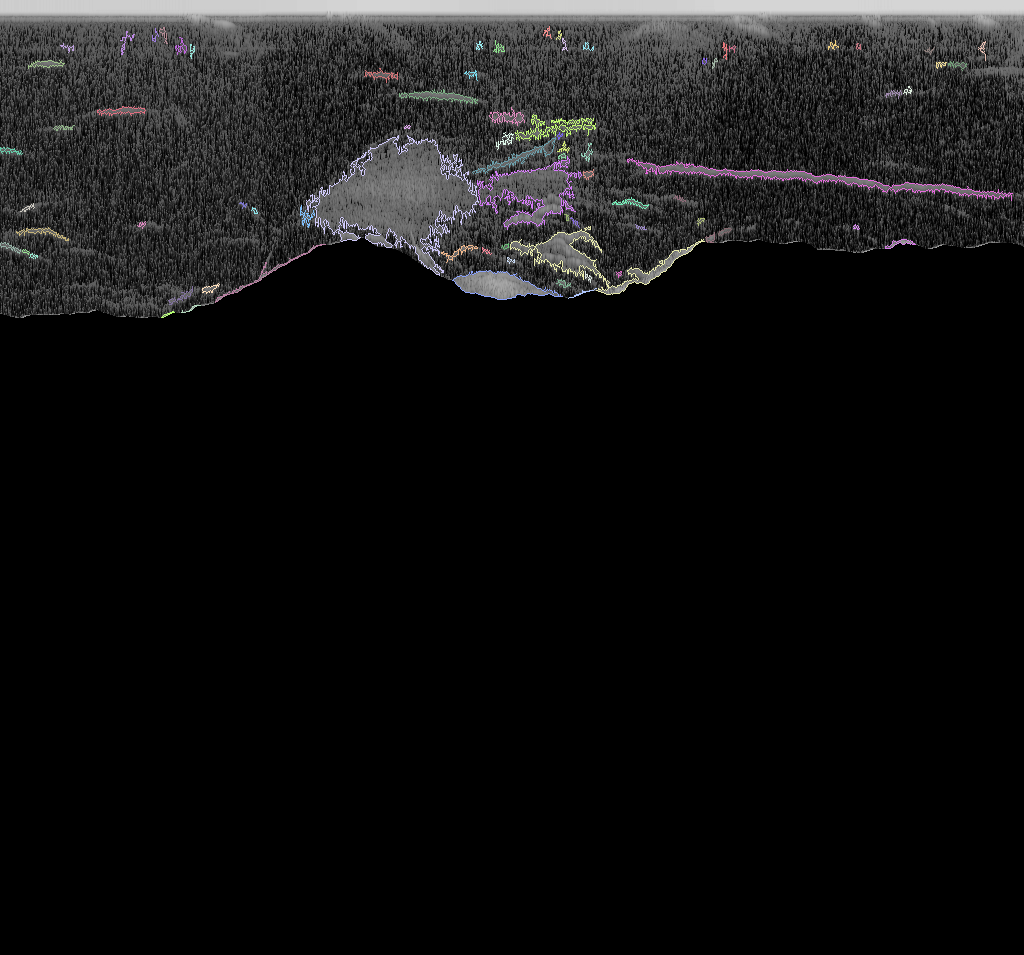

Example output:

Example code:

    import random

    import cv2

    MIN_AREA = 10
    MIN_THRESHOLD = 90


    def IsContourUseful(contour):
        # I have a much more complex version of this in my real code
        # This is good enough for demo of the concept and easier to understand

        # Filter on area for all items
        area = cv2.contourArea(contour)
        if area < MIN_AREA:
            return False

        # Remove any contours close to the top
        # (contour has shape (N, 1, 2); index 1 of each point is its y coordinate)
        for i in range(0, contour.shape[0]):
            if contour[i][0][1] <= 10:
                return False

        return True


    def FindFishContoursInImageWithoutBottom(image, file_name_base):
        # Fixed global threshold, manually tuned for this one image
        ret, thresh = cv2.threshold(image, MIN_THRESHOLD, 255, cv2.THRESH_BINARY)
        cv2.imwrite(file_name_base + 'thresholded.png', thresh)

        contours, hierarchy = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
        contours = [c for c in contours if IsContourUseful(c)]
        print('Found %s interesting contours' % (len(contours)))

        # Let's draw each contour with a different colour so we can see them as separate items
        im_colour = cv2.cvtColor(image, cv2.COLOR_GRAY2BGR)
        um2 = cv2.UMat(im_colour)
        for contour in contours:
            colour = (random.randint(100, 255), random.randint(100, 255), random.randint(100, 255))
            um2 = cv2.drawContours(um2, [contour], -1, colour, 1)
        cv2.imwrite(file_name_base + 'contours.png', um2)

        return contours


    # Load png and make greyscale as that is what original sonar data looks like
    file_name_base = 'fish_image_cropped_erased_bottom'
    image = cv2.imread(file_name_base + '.png')
    if image is None:
        raise FileNotFoundError(file_name_base + '.png')
    # cv2.imread returns channels in BGR order, so convert from BGR
    image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    FindFishContoursInImageWithoutBottom(image, file_name_base)