I want to build a device that measures anything you put on it. It is basically a camera under a sheet of glass, but the camera only responds to infrared light (so I have IR LED strips around the viewport). The problem I am facing is finding a strategy to calibrate the camera. Because of the camera lens, the image is distorted a bit like a fish-eye.

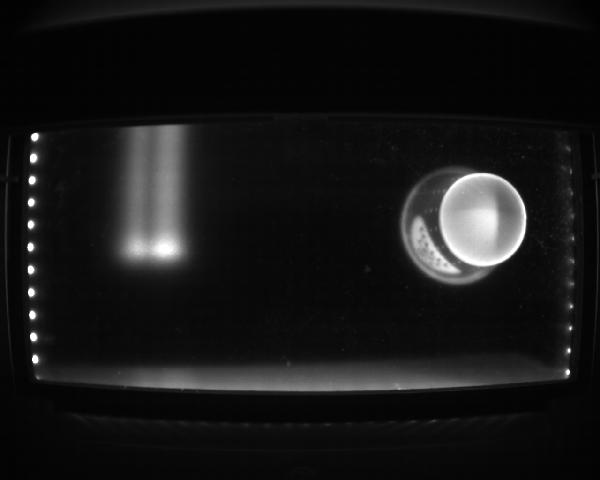
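To make the "fish-eye" effect concrete, here is a minimal sketch of the two-term radial distortion model that checkerboard calibration typically tries to estimate. The names `Point2` and `distortRadial` and the coefficient values are assumptions for illustration; they are not measured from this camera.

```cpp
#include <cmath>

// Normalized image point: pixel coordinates with the principal point
// subtracted and then divided by the focal length.
struct Point2 {
    double x;
    double y;
};

// Apply two-term radial distortion: p_d = p * (1 + k1*r^2 + k2*r^4).
// A negative k1 pulls points toward the centre (barrel distortion),
// which is what gives an image its fish-eye look.
// k1 and k2 are illustrative inputs, not values for this setup.
Point2 distortRadial(Point2 p, double k1, double k2) {
    const double r2 = p.x * p.x + p.y * p.y;
    const double scale = 1.0 + k1 * r2 + k2 * r2 * r2;
    return {p.x * scale, p.y * scale};
}
```

The image centre is left untouched and the shift grows with distance from it. If a calibration returns coefficients close to zero, an "undistorted" image would look almost the same as the input.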

The picture below shows the concept. I have IR LEDs on all 4 sides of the viewport. The viewport does not cover the full resolution of the camera: the camera is 1280x1024 and the viewport (ROI) is roughly 1100x600 pixels. In this picture you can see a paper coffee cup and light from the ceiling (yes, the ceiling light has some IR content, but that is not the main problem).

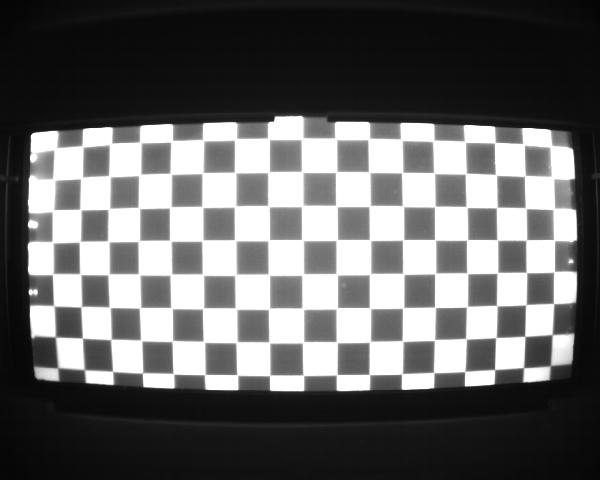

I have printed a large checkerboard with 20 mm squares, and this is how it looks normally (with reduced camera exposure):

So my question is: how should I go about calibrating this setup? I tried "calibrate.cpp" from the OpenCV examples, but it does not work: the "undistorted" image it yields is no different from the original picture. It also does not detect all the squares; it only detects the squares in the centre (about 7x5).
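For context, `cv::calibrateCamera` needs the known 3D positions of the board corners alongside the corners detected in each image. Below is a minimal sketch of building that grid for a board with 20 mm squares. `Point3` and `makeBoardPoints` are hypothetical names (in OpenCV code the element type would be `cv::Point3f`), and the 7x5 corner count is taken only from the partial detection described above, not from the real board size.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for cv::Point3f so the sketch compiles without OpenCV.
struct Point3 {
    float x;
    float y;
    float z;
};

// Build the planar grid of inner-corner positions, row by row,
// in millimetres. The board lies in the z = 0 plane.
std::vector<Point3> makeBoardPoints(int cornersX, int cornersY, float squareMm) {
    assert(cornersX > 0 && cornersY > 0 && squareMm > 0.0f);
    std::vector<Point3> pts;
    pts.reserve(static_cast<std::size_t>(cornersX) *
                static_cast<std::size_t>(cornersY));
    for (int row = 0; row < cornersY; ++row) {
        for (int col = 0; col < cornersX; ++col) {
            pts.push_back({col * squareMm, row * squareMm, 0.0f});
        }
    }
    return pts;
}
```

Calling `makeBoardPoints(7, 5, 20.0f)` gives 35 points spanning 120 mm by 80 mm. The corner counts have to match the pattern size passed to `cv::findChessboardCorners`, which counts inner corners rather than squares.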

How would you approach such a problem? Where should I begin? Can I get away with this big checkerboard and calibrate from a single picture? Or do I need a smaller checkerboard that I move around the viewport to collect more samples for calibration?

Is it enough to feed only the viewport to the calibration code (i.e. crop the Mat to the ROI)?
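One thing worth keeping in mind with the cropping question: under the usual pinhole model, cropping does not change the focal lengths or distortion coefficients, but it does shift the principal point by the ROI offset. A minimal sketch of that bookkeeping follows; `Intrinsics` and `cropIntrinsics` are hypothetical names, and any ROI offset passed in is an assumption, since the actual position of the viewport in the frame is not given here.

```cpp
#include <cassert>

// Pinhole intrinsics in pixels: focal lengths and principal point.
struct Intrinsics {
    double fx;
    double fy;
    double cx;
    double cy;
};

// Convert full-frame intrinsics to the ones that apply to an image
// cropped at top-left corner (roiX, roiY). Only the principal point
// moves; focal lengths stay the same.
Intrinsics cropIntrinsics(Intrinsics full, int roiX, int roiY) {
    assert(roiX >= 0 && roiY >= 0);
    return {full.fx, full.fy, full.cx - roiX, full.cy - roiY};
}
```

For example, if the 1100x600 viewport happened to be centred in the 1280x1024 frame, its top-left corner would be at (90, 212), and a full-frame principal point of (640, 512) would become (550, 300) in the cropped image. Parameters estimated on cropped images are only valid for images cropped in exactly the same way.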