I am trying to measure the size of an object in an image, but my code detects the small details in the picture instead of the object I want. Here is my code:

```python
import cv2
import imutils
import numpy as np
from imutils import contours, perspective

# `image` is the loaded BGR image and `gray` its grayscale version (loaded earlier, not shown)
edged = cv2.Canny(gray, 50, 20)  # 50 and 20 are the two hysteresis thresholds
edged = cv2.dilate(edged, None, iterations=1)
edged = cv2.erode(edged, None, iterations=1)

cnts = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
cnts = imutils.grab_contours(cnts)

(cnts, _) = contours.sort_contours(cnts)
pixelsPerMetric = None

for c in cnts:
    # if the contour is not sufficiently large, ignore it
    if cv2.contourArea(c) < 60:
        continue

    orig = image.copy()

    # fit a rotated bounding box and get its four corners
    box = cv2.minAreaRect(c)
    box = cv2.cv.BoxPoints(box) if imutils.is_cv2() else cv2.boxPoints(box)
    box = np.array(box, dtype="int")
    # order the corners top-left, top-right, bottom-right, bottom-left
    box = perspective.order_points(box)

    cv2.drawContours(orig, [box.astype("int")], -1, (0, 255, 0), 3)
```
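The snippet ends after drawing the box, so the step that turns the ordered corners into a width and height is not shown. For reference, here is a minimal numpy-only sketch of one common way to do it (distance between the midpoints of opposite edges). It assumes the corners are ordered top-left, top-right, bottom-right, bottom-left, as `perspective.order_points` returns them; `box_dimensions` and `pixels_per_metric` are hypothetical names, the latter meaning pixels per real-world unit (for example, measured from a reference object of known width).

```python
import numpy as np


def box_dimensions(box, pixels_per_metric=None):
    """Return (width, height) of a box whose corners are ordered
    top-left, top-right, bottom-right, bottom-left.

    If pixels_per_metric (hypothetical calibration value) is given,
    the result is in real-world units; otherwise it is in pixels.
    """
    tl, tr, br, bl = np.asarray(box, dtype=float)
    # width: distance between the midpoints of the left and right edges
    left_mid = (tl + bl) / 2.0
    right_mid = (tr + br) / 2.0
    # height: distance between the midpoints of the top and bottom edges
    top_mid = (tl + tr) / 2.0
    bottom_mid = (bl + br) / 2.0
    width = float(np.linalg.norm(right_mid - left_mid))
    height = float(np.linalg.norm(bottom_mid - top_mid))
    if pixels_per_metric:
        width /= pixels_per_metric
        height /= pixels_per_metric
    return width, height


box = np.array([[10, 20], [110, 20], [110, 70], [10, 70]])
print(box_dimensions(box))        # (100.0, 50.0)
print(box_dimensions(box, 10.0))  # (10.0, 5.0)
```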

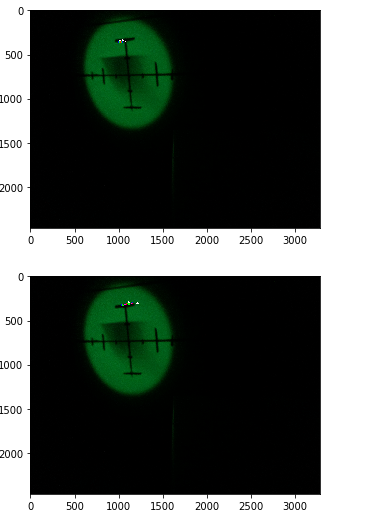

Note: the image above is the result of my code. I want to detect the green target and measure its dimensions. However, the boxes enclose only small details in the image, not the entire target. If I should post the entire code, let me know!

Thanks!