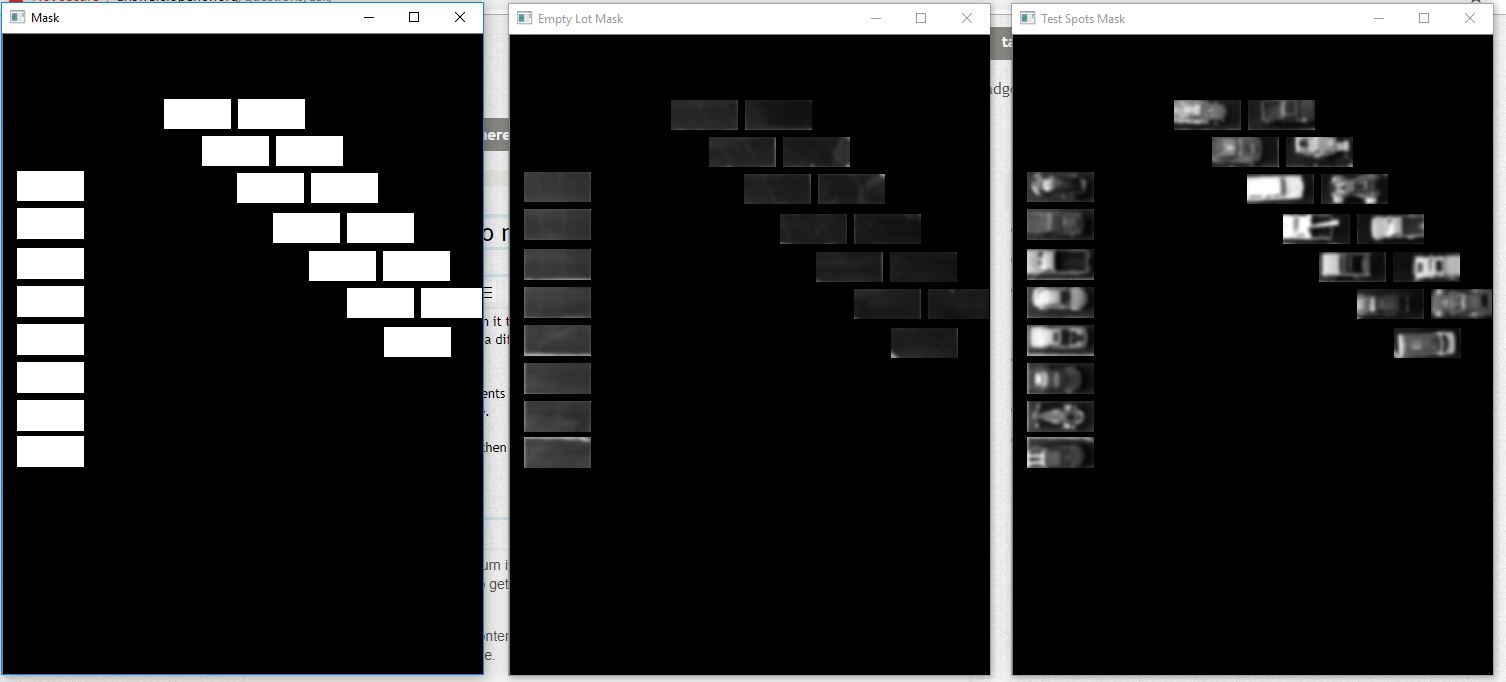

I have managed to load an image, convert it to grayscale, and create a mask over specific points of interest. I can compare the first image against multiple other images to get the differences, and I draw bounding boxes around the locations in the test image that differ.

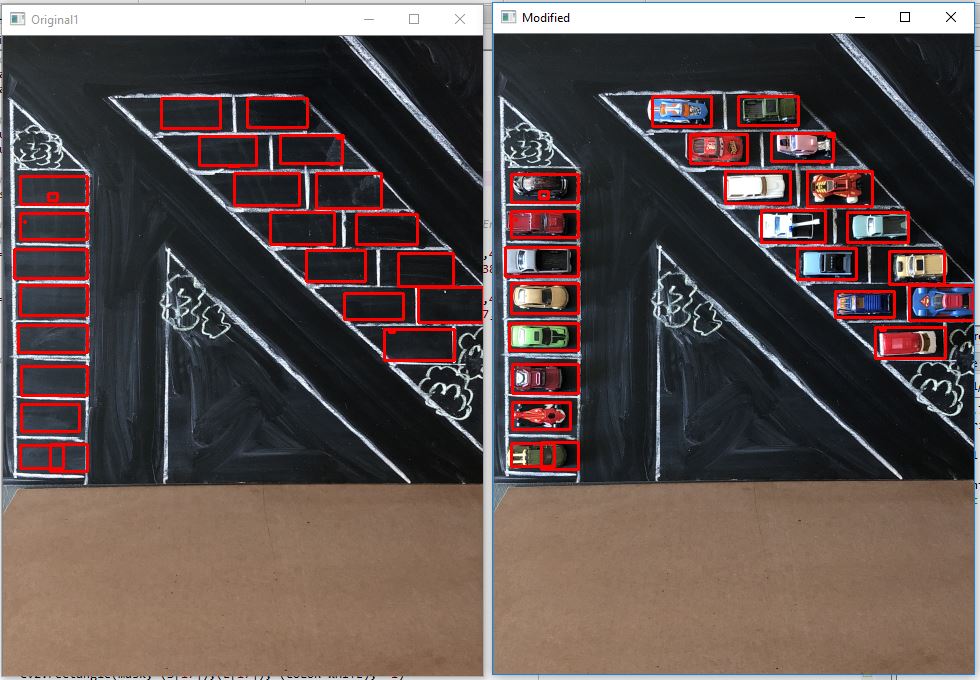

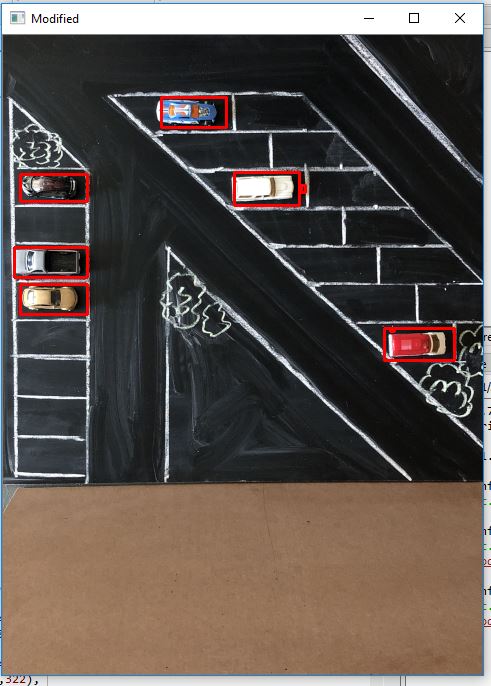

The bounding boxes are drawn around the contents that differ. Instead, I want each box to surround the quadrant (specific location) in which the difference was found.
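One way this could be approached is to snap each difference box to the predefined spot it overlaps most, and then draw that spot's rectangle instead of the contour's box. The following is only a minimal sketch under assumptions: the helper names `overlap_area` and `snap_to_spot` are hypothetical, the three spots are the first entries of the `S`/`E` lists in the code further down, and the detected boxes are made-up values in the `(x, y, w, h)` form that `cv2.boundingRect` returns.

```python
# Sketch: snap each difference box to the predefined spot it overlaps most.
# Rectangles are (x1, y1, x2, y2). The helper names are hypothetical and the
# detected boxes are made-up example values, not real results.

def overlap_area(a, b):
    """Area of the intersection of two (x1, y1, x2, y2) rectangles."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return w * h if w > 0 and h > 0 else 0


def snap_to_spot(box, spots):
    """Return the index of the spot with the largest overlap, or None."""
    best_index, best_area = None, 0
    for i, spot in enumerate(spots):
        area = overlap_area(box, spot)
        if area > best_area:
            best_index, best_area = i, area
    return best_index


# First three spots from the S/E lists, written as (x1, y1, x2, y2).
spots = [(14, 137, 80, 166), (14, 174, 80, 204), (14, 214, 80, 244)]

# Boxes in (x, y, w, h) form; convert to corner form before comparing.
detected = [(20, 140, 30, 20), (30, 200, 40, 30), (300, 10, 20, 20)]
snapped = []
for (x, y, w, h) in detected:
    snapped.append(snap_to_spot((x, y, x + w, y + h), spots))

print(snapped)  # index of the matching spot per box, None if outside all spots
```

With the index in hand, the rectangle drawn on the image would be `spots[index]` rather than the contour's own box. A box that straddles two spots goes to the one with the larger overlap, which may or may not be the desired behaviour.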

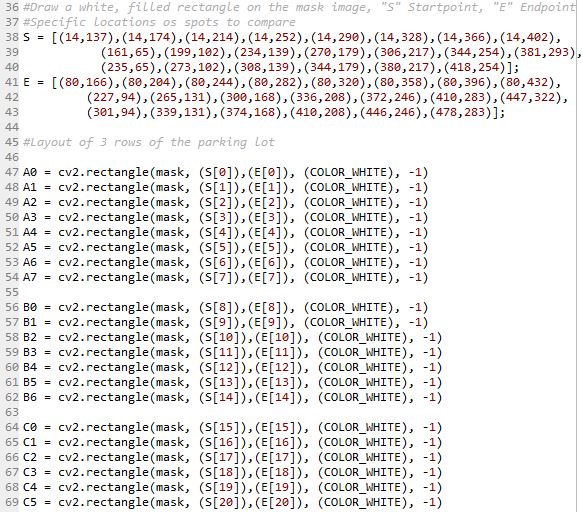

The locations are based on lines 36-69 of the code below.

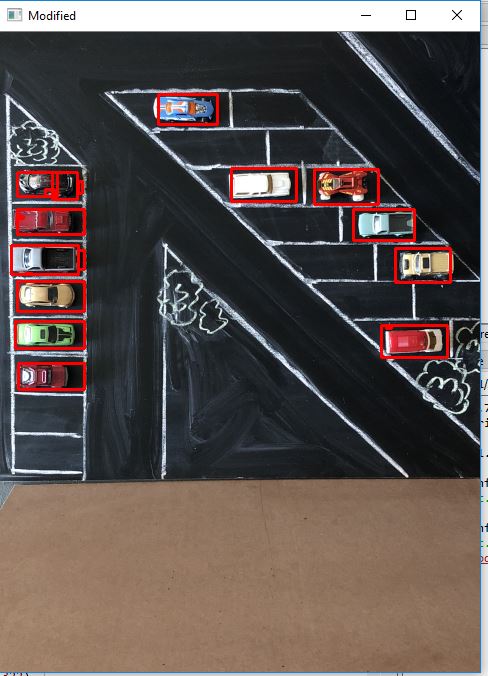

When I compare the Empty Lot image to the Test image, the following bounding boxes occur. They are drawn on the original picture for clarity.

I want to create if-then statements for each spot, based on the quadrants, that produce a 1 or 0 depending on whether there is a red bounding box or a green bounding box.
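A possible sketch of the per-spot 1/0 decision is to measure how much of each spot's rectangle is marked as changed in the thresholded difference image. This assumes that red means "occupied" and green means "empty", which is my reading rather than something fixed. The names `changed_fraction`, `spot_flags` and `MIN_FRACTION`, the 0.25 cut-off, and the tiny toy image are all illustrative assumptions. The loop works on a nested list as well as on the NumPy array returned by `cv2.threshold`.

```python
# Sketch: one 1/0 flag per spot from a binary difference image.
# `thresh` can be a NumPy array or a nested list. The toy image, the two
# spots and MIN_FRACTION are illustrative assumptions, not measured values.

COLOR_RED = (0, 0, 255)    # BGR order, as in colors.py
COLOR_GREEN = (0, 255, 0)

MIN_FRACTION = 0.25  # assumed share of changed pixels that counts as occupied


def changed_fraction(thresh, start, end):
    """Fraction of non-zero pixels inside the rectangle start=(x1, y1), end=(x2, y2)."""
    (x1, y1), (x2, y2) = start, end
    total = (x2 - x1) * (y2 - y1)
    changed = sum(1 for row in thresh[y1:y2] for value in row[x1:x2] if value)
    return changed / total


def spot_flags(thresh, spots):
    """Map each spot name to 1 (occupied) or 0 (empty)."""
    flags = {}
    for name, (start, end) in spots.items():
        occupied = changed_fraction(thresh, start, end) >= MIN_FRACTION
        flags[name] = 1 if occupied else 0
    return flags


# 4 x 8 toy image: changed pixels (255) only in the left half.
thresh = [[255, 255, 255, 0, 0, 0, 0, 0],
          [255, 255, 255, 0, 0, 0, 0, 0],
          [255, 255, 0, 0, 0, 0, 0, 0],
          [0, 0, 0, 0, 0, 0, 0, 0]]
spots = {"A0": ((0, 0), (4, 4)), "A1": ((4, 0), (8, 4))}

flags = spot_flags(thresh, spots)
# Assumed colour convention: red for occupied, green for empty.
colors = {name: COLOR_RED if flag else COLOR_GREEN for name, flag in flags.items()}
print(flags)
```

On real images, `cv2.countNonZero(thresh[y1:y2, x1:x2])` would likely be a faster way to count the changed pixels, and the cut-off would need tuning against the test pictures.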

I have included screenshots of the other two test images to show that I am getting pretty good comparisons to what is in the spaces.

The following is the code:

import cv2

import numpy as np

from colors import COLOR_WHITE, COLOR_RED #imports colors from colors.py

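# compare_ssim was removed from skimage.measure in scikit-image 0.18;
# structural_similarity from skimage.metrics is its replacement
from skimage.metrics import structural_similarity as compare_ssim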

import imutils

#Get image(Empty Lot) and image(Test Spots in Lot)

img = cv2.imread('IMG_0940.jpg')

img2 = cv2.imread('IMG_0941.jpg')

#Resize both images to same size

r = 480 / img.shape[1]

dim = (480, int(img.shape[0]*r))

small = cv2.resize(img,dim,interpolation = cv2.INTER_AREA)

r1 = 480 / img2.shape[1]

dim = (480, int(img2.shape[0]*r1))

small2 = cv2.resize(img2,dim,interpolation = cv2.INTER_AREA)

#Display both resized images

#cv2.imshow("Empty Lot",small)

#cv2.imshow("Test Spots in Lot",small2)

#cv2.waitKey(0)

#Convert to gray scale on img, img2

smallgray = cv2.cvtColor(small,cv2.COLOR_BGR2GRAY)

small2gray = cv2.cvtColor(small2,cv2.COLOR_BGR2GRAY)

#Apply GaussianBlur to both img and img2

blur = cv2.GaussianBlur(smallgray,(9,9),0)

blur2 = cv2.GaussianBlur(small2gray,(9,9),0)

#Create the basic black image

mask = np.zeros(blur.shape, dtype="uint8")

#Draw a white, filled rectangle on the mask image, "S" Startpoint, "E" Endpoint

#Specific locations of spots to compare

S = [(14,137),(14,174),(14,214),(14,252),(14,290),(14,328),(14,366),(14,402),
     (161,65),(199,102),(234,139),(270,179),(306,217),(344,254),(381,293),
     (235,65),(273,102),(308,139),(344,179),(380,217),(418,254)]

E = [(80,166),(80,204),(80,244),(80,282),(80,320),(80,358),(80,396),(80,432),
     (227,94),(265,131),(300,168),(336,208),(372,246),(410,283),(447,322),
     (301,94),(339,131),(374,168),(410,208),(446,246),(478,283)]

#Layout of 3 rows of the parking lot

A0 = cv2.rectangle(mask, (S[0]),(E[0]), (COLOR_WHITE), -1)

A1 = cv2.rectangle(mask, (S[1]),(E[1]), (COLOR_WHITE), -1)

A2 = cv2.rectangle(mask, (S[2]),(E[2]), (COLOR_WHITE), -1)

A3 = cv2.rectangle(mask, (S[3]),(E[3]), (COLOR_WHITE), -1)

A4 = cv2.rectangle(mask, (S[4]),(E[4]), (COLOR_WHITE), -1)

A5 = cv2.rectangle(mask, (S[5]),(E[5]), (COLOR_WHITE), -1)

A6 = cv2.rectangle(mask, (S[6]),(E[6]), (COLOR_WHITE), -1)

A7 = cv2.rectangle(mask, (S[7]),(E[7]), (COLOR_WHITE), -1)

B0 = cv2.rectangle(mask, (S[8]),(E[8]), (COLOR_WHITE), -1)

B1 = cv2.rectangle(mask, (S[9]),(E[9]), (COLOR_WHITE), -1)

B2 = cv2.rectangle(mask, (S[10]),(E[10]), (COLOR_WHITE), -1)

B3 = cv2.rectangle(mask, (S[11]),(E[11]), (COLOR_WHITE), -1)

B4 = cv2.rectangle(mask, (S[12]),(E[12]), (COLOR_WHITE), -1)

B5 = cv2.rectangle(mask, (S[13]),(E[13]), (COLOR_WHITE), -1)

B6 = cv2.rectangle(mask, (S[14]),(E[14]), (COLOR_WHITE), -1)

C0 = cv2.rectangle(mask, (S[15]),(E[15]), (COLOR_WHITE), -1)

C1 = cv2.rectangle(mask, (S[16]),(E[16]), (COLOR_WHITE), -1)

C2 = cv2.rectangle(mask, (S[17]),(E[17]), (COLOR_WHITE), -1)

C3 = cv2.rectangle(mask, (S[18]),(E[18]), (COLOR_WHITE), -1)

C4 = cv2.rectangle(mask, (S[19]),(E[19]), (COLOR_WHITE), -1)

C5 = cv2.rectangle(mask, (S[20]),(E[20]), (COLOR_WHITE), -1)

# Display constructed mask

cv2.imshow("Mask", mask)

cv2.waitKey(0)

# Mask image(Empty Lot) and image(Test Spots in Lot)

maskedImg = cv2.bitwise_and(blur,mask)

maskedImg2 = cv2.bitwise_and(blur2,mask)

#Display masked images

cv2.imshow("Empty Lot Mask", maskedImg)

cv2.imshow("Test Spots Mask", maskedImg2)

cv2.waitKey(0)

# compute the Structural Similarity Index (SSIM) between the two

# images, ensuring that the difference image is returned

(score, diff) = compare_ssim(maskedImg, maskedImg2, full=True)

diff = (diff * 255).astype("uint8")

#print("SSIM: {}".format(score))

#Threshold the difference image, followed by finding contours to

# obtain the regions of the two input images that differ

thresh = cv2.threshold(diff, 0, 255,
                       cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]

cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL,
                        cv2.CHAIN_APPROX_SIMPLE)

cnts = imutils.grab_contours(cnts)

# loop over the contours

for c in cnts:
    # compute the bounding box of the contour and then draw the
    # bounding box on both input images to represent where the two
    # images differ
    (x, y, w, h) = cv2.boundingRect(c)
    cv2.rectangle(small, (x, y), (x + w, y + h), (COLOR_RED), 2)
    cv2.rectangle(small2, (x, y), (x + w, y + h), (COLOR_RED), 2)

# Display the output images

cv2.imshow("Original1", small)

cv2.imshow("Modified", small2)

#Display the Diff and Threshold images

#cv2.imshow("Diff", diff)

#cv2.imshow("Thresh", thresh)

cv2.waitKey(0)

cv2.destroyAllWindows() #Closes all the windows
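Since the 21 rectangle calls in the script pair `S[i]` with `E[i]` in the order A0-A7, B0-B6, C0-C5, the spots could also be kept in a dictionary keyed by name, which makes a per-spot check easier to write later. This is only a sketch: the `ROWS`, `labels` and `spots` names are hypothetical, and the drawing call is left as a comment so the block runs without OpenCV.

```python
# Sketch: pair S[i] with E[i] and name the spots A0-A7, B0-B6, C0-C5,
# following the order of the rectangle calls in the script.
# ROWS, labels and spots are hypothetical names introduced for illustration.

S = [(14, 137), (14, 174), (14, 214), (14, 252), (14, 290), (14, 328), (14, 366), (14, 402),
     (161, 65), (199, 102), (234, 139), (270, 179), (306, 217), (344, 254), (381, 293),
     (235, 65), (273, 102), (308, 139), (344, 179), (380, 217), (418, 254)]

E = [(80, 166), (80, 204), (80, 244), (80, 282), (80, 320), (80, 358), (80, 396), (80, 432),
     (227, 94), (265, 131), (300, 168), (336, 208), (372, 246), (410, 283), (447, 322),
     (301, 94), (339, 131), (374, 168), (410, 208), (446, 246), (478, 283)]

ROWS = [("A", 8), ("B", 7), ("C", 6)]  # row letter and number of spots in that row

# Build "A0", "A1", ... "C5" in the same order as the S and E lists.
labels = [f"{row}{i}" for row, count in ROWS for i in range(count)]
spots = dict(zip(labels, zip(S, E)))

for name, (start, end) in spots.items():
    # With OpenCV available, each rectangle could be drawn on the mask here:
    # cv2.rectangle(mask, start, end, 255, -1)
    print(name, start, end)
```

Keeping the names next to the coordinates means the same dictionary can drive both the mask drawing and whatever per-spot result is produced afterwards.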

This is the colors.py needed to run the code:

COLOR_BLACK = (0, 0, 0)

COLOR_RED = (0, 0, 255)

COLOR_GREEN = (0, 255, 0)

COLOR_BLUE = (255, 0, 0)

COLOR_WHITE = (255, 255, 255)

Any help would be greatly appreciated.

The pictures used would not load here, so I put them on a website:

Empty Lot: http://i65.tinypic.com/24wzv47.jpg

6 Cars: http://i65.tinypic.com/24wzv47.jpg

12 Cars: http://i65.tinypic.com/2a8px11.jpg

Full Lot: http://i68.tinypic.com/14tsbyd.jpg