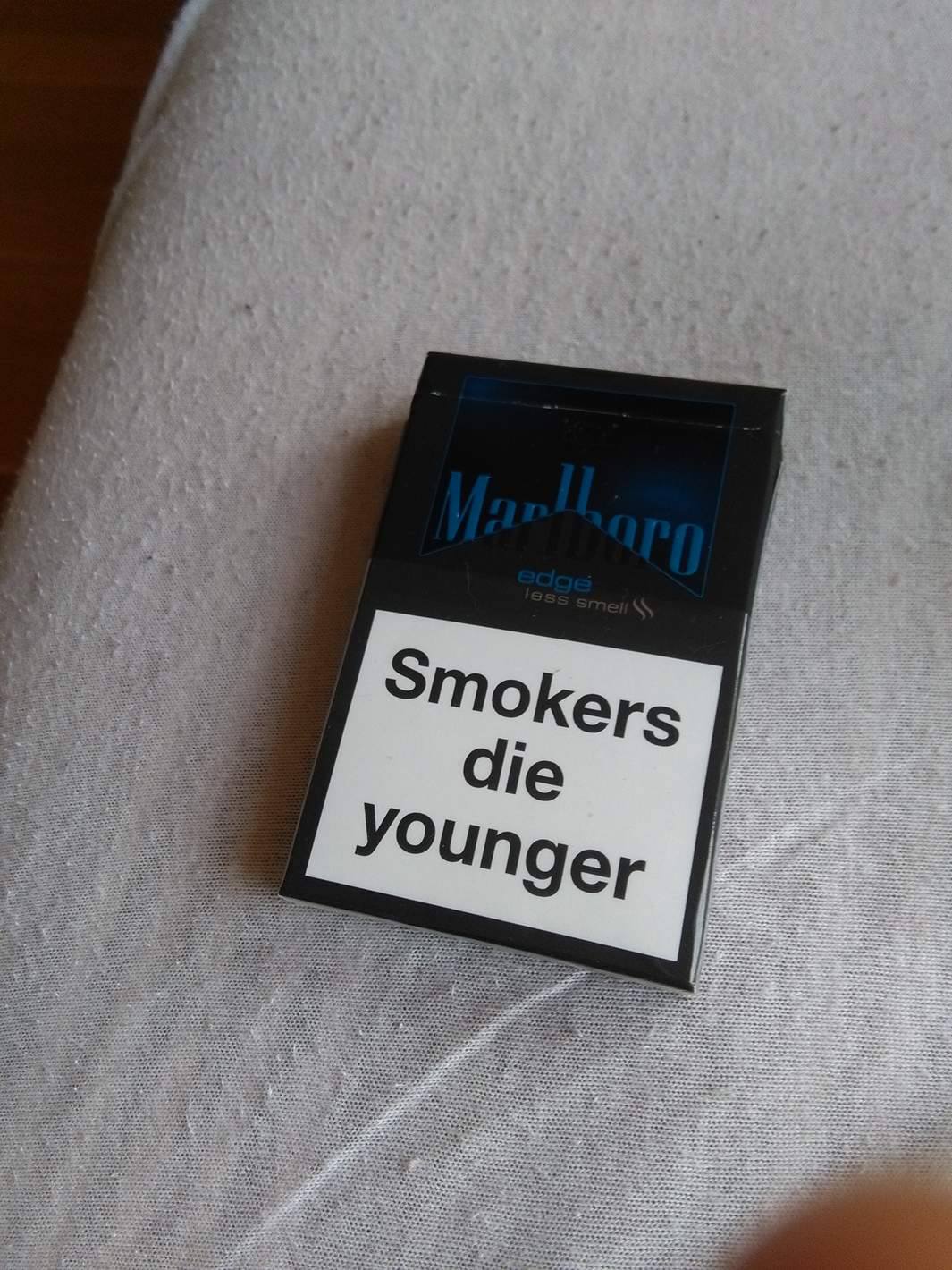

I'm using the image above to extract the Marlboro picture, but I still don't get good results.

Here is my full code:

```cpp
#include <opencv2/opencv.hpp>
#include "opencv2/imgproc.hpp"
#include <vector>
#include "opencv2/imgcodecs.hpp"
#include <numeric>
#include "opencv2/highgui.hpp"
#include <iostream>

using namespace cv;
using namespace std;

// Sorts 4 corners into the order top-left, top-right, bottom-right, bottom-left.
// If the points cannot be split into two "top" and two "bottom" points,
// the vector is left empty.
void sortCorners(std::vector<cv::Point2f>& corners)
{
    std::vector<cv::Point2f> top, bot;
    cv::Point2f center;

    // Get mass center
    for (size_t i = 0; i < corners.size(); i++)
        center += corners[i];
    center *= (1. / corners.size());

    // Points above the center are "top", the rest are "bottom"
    for (size_t i = 0; i < corners.size(); i++)
    {
        if (corners[i].y < center.y)
            top.push_back(corners[i]);
        else
            bot.push_back(corners[i]);
    }
    corners.clear();

    if (top.size() == 2 && bot.size() == 2) {
        cv::Point2f tl = top[0].x > top[1].x ? top[1] : top[0];
        cv::Point2f tr = top[0].x > top[1].x ? top[0] : top[1];
        cv::Point2f bl = bot[0].x > bot[1].x ? bot[1] : bot[0];
        cv::Point2f br = bot[0].x > bot[1].x ? bot[0] : bot[1];

        corners.push_back(tl);
        corners.push_back(tr);
        corners.push_back(br);
        corners.push_back(bl);
    }
}
```

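```cpp
// Returns the cosine of the angle at pt0 between the vectors pt0->pt1 and pt0->pt2.
// The 1e-10 term guards against division by zero for degenerate points.
double angle(cv::Point pt1, cv::Point pt2, cv::Point pt0) {
    double dx1 = pt1.x - pt0.x;
    double dy1 = pt1.y - pt0.y;
    double dx2 = pt2.x - pt0.x;
    double dy2 = pt2.y - pt0.y;
    return (dx1*dx2 + dy1*dy2) / sqrt((dx1*dx1 + dy1*dy1)*(dx2*dx2 + dy2*dy2) + 1e-10);
}
```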
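For reference, `angle()` computes the cosine of the corner angle θ₀ at `pt0` from the dot product of the two edge vectors, and `find_squares()` keeps a quadrilateral only when every checked corner satisfies |cos θ| < 0.3:

$$
\cos\theta_0 = \frac{(x_1 - x_0)(x_2 - x_0) + (y_1 - y_0)(y_2 - y_0)}{\sqrt{\big[(x_1 - x_0)^2 + (y_1 - y_0)^2\big]\big[(x_2 - x_0)^2 + (y_2 - y_0)^2\big] + 10^{-10}}}
$$

$$
|\cos\theta| < 0.3 \iff \arccos(0.3) < \theta < 180^\circ - \arccos(0.3)
$$

Since arccos(0.3) ≈ 72.5°, the filter accepts corners between roughly 72.5° and 107.5°.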

```cpp
void find_squares(Mat& image, vector<vector<Point> >& squares)
{
    // blur will enhance edge detection
    Mat dst;
    medianBlur(image, dst, 9);

    Mat gray0(dst.size(), CV_8U), gray;
    vector<vector<Point> > contours;

    // find squares in every color plane of the image
    for (int c = 0; c < 3; c++)
    {
        int ch[] = { c, 0 };
        mixChannels(&dst, 1, &gray0, 1, ch, 1);

        // try several threshold levels
        // (with threshold_level = 2, the l = 1 pass keeps only pixels equal to 255)
        const int threshold_level = 2;
        for (int l = 0; l < threshold_level; l++)
        {
            // Use Canny instead of zero threshold level!
            // Canny helps to catch squares with gradient shading
            if (l == 0)
            {
                Canny(gray0, gray, 10, 20, 3);
                // Dilate helps to remove potential holes between edge segments
                dilate(gray, gray, Mat(), Point(-1, -1));
            }
            else
            {
                gray = gray0 >= (l + 1) * 255 / threshold_level;
            }

            // Find contours and store them in a list
            findContours(gray, contours, RETR_LIST, CHAIN_APPROX_SIMPLE);

            // Test contours
            vector<Point> approx;
            for (size_t i = 0; i < contours.size(); i++)
            {
                // approximate contour with accuracy proportional
                // to the contour perimeter
                approxPolyDP(contours[i], approx, arcLength(contours[i], true) * 0.02, true);

                // Note: absolute value of an area is used because
                // area may be positive or negative - in accordance with the
                // contour orientation
                if (approx.size() == 4 &&
                    fabs(contourArea(approx)) > 1000 &&
                    isContourConvex(approx))
                {
                    // checks the corners at approx[1], approx[2] and approx[3]
                    double maxCosine = 0;
                    for (int j = 2; j < 5; j++)
                    {
                        double cosine = fabs(angle(approx[j % 4], approx[j - 2], approx[j - 1]));
                        maxCosine = MAX(maxCosine, cosine);
                    }

                    // keep quadrilaterals whose checked corners are close to 90 degrees
                    if (maxCosine < 0.3)
                        squares.push_back(approx);
                }
            }
        }
    }
}
```

```cpp
// Draws each detected square (blue), its upright bounding box (green) and
// its minimum-area rotated rectangle (red) onto image. Colors are in BGR order.
cv::Mat debugSquares(std::vector<std::vector<cv::Point> > squares, cv::Mat image)
{
    for (size_t i = 0; i < squares.size(); i++) {
        // draw contour
        cv::drawContours(image, squares, (int)i, cv::Scalar(255, 0, 0), 1, 8, std::vector<cv::Vec4i>(), 0, cv::Point());

        // draw bounding rect
        cv::Rect rect = boundingRect(squares[i]);
        cv::rectangle(image, rect.tl(), rect.br(), cv::Scalar(0, 255, 0), 2, 8, 0);

        // draw rotated rect
        cv::RotatedRect minRect = minAreaRect(squares[i]);
        cv::Point2f rect_points[4];
        minRect.points(rect_points);
        for (int j = 0; j < 4; j++) {
            cv::line(image, rect_points[j], rect_points[(j + 1) % 4], cv::Scalar(0, 0, 255), 1, 8); // red
        }
    }
    return image;
}
```

```cpp
int main(int, char**)
{
    double largest_area = 0;
    int largest_contour_index = 0;
    cv::Rect bounding_rect;
    RotatedRect minRect;

    Mat src = imread("src.jpg");
    // Mat src = imread("15016889798859437.jpg"); // alternative test image
    if (src.empty()) {
        cerr << "Could not read the input image" << endl;
        return -1;
    }

    Mat thr(src.rows, src.cols, CV_8UC1);
    Mat greyMat;
    cvtColor(src, greyMat, COLOR_BGR2GRAY);

    // 2 - convert image from gray scale to black and white using adaptive thresholding
    Mat blackAndWhiteMat;
    adaptiveThreshold(greyMat, blackAndWhiteMat, 255, ADAPTIVE_THRESH_MEAN_C, THRESH_BINARY_INV, 13, 1);

    Mat dst(src.rows, src.cols, CV_8UC1, Scalar::all(0));
    Mat gray(src.rows, src.cols, CV_8UC1);
    Mat blur;

    cvtColor(src, thr, COLOR_BGR2GRAY); // Convert to gray
    GaussianBlur(thr, thr, Size(1, 1), 0); // note: a 1x1 kernel leaves the image unchanged
    threshold(thr, thr, 110, 255, THRESH_BINARY);
    Canny(thr, gray, 30, 125);

    vector<vector<cv::Point>> contours; // Vector for storing contour
    vector<Vec4i> hierarchy;
    findContours(gray, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE); // Find the contours in the image

    // find_squares() appends its candidates to contours, so from here on
    // contours.size() can be larger than hierarchy.size()
    find_squares(src, contours);

    for (size_t i = 0; i < contours.size(); i++) // iterate through each contour.
    {
        double a = contourArea(contours[i], false); // Find the area of contour
        if (a > largest_area) {
            largest_area = a;
            largest_contour_index = (int)i; // Store the index of largest contour
            bounding_rect = boundingRect(contours[i]); // Find the bounding rectangle for biggest contour
            minRect = minAreaRect(contours[i]);
        }
    }

    std::vector<cv::Point2f> corners;
    Point2f corner_points[4];
    minRect.points(corner_points);
    corners.push_back(corner_points[0]);
    corners.push_back(corner_points[1]);
    corners.push_back(corner_points[2]);
    corners.push_back(corner_points[3]);
    sortCorners(corners);
    if (corners.size() != 4) { // sortCorners() empties the vector when it cannot order the points
        cerr << "Could not order the corners of the largest contour" << endl;
        return -1;
    }

    // rows = length of the right edge, cols = length of the bottom edge
    cv::Mat quad = cv::Mat::zeros((int)cv::norm(corners[1] - corners[2]), (int)cv::norm(corners[2] - corners[3]), CV_8UC3);
    std::vector<cv::Point2f> quad_pts;
    quad_pts.push_back(cv::Point2f(0, 0));
    quad_pts.push_back(cv::Point2f(quad.cols, 0));
    quad_pts.push_back(cv::Point2f(quad.cols, quad.rows));
    quad_pts.push_back(cv::Point2f(0, quad.rows));
    cv::Mat transmtx = cv::getPerspectiveTransform(corners, quad_pts);
    cv::warpPerspective(src, quad, transmtx, quad.size());
    imshow("quad", quad);

    Scalar color(255, 255, 255);
    // Draw the largest contour using previously stored index. The hierarchy is not
    // passed because it no longer matches contours after find_squares().
    drawContours(dst, contours, largest_contour_index, color, FILLED, 8);
    rectangle(src, bounding_rect, Scalar(0, 255, 0), 1, 8, 0);
    imshow("Image", src);

    cv::Mat result; // segmentation result (4 possible values)
    cv::Mat bgModel, fgModel; // the models (internally used)

    // GrabCut segmentation
    cv::grabCut(src,          // input image
        result,               // segmentation result
        bounding_rect,        // rectangle containing foreground
        bgModel, fgModel,     // models
        1,                    // number of iterations
        cv::GC_INIT_WITH_RECT); // use rectangle

    // Get the pixels marked as likely foreground
    cv::compare(result, cv::GC_PR_FGD, result, cv::CMP_EQ);

    // Generate output image
    cv::Mat foreground(src.size(), CV_8UC3, cv::Scalar(255, 255, 255));
    src.copyTo(foreground, result); // bg pixels not copied

    // draw rectangle on original image
    cv::rectangle(src, bounding_rect, cv::Scalar(255, 255, 255), 1);
    resize(src, src, Size(), 0.5, 0.5);
    cv::namedWindow("Image");
    cv::imshow("Image", src);

    // display result
    cv::namedWindow("Segmented Image");
    resize(foreground, foreground, Size(), 0.5, 0.5);
    cv::imshow("Segmented Image", foreground);

    waitKey();
    return 0;
}
```
