Hello everyone,

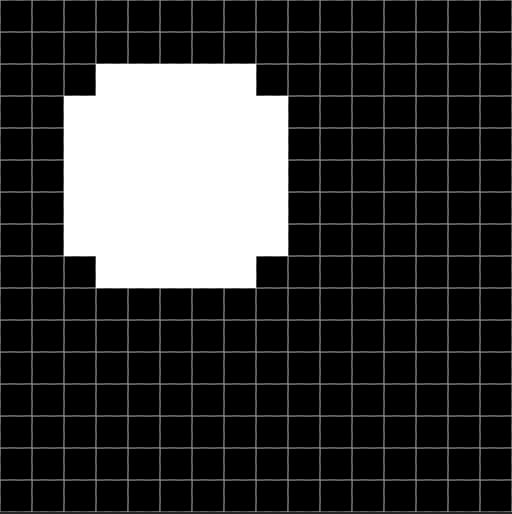

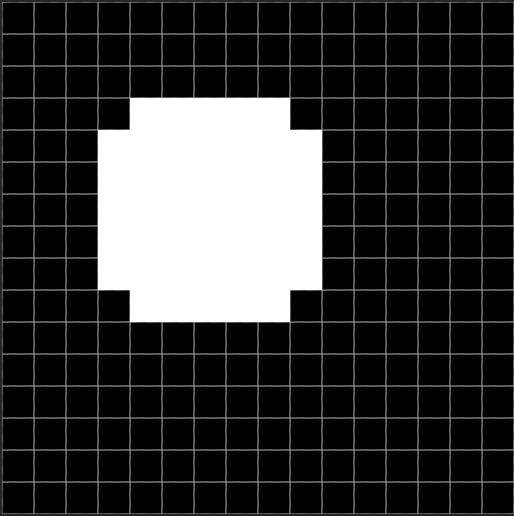

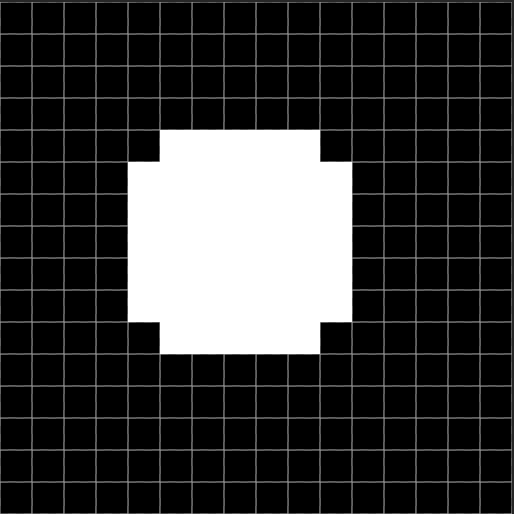

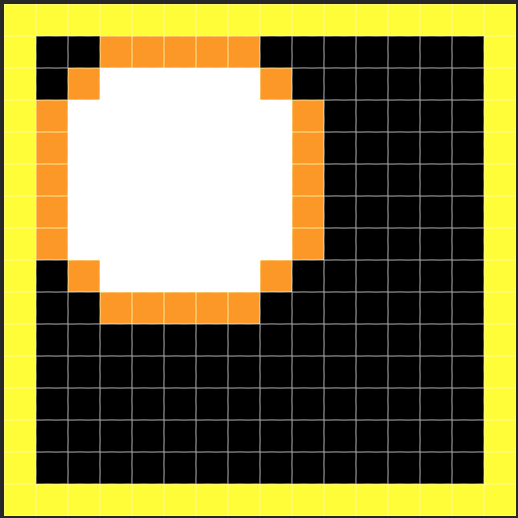

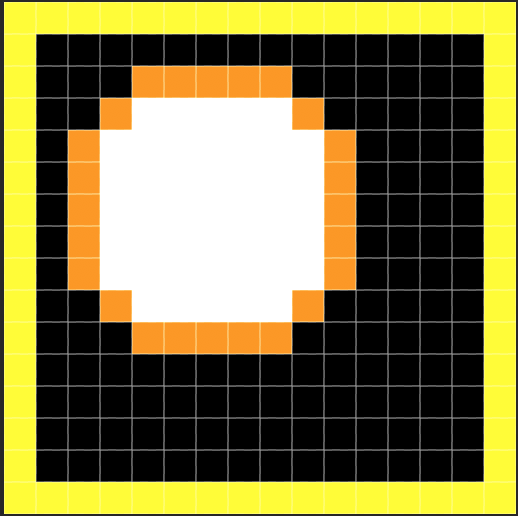

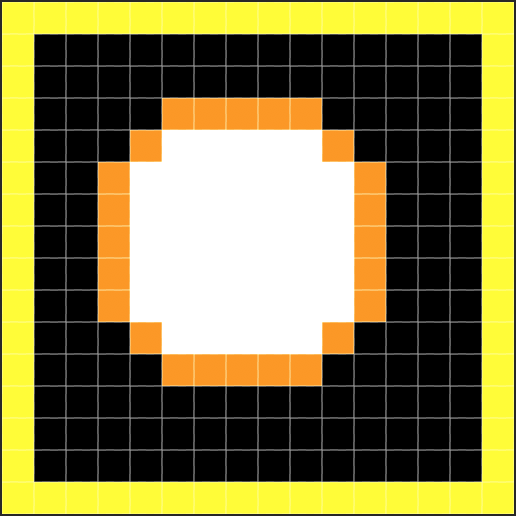

I'm trying to detect the center of a blob. To keep things simple, I have a 16 x 16 pixel image: a black background with a 7 x 7 pixel white blob that is chipped at its bottom-right corner (see the attached images). When I run the SimpleBlob detection algorithm on image 1, it returns the center (5, 5), as expected. When I run the same step on the other two images, which ought to return (6, 6) and (7, 7) respectively, it instead returns (6.7307, 6.7307) for image 2 and (7.243, 7.243) for image 3. Something is clearly wrong, because all three images have the same image size and blob size and differ only by a 1 x 1 pixel offset of the blob.

Stepping into the detection code, I noticed that for image 1, where the detection succeeds, two centers are found while iterating over the contours. The first is the actual center (5, 5). The second is (7.5, 7.5), which corresponds to the bottom-right corner of the blob. That corner is chipped off, so it is not part of the white blob, and this second point is filtered out by color because it doesn't match blobColor, which seems correct.

For image 2, the detection also finds two centers while iterating over the resulting contours. The first is at (6, 6), as expected. The second is at (7.5, 7.5), which is odd: since the bottom-right corner in image 1 is at (7.5, 7.5), I was expecting (8.5, 8.5) for image 2. That point would have been filtered out by blobColor, leaving (6, 6) as the only center. Because I end up with two centers instead, I believe both feed into how the final center is calculated, which ends up as the wrong value (6.7307, 6.7307).

I looked closely at how the location (center) is calculated and realised it's highly dependent on the moments. I'm not too familiar with the moments calculation and couldn't quite get my head around it, but somewhere down the line it seems to produce the wrong value. Does anyone know why this could be happening in my situation? I'd appreciate it if someone could take a look.
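For reference, here is my understanding of the textbook moments-based center: the centroid is (m10 / m00, m01 / m00), where m00 counts the white pixels and m10 and m01 sum their x and y coordinates. The snippet below is only a rough pure-Python sketch of that formula, not the detector's actual contour-based code. `make_image` and `centroid` are hypothetical helper names, and the blob position (top-left corner at (2, 2) for image 1) and the 2 x 2 chip size are assumptions chosen to be consistent with the centers reported above.

```python
def make_image(offset, size=16, blob=7, chip=0):
    """Build a black size x size image with a white blob x blob square.

    The square's top-left corner is at (offset, offset). The bottom-right
    chip x chip pixels of the square are left black (assumed chip shape).
    """
    img = [[0] * size for _ in range(size)]
    for y in range(offset, offset + blob):
        for x in range(offset, offset + blob):
            in_chip = (x >= offset + blob - chip) and (y >= offset + blob - chip)
            if not in_chip:
                img[y][x] = 255
    return img


def centroid(img):
    """Return (m10 / m00, m01 / m00) computed over the non-zero pixels."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(img):
        for x, value in enumerate(row):
            if value:
                m00 += 1   # zeroth moment: number of white pixels
                m10 += x   # first moment in x
                m01 += y   # first moment in y
    return (m10 / m00, m01 / m00)


if __name__ == "__main__":
    # Intact blob at three offsets, then the assumed chipped variant.
    for offset in (2, 3, 4):
        print(offset, centroid(make_image(offset)), centroid(make_image(offset, chip=2)))
```

With this formula, moving the blob by one pixel moves the centroid by exactly one pixel, chipped or not, which is why I expected (6, 6) and (7, 7) for images 2 and 3.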

P.S. The images I'm using are 8-bit .png files with no transparency (for what it's worth). I have also attached images that show the corresponding contours for the 3 images used in my test. The contours are based on the results I got while debugging, and I highlighted them in the images to give a better idea of what's happening.