Hi there, I'm trying to convert a depth map to a normal map. I found this answer: link, but I must be doing something wrong. Here is my code:

    import cv2
    import matplotlib.pyplot as plt
    import numpy as np

    # grayImg is the grayscale depth image, fileName the output file name
    h, w = np.shape(grayImg)
    normals = np.zeros((h, w, 3))

    for x in range(1, h-1):
        for y in range(1, w-1):
            # central differences of the depth around pixel (x, y)
            dzdx = (float(depthValue(grayImg[x+1, y])) - float(depthValue(grayImg[x-1, y]))) / 2.0
            dzdy = (float(depthValue(grayImg[x, y+1])) - float(depthValue(grayImg[x, y-1]))) / 2.0

            d = (-dzdx, -dzdy, 1.0)
            n = normalizeVector(d)

            # map each component from [-1, 1] to [0, 1]
            normals[x,y] = n * 0.5 + 0.5

    normals *= 255
    normals = normals.astype('uint8')

    plt.imshow(normals)
    plt.show()

    normals = cv2.cvtColor(normals, cv2.COLOR_BGR2RGB)
    cv2.imwrite("images/N_{0}".format(fileName), normals)

The function depthValue() does nothing more than return the grayscale value of a pixel (I tried converting every pixel value to a non-linear value as described in this link, but the result was even worse).
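To make the snippet above self-contained, here is a minimal sketch of depthValue() as described, assuming it is a plain pass-through with no scaling or non-linear mapping. The parameter name `value` is only illustrative.

```python
def depthValue(value):
    # Assumed pass-through: the grayscale value is used directly as the depth.
    return value
```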

Here is normalizeVector():

    def normalizeVector(v):
        length = np.sqrt(v[0]**2 + v[1]**2 + v[2]**2)
        v = v/length
        return v
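For anyone who wants to reproduce this without the original image, here is a loop-free sketch of what should be the same computation, run on a synthetic depth map. The names `depth_to_normals` and `ramp` are made up for illustration, and the sketch assumes depthValue() is a pass-through, so it is skipped. It keeps the same indexing as the loop above rather than attempting a fix.

```python
import numpy as np


def depth_to_normals(gray_img):
    """Loop-free sketch of the same central-difference computation."""
    # depthValue() is assumed to be a pass-through, so it is not called here.
    depth = gray_img.astype(np.float64)
    h, w = depth.shape
    normals = np.zeros((h, w, 3))

    # Same indexing as the loop: "dzdx" differences along axis 0 (rows),
    # "dzdy" along axis 1 (columns). Border pixels are left at zero.
    dzdx = (depth[2:, 1:-1] - depth[:-2, 1:-1]) / 2.0
    dzdy = (depth[1:-1, 2:] - depth[1:-1, :-2]) / 2.0

    # Build (-dzdx, -dzdy, 1) per pixel and normalize to unit length.
    d = np.stack((-dzdx, -dzdy, np.ones_like(dzdx)), axis=-1)
    n = d / np.linalg.norm(d, axis=-1, keepdims=True)

    # Map each component from [-1, 1] to [0, 1], then to 8-bit.
    normals[1:-1, 1:-1] = n * 0.5 + 0.5
    return (normals * 255).astype(np.uint8)


# Synthetic depth map (made up for illustration): brightness grows by 2 per row.
ramp = np.tile((np.arange(8, dtype=np.uint8) * 2)[:, None], (1, 8))
normals = depth_to_normals(ramp)
print(normals[4, 4])
```

On this ramp every interior pixel gets the same value, which makes it easy to check the channel order and how strongly the gradient shows up in the output.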

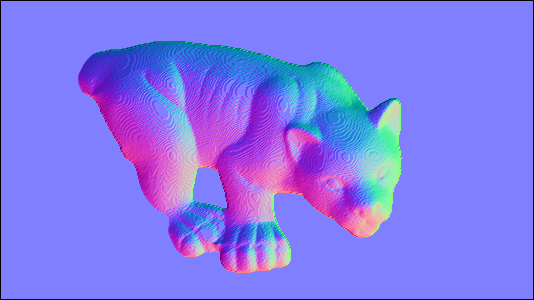

Here are my results:

The colors are in the wrong order, and they are much paler than in the original output image from the link above. Could you tell me what I am doing wrong?