Why might detectMultiScale() return so many detections outside the object of interest?

I ran opencv_traincascade on my PC for a whole day to train a 2€ coin detector, using more than 6000 positive images similar to the following:

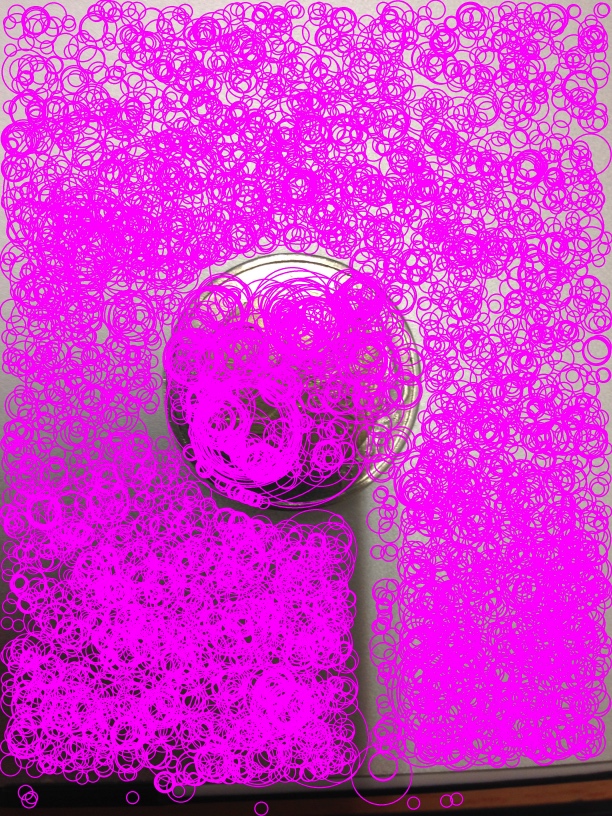

Now I have run a simple OpenCV program to test the resulting cascade.xml file. The final result is very disappointing:

There are many detections on the coin, but there are also many others on the background. Could the problem be the positive images I used for training? Or am I calling detectMultiScale() with the wrong parameters?

Here's my code:

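```cpp
#include <cstdio>
#include <vector>
#include "opencv2/opencv.hpp"

using namespace cv;

int main(int, char**) {
    // IMREAD_COLOR / COLOR_BGR2GRAY replace the old C constants
    // CV_LOAD_IMAGE_COLOR / CV_BGR2GRAY, which were removed in OpenCV 4.
    Mat src = imread("2c.jpg", IMREAD_COLOR);
    if (src.empty()) {
        printf("--(!)Error loading image\n");
        return -1;
    }

    CascadeClassifier euro2_cascade;
    if (!euro2_cascade.load("/Users/lory/Desktop/cascade.xml")) {
        printf("--(!)Error loading\n");
        return -1;
    }

    Mat src_gray;
    cvtColor(src, src_gray, COLOR_BGR2GRAY);
    equalizeHist(src_gray, src_gray);

    // scaleFactor = 1.1, minNeighbors = 0, flags = 0, minSize 10x10, maxSize 2000x2000.
    // With minNeighbors = 0, overlapping candidate windows are not grouped,
    // so every raw detection is returned.
    std::vector<Rect> money;
    euro2_cascade.detectMultiScale(src_gray, money, 1.1, 0, 0, Size(10, 10), Size(2000, 2000));

    // Draw an ellipse inscribed in each detected rectangle.
    for (size_t i = 0; i < money.size(); i++) {
        Point center(money[i].x + money[i].width / 2, money[i].y + money[i].height / 2);
        ellipse(src, center, Size(money[i].width / 2, money[i].height / 2),
                0, 0, 360, Scalar(255, 0, 255), 4, 8, 0);
    }

    //namedWindow( "Display window", WINDOW_AUTOSIZE );
    imwrite("result.jpg", src);
    return 0;
}
```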

I have also tried to reduce the number of neighbours, but the effect is the same, just with far fewer points...
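To make the role of minNeighbors concrete, here is a simplified, self-contained C++ sketch of the idea behind it. It is not OpenCV's actual code: Box, groupBoxes and the 0.2 tolerance are my own assumptions, loosely modelled on cv::groupRectangles (which, for example, also discards small rectangles nested inside larger ones). The detector scans many windows at many scales, so one real coin produces a cluster of overlapping hits. With minNeighbors = 0, as in the call above, no grouping is done and every raw window is returned, which is why the image fills up with ellipses; a larger value keeps only clusters with more than minNeighbors members and merges each into a single rectangle.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdlib>
#include <numeric>
#include <vector>

// Hypothetical stand-in for cv::Rect so the sketch needs no OpenCV headers.
struct Box {
    int x, y, width, height;
};

// Two boxes are "neighbours" when all four edges lie within delta of each
// other; delta grows with box size (modelled on OpenCV's similarity test).
static bool similar(const Box& a, const Box& b, double eps) {
    double delta = eps * (std::min(a.width, b.width) + std::min(a.height, b.height)) * 0.5;
    return std::abs(a.x - b.x) <= delta &&
           std::abs(a.y - b.y) <= delta &&
           std::abs(a.x + a.width - b.x - b.width) <= delta &&
           std::abs(a.y + a.height - b.y - b.height) <= delta;
}

// Union-find root lookup with path halving.
static int findRoot(std::vector<int>& parent, int i) {
    while (parent[i] != i) {
        parent[i] = parent[parent[i]];
        i = parent[i];
    }
    return i;
}

// Simplified grouping: cluster similar boxes, average each cluster, and keep
// only clusters with more than minNeighbors members. minNeighbors <= 0
// returns the raw input unchanged, i.e. every candidate window.
std::vector<Box> groupBoxes(const std::vector<Box>& raw, int minNeighbors, double eps = 0.2) {
    assert(eps > 0);
    if (minNeighbors <= 0) return raw;

    const int n = static_cast<int>(raw.size());
    std::vector<int> parent(n);
    std::iota(parent.begin(), parent.end(), 0);
    for (int i = 0; i < n; ++i) {
        for (int j = i + 1; j < n; ++j) {
            if (!similar(raw[i], raw[j], eps)) continue;
            int ri = findRoot(parent, i), rj = findRoot(parent, j);
            if (ri != rj) parent[ri] = rj;
        }
    }

    // Per-cluster sums and member counts, indexed by root.
    std::vector<long> sx(n, 0), sy(n, 0), sw(n, 0), sh(n, 0);
    std::vector<int> count(n, 0);
    for (int i = 0; i < n; ++i) {
        int r = findRoot(parent, i);
        sx[r] += raw[i].x;
        sy[r] += raw[i].y;
        sw[r] += raw[i].width;
        sh[r] += raw[i].height;
        ++count[r];
    }

    std::vector<Box> grouped;
    for (int r = 0; r < n; ++r) {
        if (count[r] > minNeighbors) {
            grouped.push_back({static_cast<int>(sx[r] / count[r]),
                               static_cast<int>(sy[r] / count[r]),
                               static_cast<int>(sw[r] / count[r]),
                               static_cast<int>(sh[r] / count[r])});
        }
    }
    return grouped;
}
```

In the program above this corresponds to passing a positive fourth argument to detectMultiScale() (OpenCV's default is 3) instead of 0. Note that grouping only merges clusters: if the background itself produces dense clusters of hits, those survive too, so a higher minNeighbors cannot fully compensate for a weak cascade.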

Could the problem be that my positive images have those 4 background corners around the coin? I generated the PNG images with GIMP from a video I shot of the coin, so I don't know why opencv_createsamples adds those 4 corners.

UPDATE

I also tried creating an LBP cascade.xml, but the result is quite strange: if I run the above OpenCV program on an image that was used for training, the detection is good:

However, if I use another image (for example, one taken with my smartphone), nothing is detected. What does this mean? Did I make a mistake during training?

Maybe a stupid question: do all your positive images look like the image with the coin and the trees?

If so, to detect coins you should use positive images that contain only coins. Alternatively, you can provide the coordinates of the coins in each image in the positive image list (if you have not already done that).
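For reference, the positive description file that opencv_createsamples accepts through its -info option lists, on each line, an image path, the number of objects in that image, and one `x y width height` rectangle per object (for example `img/img1.jpg 1 140 100 45 45`); the tool then crops those regions out of the full images. The C++ sketch below only reads and writes that line format. InfoEntry, parseInfoLine and formatInfoLine are hypothetical names, and the sketch assumes image paths contain no spaces.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper types: one annotated rectangle and one description-file line.
struct ObjectBox {
    int x, y, width, height;
};

struct InfoEntry {
    std::string imagePath;
    std::vector<ObjectBox> objects;
};

// Parses one line of the form:
//   <image path> <object count> <x y w h> [<x y w h> ...]
// Returns false for malformed lines. Assumes paths contain no spaces.
bool parseInfoLine(const std::string& line, InfoEntry& out) {
    std::istringstream in(line);
    int count = 0;
    if (!(in >> out.imagePath >> count) || count < 0) return false;
    out.objects.clear();
    for (int i = 0; i < count; ++i) {
        ObjectBox b{};
        if (!(in >> b.x >> b.y >> b.width >> b.height)) return false;
        if (b.width <= 0 || b.height <= 0) return false;
        out.objects.push_back(b);
    }
    std::string extra;
    return !(in >> extra);  // reject trailing tokens
}

// Formats an entry back into a description-file line.
std::string formatInfoLine(const InfoEntry& e) {
    std::ostringstream out;
    out << e.imagePath << ' ' << e.objects.size();
    for (const ObjectBox& b : e.objects)
        out << ' ' << b.x << ' ' << b.y << ' ' << b.width << ' ' << b.height;
    return out.str();
}
```

A check like this is mainly useful for catching annotation mistakes (wrong object count, zero-size boxes) before spending a day on training.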

@Eduardo yes, all my positive images look like the one I posted in my question: there's the coin and the background. And yes, as you said, opencv_createsamples should provide the coordinates for each image containing a coin, as explained here (http://www.memememememememe.me/traini...) and in many other tutorials...

Can you provide the command line you used for opencv_traincascade? Also the output log of opencv_traincascade, if you have it. It could help other people help you.

My bad: I thought all your positive images had the coin plus a random background, which is why I was confused and asked, to be sure I understood completely.

Your positive images are images with only the coins, and opencv_createsamples just combined them with random backgrounds and some image warping. Some people say this approach is not optimal, but that is a separate matter and should not be related to your issue.

@Eduardo all the commands I ran were the ones discussed here:

http://www.memememememememe.me/traini...

This is the only "useful" tutorial I found, and I followed it strictly. Now I'm trying LBP features (-featureType LBP), but I don't know if it will improve things. Anyway, yes, as you said, I had 100 photos showing only a 2€ coin, which were then combined with random backgrounds by running opencv_createsamples.

Let me know if there's a way I can achieve my goal; it's for my thesis.

I tried training a coin classifier myself. The results are:

Unfortunately, the detections are not very stable (a coin can be detected in one frame, missed in the next, detected again in the one after, etc.).

In my opinion, you should redo your training, as it should be possible to get better results.

Unfortunately, I don't have a magic recipe for training a good classifier, as I am not an expert in this field.

What I did:

- `opencv_createsamples` to warp (with no background) the original images, giving around 700 positive samples in the end
- `opencv_createsamples -vec`
- `-numStages 20 -minHitRate 0.999 -maxFalseAlarmRate 0.5` (the `opencv_traincascade` parameters)

You have to try and test many times with different parameters to understand what happens under the hood, in my opinion.
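The per-stage values -minHitRate 0.999 and -maxFalseAlarmRate 0.5 compound over the -numStages 20 stages, because a window has to pass every stage to be reported. As a rough rule of thumb (stage outcomes are not truly independent, so read these as targets, not guarantees), that aims for an overall hit rate of about 0.999^20 ≈ 0.98 and a false-alarm rate of about 0.5^20 ≈ 9.5 × 10⁻⁷ per scanned window. The small C++ helper below just does that arithmetic; CascadeTargets and cascadeTargets are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Rough overall targets implied by per-stage opencv_traincascade settings:
// both a true object and a false alarm must pass every stage.
struct CascadeTargets {
    double overallHitRate;     // approx. minHitRate ^ numStages
    double overallFalseAlarm;  // approx. maxFalseAlarmRate ^ numStages
};

CascadeTargets cascadeTargets(int numStages, double minHitRate, double maxFalseAlarmRate) {
    assert(numStages > 0);
    return {std::pow(minHitRate, numStages), std::pow(maxFalseAlarmRate, numStages)};
}
```

Since detectMultiScale() evaluates a very large number of windows over all positions and scales of an image, even a rate near one in a million can still leave a few false detections per image, which is one more reason not to set minNeighbors to 0.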

@Eduardo thank you very much for your comment, I will try another training right away. Anyway, I had already tried an LBP training. Would you like to have a look at my updated question? If I use an image that was used for training, the detection is quite good... but this does not happen with an arbitrary image :(

I would say that maybe you have overfitted your data? Also, the object is round, and I don't know exactly how to make the training invariant to the background.
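One way to test the overfitting hypothesis is to keep some annotated images (the smartphone photos, for instance) entirely out of training and score the cascade on them: if it finds the coin in almost every training image but in few held-out ones, that points to overfitting or to training samples that do not resemble real photos. Below is a minimal C++ sketch of scoring one image by intersection over union (IoU). Rect2, evaluateImage and the 0.5 IoU threshold are my own assumptions (0.5 is a common convention, not an OpenCV setting); the detections would come from detectMultiScale() and the ground truth from your own annotations.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical rectangle type standing in for cv::Rect.
struct Rect2 {
    int x, y, width, height;
};

// Intersection over union of two axis-aligned rectangles, in [0, 1].
double iou(const Rect2& a, const Rect2& b) {
    int ix = std::max(0, std::min(a.x + a.width, b.x + b.width) - std::max(a.x, b.x));
    int iy = std::max(0, std::min(a.y + a.height, b.y + b.height) - std::max(a.y, b.y));
    double inter = static_cast<double>(ix) * iy;
    double uni = static_cast<double>(a.width) * a.height +
                 static_cast<double>(b.width) * b.height - inter;
    return uni > 0 ? inter / uni : 0.0;
}

struct EvalCounts {
    int truePositives = 0;
    int falsePositives = 0;
    int missed = 0;
};

// Greedy matching for one image: each detection is matched to the unmatched
// ground-truth box with the highest IoU, provided that IoU >= threshold.
EvalCounts evaluateImage(const std::vector<Rect2>& detections,
                         const std::vector<Rect2>& groundTruth,
                         double threshold = 0.5) {
    EvalCounts c;
    std::vector<bool> matched(groundTruth.size(), false);
    for (const Rect2& d : detections) {
        int best = -1;
        double bestIou = threshold;
        for (std::size_t g = 0; g < groundTruth.size(); ++g) {
            double v = iou(d, groundTruth[g]);
            if (!matched[g] && v >= bestIou) {
                best = static_cast<int>(g);
                bestIou = v;
            }
        }
        if (best >= 0) {
            matched[best] = true;
            ++c.truePositives;
        } else {
            ++c.falsePositives;
        }
    }
    c.missed = static_cast<int>(std::count(matched.begin(), matched.end(), false));
    return c;
}
```

Summing these counts separately over training images and held-out images gives two comparable numbers, which is more informative than looking at a single result image.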


Making the training invariant to the background can ONLY be done by collecting real test images in the background conditions in which your classifier will have to work...
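My reading of this advice (an interpretation, not something stated in the thread) is that photos of the surfaces where the coins will actually be used, taken without coins, should go into the negative set. opencv_traincascade takes negatives through -bg as a plain text file with one image path per line. The C++ sketch below builds that file's contents from a list of paths; hasImageExtension, buildBackgroundList and the extension list are hypothetical choices.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

// True if the path ends with one of the listed image extensions
// (case-insensitive). The extension list is an assumption; adjust as needed.
bool hasImageExtension(const std::string& path) {
    static const std::vector<std::string> exts = {".jpg", ".jpeg", ".png", ".bmp"};
    std::string lower = path;
    std::transform(lower.begin(), lower.end(), lower.begin(),
                   [](unsigned char ch) { return static_cast<char>(std::tolower(ch)); });
    for (const std::string& e : exts)
        if (lower.size() >= e.size() &&
            lower.compare(lower.size() - e.size(), e.size(), e) == 0)
            return true;
    return false;
}

// Builds the contents of a negative-sample list (one image path per line),
// sorted, with duplicates and non-image files removed.
std::string buildBackgroundList(std::vector<std::string> paths) {
    std::sort(paths.begin(), paths.end());
    paths.erase(std::unique(paths.begin(), paths.end()), paths.end());
    std::string out;
    for (const std::string& p : paths)
        if (hasImageExtension(p)) out += p + "\n";
    return out;
}
```

Writing the returned string to a file (for example bg.txt) gives a list that can be passed as -bg bg.txt.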

@StevenPuttemans I don't know exactly what you mean, but it's impossible to collect real test images in the background conditions in which a classifier will have to work... people could lay the coin anywhere...