De-duplicate images of faces

I have a group of images of faces (.jpg and .png). Some of the images are taken from the same source but have been cropped, rotated, flipped, resized, compressed, color modified, brightened, darkened, etc., and so are not exact duplicates. So the freeware I currently use will not find these 'transformed' near-duplicate images.

Since the images are 'almost,' but not quite, identical, I hope facial recognition software can help identify the duplicates. Also, I need a system that, once each face has been compared with each of the others, can tell me which files match so that I can compare the files and decide which one of the pair to keep and which one to discard.

I'm not a programmer, but my son can do the programming. I am trying to find possible tools he can use to implement a solution. (He says tools that can be integrated with Linux would be the easiest but that he can work with most anything.)

I've read the facerec_tutorial but don't really understand it. Can the opencv facial recognition software find the matches and then output some sort of data that can be used for human comparison of the files? If not, what tools would he need to use in order to take the output from the recognition software and make it useable so that a human could examine the images?

Ah, being a newbie, I can't yet answer my own question, so, in answer to berak's reply below, there are about 150,000 images that need to be deduplicated.

The approach with face detection is probably too complicated. The modifications point rather to a feature-based approach (SIFT, SURF, ...).

i don't think opencv's face reco can help you here. it does 'supervised' learning: you give it a few imgs of person A, a few of person B, etc., and then it predicts whether a new img is A or B. unfortunately, shown an img of person C, it will still say A or B, because that's all it knows.

but your case seems to be the 'unsupervised' one (you don't know the identity), so all you can do is check each img against each other for similarity.
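Checking every image against every other can be sketched roughly as follows. This is only a minimal sketch, not OpenCV functionality: `candidate_pairs`, `write_report`, the file names and the toy `distance` function are all hypothetical, and the fingerprints are assumed to have been computed already by whatever similarity measure is chosen. The CSV output is one possible way to hand a human a list of pairs to review.

```python
import csv
import io
from itertools import combinations


def candidate_pairs(fingerprints, distance, max_distance):
    """Compare every fingerprint with every other one.

    fingerprints: dict mapping file name -> precomputed fingerprint
    distance:     function(fp_a, fp_b) -> number, smaller means more alike
    max_distance: pairs at or below this distance are reported
    """
    pairs = []
    for (name_a, fp_a), (name_b, fp_b) in combinations(sorted(fingerprints.items()), 2):
        d = distance(fp_a, fp_b)
        if d <= max_distance:
            pairs.append((name_a, name_b, d))
    # Closest pairs first, so the most likely duplicates are reviewed first.
    pairs.sort(key=lambda p: p[2])
    return pairs


def write_report(pairs, out):
    """Write the candidate pairs as CSV for manual review."""
    writer = csv.writer(out)
    writer.writerow(["file_a", "file_b", "distance"])
    writer.writerows(pairs)


# Toy data: hypothetical file names with made-up integer fingerprints.
fingerprints = {"a.jpg": 10, "b.png": 12, "c.jpg": 100}
pairs = candidate_pairs(fingerprints, lambda x, y: abs(x - y), max_distance=5)

report = io.StringIO()
write_report(pairs, report)
print(report.getvalue())
```

For n images this does n(n-1)/2 comparisons, which for about 150,000 images is roughly 11 billion, so each individual comparison needs to be cheap.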

btw, how many images are there ?

try phash first.
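To give a feel for what a perceptual hash does, here is a minimal sketch of an "average hash", a much simpler relative of the hashes in pHash and not pHash's own algorithm. The function name `average_hash` and the toy image are assumptions for illustration; the input is a grayscale image given as a list of rows.

```python
def average_hash(gray, hash_size=8):
    """Return a hash_size*hash_size-bit integer fingerprint of a grayscale image.

    gray: list of rows, each a list of brightness values (e.g. 0-255).
    The image must be at least hash_size pixels in each dimension.
    """
    height, width = len(gray), len(gray[0])
    cells = []
    # Shrink the image to hash_size x hash_size by averaging blocks of pixels.
    for r in range(hash_size):
        y0, y1 = r * height // hash_size, (r + 1) * height // hash_size
        for c in range(hash_size):
            x0, x1 = c * width // hash_size, (c + 1) * width // hash_size
            block = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    # One bit per cell: 1 if the cell is brighter than the overall mean.
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


# Toy 16x16 image: a left-to-right brightness ramp.
original = [[x * 10 for x in range(16)] for _ in range(16)]
brighter = [[v + 20 for v in row] for row in original]
flipped = [row[::-1] for row in original]

print(average_hash(original) == average_hash(brighter))
print(average_hash(original) == average_hash(flipped))
```

In this toy case a uniform brightness change leaves the hash unchanged while a flip changes it completely. Hashes of this kind typically tolerate brightness changes but not geometric changes such as flips or large rotations.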

"I can't yet answer my own question," - oh, just make a comment; we can convert it to an answer if necessary.

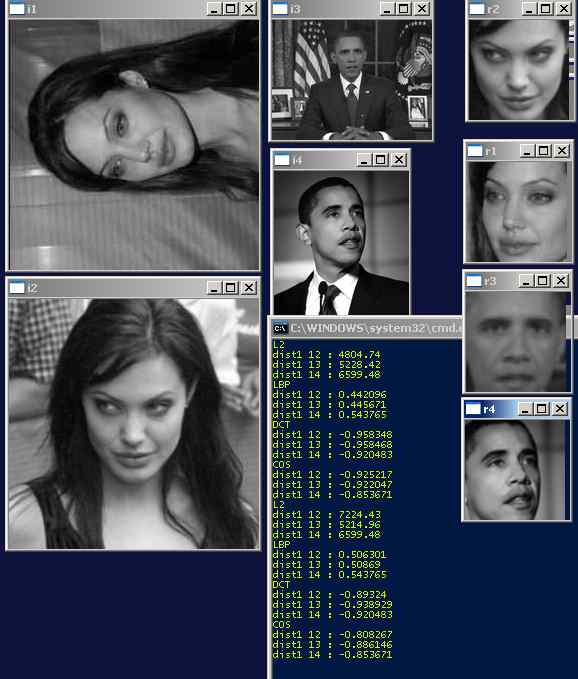

I used the pHash demo on two pairs of .jpg files. Each pair contained the original image and a cropped image. The first pair was heavily cropped and I didn't expect good results. The second pair was a 'typical' crop, cropping off both sides of the image and leaving only the face in the middle. I got no matches either time. Here are the results from the 'typical' image:

Select 2 JPEG or BMP images to compare and click Submit.
- Images may be saved for statistical analysis and to improve the pHash algorithms. Images will never be redistributed.
- Algorithm: RADISH (radial hash); DCT hash; Marr/Mexican hat wavelet
- RADISH: pHash determined your images are not similar with PCC = 0.366513. Threshold set to 0.85.
- DCT: pHash determined your images are not similar with hamming distance = 30.000000. Threshold set to 26.00.
- Marr/Mexican: pHash determined your images are not similar with normalized hamming distance = 0.468750. Threshold set to 0.40.
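The DCT result above is a Hamming distance: the number of bit positions in which two hashes differ. A minimal sketch of that check follows, assuming 64-bit integer hashes and assuming that a distance at or below the threshold counts as similar. The hash values are made up so that the distance comes out at 30, as in the report.

```python
def hamming_distance(hash_a, hash_b):
    """Count the bit positions in which two integer hashes differ."""
    return bin(hash_a ^ hash_b).count("1")


def is_similar(hash_a, hash_b, threshold=26):
    """Assumed rule: similar when the distance does not exceed the threshold."""
    return hamming_distance(hash_a, hash_b) <= threshold


# Made-up 64-bit hashes that differ in exactly 30 bit positions.
hash_a = 0x0000000000000000
hash_b = 0x000000003FFFFFFF

print(hamming_distance(hash_a, hash_b))
print(is_similar(hash_a, hash_b))
```

Note the direction of each threshold in the report: for the Hamming distances a lower value means more similar, whereas for the RADISH PCC a higher value means more similar.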

Then I cropped the image lightly, maybe 10% of the total area and got a report that the images are similar. Here is the result I got on just one test: -pHash determined your images are similar with PCC = 0.993786. Threshold set to 0.85.

I then rotated the image 45 degrees (a typical rotation in the project I'm working on) and got a report that the images are not similar: -pHash determined your images are not similar with PCC = 0.308770. Threshold set to 0.85.

So I believe that, as currently configured, phash will not work for my project, unless ...

"Face detection" images can be extracted from the original images I'm working with and those extracted images rotated to the horizontal on the eyes, then resized, then compared with each other using phash.

Does OpenCV have tools that can detect and then extract faces from images, so that the extracted faces can then be compared?

@VHarris, of course! Use the Viola-Jones cascade classifier to find faces in the image. Then cut out the found detections and run an eye detector on top of that. Both face and eye models are included with OpenCV. Then use the center points of your eye detections to align the faces.

Just want to make sure I've got this right so far:

1) Use face_cascade to find the face.
2) Use eye_cascade to find the eyes within the face.
3) Use the center point of the eye detections to align the face. How is this typically done? And once the faces are aligned, how are the faces extracted from the image for use by pHash?
4) Use pHash to compare the images.
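Step 3 is commonly done by rotating the image about the midpoint between the eyes by the angle of the line joining them. The sketch below works that out in plain Python; `eye_alignment`, `rotation_matrix` and `transform_point` are hypothetical helper names and the eye coordinates are made up. The 2x3 matrix follows the same formula as OpenCV's `cv2.getRotationMatrix2D`, so in practice one would likely call that and pass the result to `cv2.warpAffine`, then crop the face with ordinary array slicing before hashing.

```python
import math


def eye_alignment(left_eye, right_eye):
    """Return (center, angle_degrees) needed to make the eye line horizontal.

    left_eye / right_eye: (x, y) pixel centers; left_eye is the one that
    appears further left in the image. Image y grows downwards.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    angle = math.degrees(math.atan2(dy, dx))
    center = ((left_eye[0] + right_eye[0]) / 2.0,
              (left_eye[1] + right_eye[1]) / 2.0)
    return center, angle


def rotation_matrix(center, angle_degrees, scale=1.0):
    """2x3 affine matrix for a rotation about `center`."""
    a = scale * math.cos(math.radians(angle_degrees))
    b = scale * math.sin(math.radians(angle_degrees))
    cx, cy = center
    return [[a, b, (1 - a) * cx - b * cy],
            [-b, a, b * cx + (1 - a) * cy]]


def transform_point(matrix, point):
    """Apply the 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    return (matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
            matrix[1][0] * x + matrix[1][1] * y + matrix[1][2])


# Made-up eye centers for a face that is tilted in the image.
left_eye, right_eye = (30.0, 40.0), (70.0, 60.0)
center, angle = eye_alignment(left_eye, right_eye)
matrix = rotation_matrix(center, angle)
new_left = transform_point(matrix, left_eye)
new_right = transform_point(matrix, right_eye)
print(round(angle, 2), new_left, new_right)
```

After the transform both eyes have the same y coordinate, which is the condition for the face being level. Resizing every aligned crop to one fixed size before hashing keeps the comparison consistent across images.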