Guidance for mapping a 2D image onto 3D geometry

Dear all,

I am trying to perform one of the following tasks:

a) use an image as a texture on a 3D surface, or b) warp an image so that its shape represents a 3D shape

Available input:

- 3D geometry --> as point cloud or triangulated mesh

- 3D object coordinates of a set of reference points

- 2D image coordinates of the same reference points

Boundary conditions:

- images from a single camera only (no stereo)

- uncalibrated camera

- camera has fixed focal length

- reference points are distributed over the 3D surface

Current Approach:

- perform camera calibration --> get intrinsic parameters and distortion coefficients

- solvePnP --> use results from camera calibration

What's next?

Programming language: python

I think this has been done many times before, but I may not have the right search keywords.

For camera calibration I followed this tutorial: https://opencv-python-tutroals.readth...

Basically, to get started, I am looking for a "recipe" that would guide me through the necessary steps. Any help is very welcome.

Thank you,

Philipp

Edit ...Attachments:

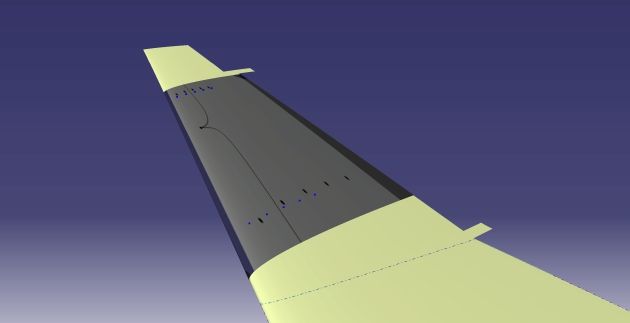

generic example of camera view

generic example of mapped image onto 3D surface as texture (mapping does not fit very well)

2D-3D reference coordinates & 3D point cloud of geometry available as csv-file ...how to best upload?

a point cloud isn't a surface. you'll have to process your point cloud into a mesh surface or other surface description first. that is, if you want to put texture on triangles. if you only want to give your cloud's points some color, that's simpler: project them onto the image, sample image.
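A rough sketch of the "project them onto the image, sample image" idea, assuming a plain pinhole model without lens distortion, a 3-channel image, and a pose (`R`, `t`) already known, e.g. from solvePnP followed by `cv2.Rodrigues`. The function names and the tiny test image are made up for illustration. Sampling is nearest-neighbour and occlusion is not handled, so points hidden behind other geometry would still receive a colour.

```python
import numpy as np


def project_points(points_3d, R, t, K):
    """Pinhole projection of Nx3 points; returns (Nx2 pixels, depth)."""
    cam = points_3d @ R.T + t          # object frame -> camera frame
    xy = cam[:, :2] / cam[:, 2:3]      # perspective division
    u = K[0, 0] * xy[:, 0] + K[0, 2]
    v = K[1, 1] * xy[:, 1] + K[1, 2]
    return np.stack([u, v], axis=1), cam[:, 2]


def sample_colors(image, pixels, depth):
    """Nearest-neighbour colour lookup; points outside the image
    or behind the camera are flagged as invalid."""
    h, w = image.shape[:2]
    cols = np.rint(pixels[:, 0]).astype(int)
    rows = np.rint(pixels[:, 1]).astype(int)
    valid = (depth > 0) & (cols >= 0) & (cols < w) & (rows >= 0) & (rows < h)
    colors = np.zeros((len(pixels), image.shape[2]), dtype=image.dtype)
    colors[valid] = image[rows[valid], cols[valid]]
    return colors, valid


# Tiny synthetic example: 4x6 image, identity pose.
image = np.arange(4 * 6 * 3, dtype=np.uint8).reshape(4, 6, 3)
K = np.array([[100.0, 0.0, 3.0],
              [0.0, 100.0, 2.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.zeros(3)
cloud = np.array([[0.0, 0.0, 1.0],      # lands on pixel (col 3, row 2)
                  [0.02, -0.01, 1.0],   # lands on pixel (col 5, row 1)
                  [1.0, 0.0, 1.0]])     # projects outside the image

pixels, depth = project_points(cloud, R, t, K)
colors, valid = sample_colors(image, pixels, depth)
```

With lens distortion, `cv2.projectPoints` could replace `project_points`, since it applies the distortion coefficients from calibration.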

also, DO NOT use "tutroals". it's out of date by five years and all of that content is in docs.opencv.org anyway.

Question revised.

@ crackwitz: Yes, the point cloud is used to build a mesh. Optionally I can already use a generated mesh as input. The task is not to create a coloured point cloud, but to accurately map the image onto the 3D surface using the known 2D-3D coordinates of the reference points.

ok, so you can use solvePnP() to get a transformation, but from there on it is probably "texture mapping", for which you probably should use some 3d editor like blender, not try to write opencv code
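One possible way to bridge from the solvePnP result to texture mapping, sketched under assumptions: project every mesh vertex into the image (e.g. with `cv2.projectPoints`), convert the pixel coordinates to normalized UV coordinates, and store them with the mesh so that a 3D viewer or editor can display the image as a texture. The helper names below are hypothetical, and the flipped v axis assumes the common OBJ convention of a texture origin at the bottom-left; some tools differ. Vertices that face away from the camera or are occluded would still get UVs, so they would show wrong image content.

```python
import numpy as np


def pixels_to_uv(pixels, width, height):
    """Convert pixel coordinates (origin top-left) to UV in [0, 1]
    with the v axis pointing up (assumed OBJ convention)."""
    pixels = np.asarray(pixels, dtype=float)
    u = pixels[:, 0] / width
    v = 1.0 - pixels[:, 1] / height
    return np.stack([u, v], axis=1)


def textured_obj_text(vertices, uv, faces):
    """Build Wavefront OBJ text with one UV per vertex.
    faces holds 0-based vertex index triples; OBJ indices are 1-based."""
    lines = []
    for x, y, z in vertices:
        lines.append(f"v {x} {y} {z}")
    for u, v in uv:
        lines.append(f"vt {u} {v}")
    for a, b, c in faces:
        lines.append(f"f {a + 1}/{a + 1} {b + 1}/{b + 1} {c + 1}/{c + 1}")
    return "\n".join(lines) + "\n"


# Hypothetical single triangle whose vertices project to these pixels
# in a 640x480 image.
vertices = np.array([[0.0, 0.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0]])
projected_pixels = np.array([[0.0, 0.0],
                             [640.0, 480.0],
                             [320.0, 240.0]])
faces = [(0, 1, 2)]

uv = pixels_to_uv(projected_pixels, width=640, height=480)
obj_text = textured_obj_text(vertices, uv, faces)
print(obj_text)
```

To actually show the image, the OBJ file would also need a material (.mtl) file that references the image, or the texture could be assigned manually in the viewer.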

@ berak: The image is from an experimental measurement. The 3D surface is from CAD. Numerical data (X-Y-Z coordinates) shall be overlaid with the image in 3D space. My hope is to get all this together in Python using OpenCV.

@ berak: It is possible to do UV texture mapping in the CAD software (CATIA), but it is cumbersome and only approximate, since it means shifting/manipulating the image (the texture) by mouse and parameter settings. That is OK for one image, but there are a lot of them.

it probably gets better if you show your image and a rendered mesh/cloud ?

Question is updated. The 2D/3D coordinates are available as *.csv files. How to best upload them here? The uploaded result does not map the image correctly to the 3D surface: the black dots in the image should match the blue dots of the 3D geometry. But hopefully the idea is clearer now. Thank you.