ArUco: Getting the coordinates of a marker in the coordinate system of another marker

Hi,

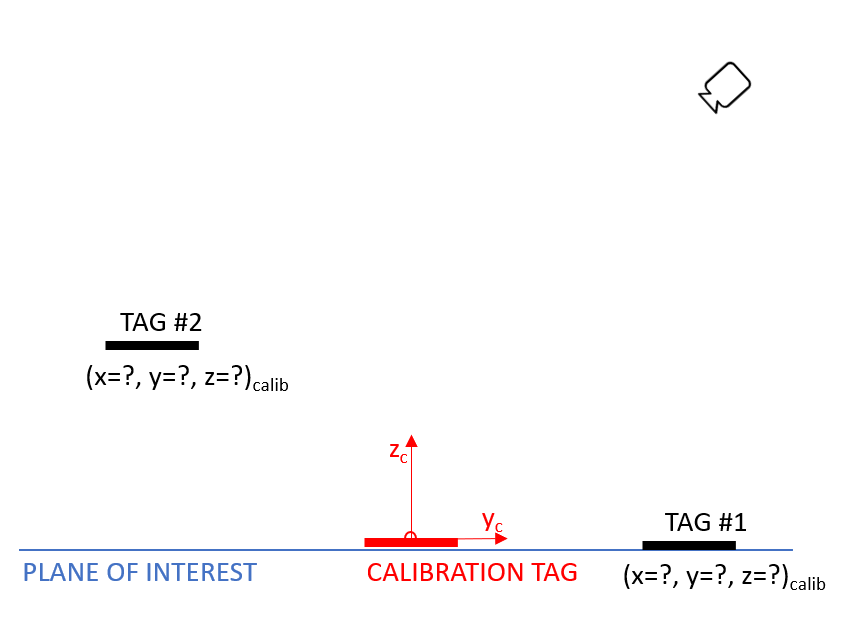

I have a question regarding pose estimation of ArUco markers and coordinate system translation. What I would basically like to accomplish is to get the coordinates of a marker using a coordinate system that is based on another marker.

Let me explain. The plan is to use one stationary marker as a calibration point. After getting the rvec and tvec vectors of that calibration marker I would then like to use this information to calculate the coordinates of the others, in the calibration marker’s coordinate system. (note that the camera and calibration tag are stationary, while the others move)

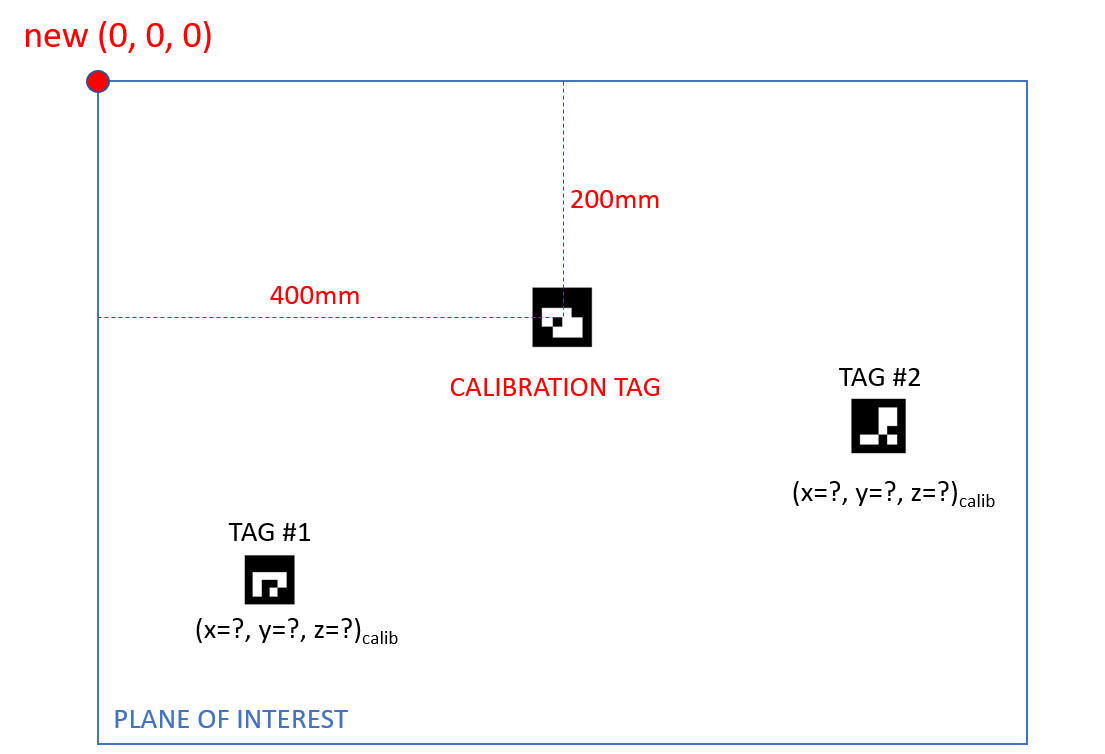

The end goal is to get the x and y coordinates of the markers in mm on the plane of interest. I could then move the origin point to suit my application with some simple addition/subtraction, as the absolute position of my calibration marker is known.

I’ve already figured out how to get the coordinates of markers in the camera’s coordinate system and how to get the camera’s coordinates in the coordinate system of a marker, but I haven’t been able to work out how to get the coordinates of a marker in the coordinate system of another marker.

I’m not really familiar with all of these computer vision concepts, so I have been reading things that have been previously suggested (like this, and this), but I haven’t been able to find a solution yet.

I would really appreciate it if you could verify that what I’m trying to do is possible and point me in the right direction.

Thanks

you need to turn your rvec and tvec info into good old 4x4 matrices that express this transformation. the rest is "easy". read up on some computer graphics concepts such as transformation matrices, projective spaces, homogeneous coordinates. they're 4-element vectors and 4x4 matrices. the basics are: divide a vector by 4th component to get it back into "normal" space. multiply matrices to get a complex transformation. the identity matrix is the basis for lots of things. look at a matrix that translates, one that scales, one that rotates.
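As a rough illustration of those basics, here is a minimal NumPy sketch. The helper names (`translation`, `rotation_z`, `to_homogeneous`, `from_homogeneous`) are made up for this example and are not part of OpenCV; it only shows how 4x4 matrices compose and how a 4-element vector is brought back to "normal" space.

```python
import numpy as np

def translation(tx, ty, tz):
    """4x4 matrix that translates by (tx, ty, tz)."""
    T = np.eye(4)
    T[:3, 3] = [tx, ty, tz]
    return T

def rotation_z(angle_rad):
    """4x4 matrix that rotates about the z axis by angle_rad."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    R = np.eye(4)
    R[:2, :2] = [[c, -s], [s, c]]
    return R

def to_homogeneous(p):
    """(x, y, z) -> (x, y, z, 1)."""
    return np.append(np.asarray(p, dtype=float), 1.0)

def from_homogeneous(v):
    """Divide by the 4th component to get back to 3D."""
    return v[:3] / v[3]

# Matrices compose by multiplication; the rightmost one is applied first.
# Here: rotate 90 degrees about z, then translate by (10, 0, 0).
M = translation(10, 0, 0) @ rotation_z(np.pi / 2)

p = from_homogeneous(M @ to_homogeneous([1, 0, 0]))
print(p)  # approximately [10, 1, 0]
```

For rigid transforms like these the 4th component stays 1, so the division is a no-op; it only matters once projective matrices are involved.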

if you have matrices expressing marker-space-to-camera-space transformations, let's say M1 and M2 (for marker 1 and marker 2), the transformation from marker 1 space to marker 2 space is M2^-1 * M1. a point in marker 1 space is transformed into camera space ("forward" M1 transformation), then transformed into marker 2 space (inverted M2 transformation). what you have now can tell you, for any point in marker 1 space, where in marker 2 space it is. apply this to (0,0,0), i.e. (0,0,0,1) in homogeneous coordinates, to get marker 1's origin in marker 2 space. you can apply it to basis vectors too.
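A hedged sketch of that recipe, assuming rvec is an axis-angle vector and tvec a translation, both describing the marker-to-camera transform as in the paragraph above. The `rodrigues` helper is a plain NumPy stand-in using the axis-angle convention of `cv2.Rodrigues`, so the example runs without OpenCV; `pose_to_matrix` and the pose values are invented for illustration.

```python
import numpy as np

def rodrigues(rvec):
    """Axis-angle vector -> 3x3 rotation matrix (Rodrigues' formula)."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1 - np.cos(theta)) * (K @ K)

def pose_to_matrix(rvec, tvec):
    """Build the 4x4 marker-space-to-camera-space matrix from rvec and tvec."""
    M = np.eye(4)
    M[:3, :3] = rodrigues(rvec)
    M[:3, 3] = np.asarray(tvec, dtype=float).reshape(3)
    return M

# Made-up poses in mm: marker 1 sits 100 mm along camera x,
# marker 2 is on the optical axis, rotated 90 degrees about z.
M1 = pose_to_matrix([0, 0, 0], [100, 0, 500])
M2 = pose_to_matrix([0, 0, np.pi / 2], [0, 0, 500])

# marker 1 space -> camera space -> marker 2 space
M1_to_M2 = np.linalg.inv(M2) @ M1

# Marker 1's origin expressed in marker 2's coordinate system.
origin_1_in_2 = (M1_to_M2 @ np.array([0.0, 0.0, 0.0, 1.0]))[:3]
print(origin_1_in_2)  # approximately [0, -100, 0]
```

With real detections, `cv2.Rodrigues(rvec)[0]` would normally replace the hand-written `rodrigues` helper.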

Thank you for the response. I think I have something, please correct me if I’m wrong.

So, I can first take the tvec and rvec of my calibration tag and create the 4x4 matrix you were talking about, which consists of Rodrigues(rvec) and tvec, padded with 0 0 0 1 as the last row.

This gives me a change of basis matrix from the camera coordinate system to the calib_tag one, which I’ll name M_calib.

I do the same for one of the other markers (tag#2 for example) and I get M2 and its inverse. I then multiply the coordinates of the tag#2 (tvec of tag#2) with the matrices that I got:

M2^-1 * M_calib * (x, y, z, 1)^T = (x', y', z', 1)^T

And at the end I should get the coordinates of the tag#2, expressed in the coordinate system of the calibration tag.

I tried what I described above, but unfortunately it didn't work. Is anyone able to see what I did wrong?
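One way to check this kind of formula is to test it on synthetic poses where the answer is known in advance. The sketch below is only that, with made-up numbers and hypothetical helper names (`rigid`, `rot_x`). It assumes, as in the reply above, that each 4x4 matrix maps marker space to camera space. Under that assumption the tag's position in the calibration frame would be M_calib^-1 * M2 applied to the tag's own origin (0, 0, 0, 1), rather than M2^-1 * M_calib applied to tvec.

```python
import numpy as np

def rigid(R, t):
    """4x4 rigid transform from a 3x3 rotation and a translation."""
    M = np.eye(4)
    M[:3, :3] = R
    M[:3, 3] = t
    return M

def rot_x(a):
    """3x3 rotation about the x axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, c, -s],
                     [0.0, s, c]])

# Made-up calibration tag pose (marker space -> camera space), in mm.
M_calib = rigid(rot_x(np.pi), [0.0, 0.0, 600.0])

# Ground truth: the tag sits at (50, 30, 0) in the calibration tag's frame.
p_true = np.array([50.0, 30.0, 0.0])
M_tag = M_calib @ rigid(np.eye(3), p_true)  # pose the camera would report

# Tag origin -> camera space -> calibration tag space.
origin = np.array([0.0, 0.0, 0.0, 1.0])
recovered = (np.linalg.inv(M_calib) @ M_tag @ origin)[:3]

# The expression from the post: inverse on the tag matrix, applied to tvec.
tvec_tag = M_tag[:3, 3]
attempt = (np.linalg.inv(M_tag) @ M_calib @ np.append(tvec_tag, 1.0))[:3]

print(recovered)  # matches p_true
print(attempt)    # does not match p_true
```

In this synthetic case only the first expression recovers the known position, which suggests both the multiplication order and the choice of input vector are worth checking.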