Calibrating camera only for one plane

I want to build a device that measures anything you put on it. It is basically a camera under a sheet of glass. The camera only responds to infrared light (so I have IR LED strips around the viewport). The problem I am facing is finding a strategy to calibrate the camera. Due to the camera's lens, the image is distorted a bit like a fish-eye.

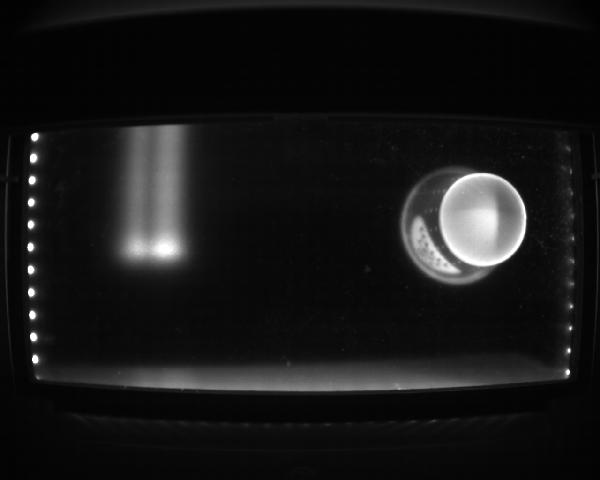

The picture below shows the concept. I have IR LEDs on 4 sides of the viewport. The viewport does not cover the full resolution of the camera: the camera is 1280x1024 and the viewport (ROI) is roughly 1100x600 pixels. In this picture, you can see a paper coffee cup and light from the ceiling (yes, the ceiling light has some IR content in it, but that is not the main problem).

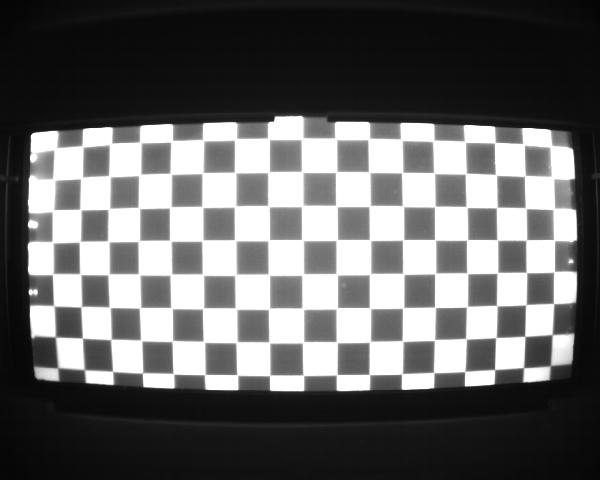

I have printed a large checkerboard with 20mm squares and this is how it looks normally (with reduced camera exposure):

So my question is: how should I go about calibrating this setup? I tried the "calibrate.cpp" in the OpenCV examples but it does not work. I mean the "undistorted" image that it yields is no different from the original picture.

The reason is that I cannot lift the checkerboard off the glass that the camera sits under, because the IR lights are not strong enough. If I lift the checkerboard just 10cm from the glass, the squares are no longer visible to the camera.

I know that for calibration one would need points in infinity:

image_points = P * 3d_points, where P = intrinsic * extrinsic

Here, I can not have the extrinsic parameter.

How would you approach such a problem? Where should I begin?

My own idea is to manually select squares in the corners; since their size and number are known, I could somehow correct the camera with those numbers. But I have no idea which OpenCV functions can be helpful in this case.

Use this

9x6 chessboard pattern. Calibrate as usual, that is, using many images with various orientations and positions.

I can not lift the checkerboard from the glass over the camera...if I move it up or orient it the squares will not be visible.

If I understand what you are trying to do, I have done something like this before. Basically the idea is to do an iterative process where you compute a homography + a distortion estimate to predict where you will find chessboard corners in the image, and you "search" radially outward from your starting point, keeping corner points that are close (in image space) to the predicted location, and then you compute a new distortion estimate from your larger set of points. Then using that distortion estimate you compute a new H (using the undistorted image locations and world (grid) locations). Step and repeat until you have found "all" of the points in the pattern.
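To make the prediction step concrete, here is a minimal sketch in plain C++ (no OpenCV dependency) of how a predicted corner location could be computed and compared against a detected one. It assumes a single radial coefficient `k1` applied in normalized camera coordinates, which is a simplification of OpenCV's full distortion model. The names `Pt`, `Mat3`, `predictCorner` and `acceptCorner` are hypothetical helpers for illustration and are not taken from the original implementation.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Pt { double x, y; };
using Mat3 = std::array<std::array<double, 3>, 3>;

// Map a grid point on the glass plane (e.g. in mm) to ideal, undistorted
// pixel coordinates using the current homography estimate H.
Pt applyHomography(const Mat3& H, Pt p) {
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    assert(std::abs(w) > 1e-12);  // point must not map to infinity
    return { (H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
             (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w };
}

// Apply a one-coefficient radial distortion (assumed model) around the
// principal point (cx, cy), with focal length f given in pixels.
Pt distort(Pt u, double k1, double f, double cx, double cy) {
    const double xn = (u.x - cx) / f;
    const double yn = (u.y - cy) / f;
    const double s = 1.0 + k1 * (xn * xn + yn * yn);
    return { cx + f * xn * s, cy + f * yn * s };
}

// Predicted location of a grid corner in the raw (distorted) image.
Pt predictCorner(const Mat3& H, Pt grid, double k1,
                 double f, double cx, double cy) {
    return distort(applyHomography(H, grid), k1, f, cx, cy);
}

// Keep a detected corner only if it lies close to the prediction.
bool acceptCorner(Pt predicted, Pt detected, double maxDistPx) {
    return std::hypot(predicted.x - detected.x,
                      predicted.y - detected.y) <= maxDistPx;
}
```

In each iteration, the accepted corners would be added to the correspondence set before the distortion coefficients and H are re-estimated; the acceptance radius `maxDistPx` is a tuning parameter that is not specified in this thread.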

If that all makes sense, the key OpenCV call that I made was this:

```cpp
// The purpose of this function is to generate distortion estimates only, so
// we are using a fixed intrinsic guess (FIX_FOCAL_LENGTH..ASPECT_RATIO..._PRINCIPAL_POINT)
// so we will get consistent distortion parameters as we iterate
auto lError = cv::calibrateCamera(lWorldPointsList, lImagePointsList, arImage.size(),
                                  arCamMat, arDist, lRvecs, lTvecs,
                                  cv::CALIB_USE_INTRINSIC_GUESS |
                                  cv::CALIB_FIX_FOCAL_LENGTH |
                                  cv::CALIB_FIX_ASPECT_RATIO |
                                  cv::CALIB_FIX_PRINCIPAL_POINT);
```

So my arCamMat was estimated, but close to actual. I ignored the rvec/tvec return values, since I was only interested in getting distortion estimates. I started with an H based on 4 corner points (one chessboard square), and after about 5 iterations my distortion parameters were good enough that a homography would map world points to undistorted image points very well.

I was just using this as a robust way to get correspondences. I also had a way to precisely move the target further from / closer to the camera (so I had known Z depths for several different positions), and I then stuffed the correspondences / world coords (with varying Z values) into calibrateCamera to get a full camera model. Cheap optics, OV5640 sensor, RMS error approx. 0.5 pixels. Good enough for what I was doing.

The main point of the code snippet is that I used estimated intrinsics together with cv::CALIB_USE_INTRINSIC_GUESS (and the related CALIB_FIX_* flags) in order to get calibrateCamera to compute distortion coefficients from a single image.

As I mentioned, I ended up doing a full camera calibration, but if you get good distortion estimates, you should be able to just use a homography + distortion estimates in order to make measurements in image space (at the height of the original checkerboard).
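As a rough illustration of measuring with a homography plus distortion estimates, the sketch below undistorts two pixel locations, maps them onto the checkerboard plane, and returns their distance in plane units. It is plain C++ under stated assumptions: a single radial coefficient `k1` (OpenCV's model has more terms), and an image-to-plane homography `Hinv` that has already been estimated elsewhere. `undistortPixel` and `measureMm` are hypothetical names; with OpenCV, `cv::undistortPoints` and `cv::perspectiveTransform` should cover the same two steps.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Pt { double x, y; };
using Mat3 = std::array<std::array<double, 3>, 3>;

// Invert the one-coefficient radial model x_d = x_u * (1 + k1 * r_u^2)
// by fixed-point iteration in normalized coordinates.
Pt undistortPixel(Pt d, double k1, double f, double cx, double cy) {
    assert(f > 0.0);
    const double xd = (d.x - cx) / f;
    const double yd = (d.y - cy) / f;
    double xu = xd, yu = yd;  // initial guess: no distortion
    for (int i = 0; i < 20; ++i) {
        const double s = 1.0 + k1 * (xu * xu + yu * yu);
        xu = xd / s;
        yu = yd / s;
    }
    return { cx + f * xu, cy + f * yu };
}

// Apply a 3x3 projective transform to a 2D point.
Pt applyHomography(const Mat3& H, Pt p) {
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    assert(std::abs(w) > 1e-12);
    return { (H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
             (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w };
}

// Distance on the checkerboard plane (in the units of Hinv, e.g. mm)
// between two raw image pixels a and b.
double measureMm(const Mat3& Hinv, Pt a, Pt b, double k1,
                 double f, double cx, double cy) {
    const Pt A = applyHomography(Hinv, undistortPixel(a, k1, f, cx, cy));
    const Pt B = applyHomography(Hinv, undistortPixel(b, k1, f, cx, cy));
    return std::hypot(A.x - B.x, A.y - B.y);
}
```

The result is only valid for points lying at the height of the original checkerboard, since the homography describes that one plane.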

If you want to undistort and correct the perspective in the image (so that, for example, 1 pixel = 0.1mm), then call cv::undistort on the image, followed by cv::getPerspectiveTransform + cv::warpPerspective.
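The output scale is set by the four destination points handed to cv::getPerspectiveTransform. A small sketch of how those points and the output image size could be derived from a chosen scale, assuming the four source points are the undistorted image positions of the corners of a rectangle of known size on the glass (`RectifiedLayout` and `layoutForScale` are hypothetical names for illustration):

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Pt { double x, y; };

struct RectifiedLayout {
    std::array<Pt, 4> dst;  // top-left, top-right, bottom-right, bottom-left
    int width;              // output image width in pixels
    int height;             // output image height in pixels
};

// Destination corners and output size for a widthMm x heightMm rectangle
// rendered at mmPerPixel (e.g. 0.1 for "1 pixel = 0.1 mm").
RectifiedLayout layoutForScale(double widthMm, double heightMm,
                               double mmPerPixel) {
    assert(widthMm > 0.0 && heightMm > 0.0 && mmPerPixel > 0.0);
    const double w = widthMm / mmPerPixel;
    const double h = heightMm / mmPerPixel;
    RectifiedLayout out;
    out.dst[0] = { 0.0, 0.0 };
    out.dst[1] = { w, 0.0 };
    out.dst[2] = { w, h };
    out.dst[3] = { 0.0, h };
    // +1 so that the far corner pixel itself lies inside the image.
    out.width = static_cast<int>(std::lround(w)) + 1;
    out.height = static_cast<int>(std::lround(h)) + 1;
    return out;
}
```

For a 160mm x 100mm rectangle at 0.1mm per pixel, the destination corners span 1600 x 1000 pixels, and a distance of n pixels in the warped image corresponds to n * 0.1mm on the glass.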

I hope that all makes sense. I'm pretty sure it would do what you want, but maybe someone else has a better approach. For me the difficult part was reliably traversing the grid (in distorted image coords) in order to make sure I had good correspondences between image coords and chessboard grid coords. There is probably a better way to do it, but at the time the standard OpenCV "findChessboardCorners" didn't work for my case (I think it had trouble with the distortion). If you can get that to work for you (and have a way to assign world point correspondences), then that would be easiest. Then just call calibrateCamera as above to get distortion coefficients.

sorry for the rambling responses...maybe it will be helpful.

@swebb_denver

Probably you should add it as an answer. It will be more readable.