Faster-RCNN gives no results

I am trying to run the Faster-RCNN Inception v2 model in OpenCV 3.4.6 (C++) using the object_detection.cpp sample.

The model doesn't work: the DetectionOutput layer returns a single detection with empty *data.
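For reference, here is a minimal sketch of how a DetectionOutput blob is typically read. It assumes the usual 1x1xNx7 layout, where each row is [batchId, classId, confidence, left, top, right, bottom]. A plain float buffer stands in for the blob's data pointer so the snippet does not depend on OpenCV. The names `Detection` and `parseDetections` are hypothetical and not part of the sample.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper type: one row of a DetectionOutput blob.
struct Detection {
    int classId;
    float confidence;
    float left, top, right, bottom;
};

// Reads rows of [batchId, classId, confidence, left, top, right, bottom]
// from a flat float buffer (standing in for the data of a 1x1xNx7 blob)
// and keeps the rows whose confidence exceeds confThreshold.
std::vector<Detection> parseDetections(const float* data, std::size_t total,
                                       float confThreshold) {
    std::vector<Detection> result;
    if (data == nullptr) {
        return result;  // empty blob: nothing to read
    }
    // Step through the buffer one 7-value row at a time.
    for (std::size_t i = 0; i + 7 <= total; i += 7) {
        const float confidence = data[i + 2];
        if (confidence > confThreshold) {
            result.push_back({static_cast<int>(data[i + 1]), confidence,
                              data[i + 3], data[i + 4],
                              data[i + 5], data[i + 6]});
        }
    }
    return result;
}
```

With this kind of parsing, a null data pointer or a single row whose confidence is below the threshold yields no boxes at all. The coordinates are returned as stored, without any scaling to the frame size.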

This is how I run the app (following https://github.com/opencv/opencv/tree...):

./object-detection --model=../models/faster_rcnn_inception.pb --config=../models/faster_rcnn_inception.pbtxt --classes=../models/coco.classes --width=300 --height=300 --scale=0.00784 --rgb --mean="127.5 127.5 127.5" --input=video.mp4 --nms 0.01

YOLO classes with RCNN? No. (However, it won't change anything, I guess.)

That is just the name of the file, because I tested YOLO first. Those are the COCO class names. It doesn't change anything; they are only labels.

How can we reproduce it? Is it a public model? Can you provide at least a sample image?

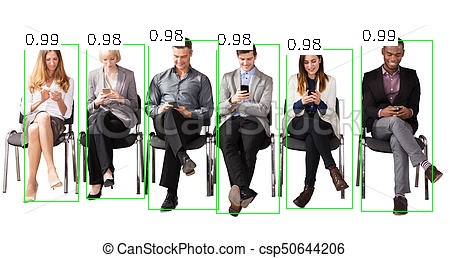

I use the model that is linked. I don't think the input is the problem here; for example, it doesn't work for this image either. The YOLO model works fine.