Detecting people from above with a thermal camera

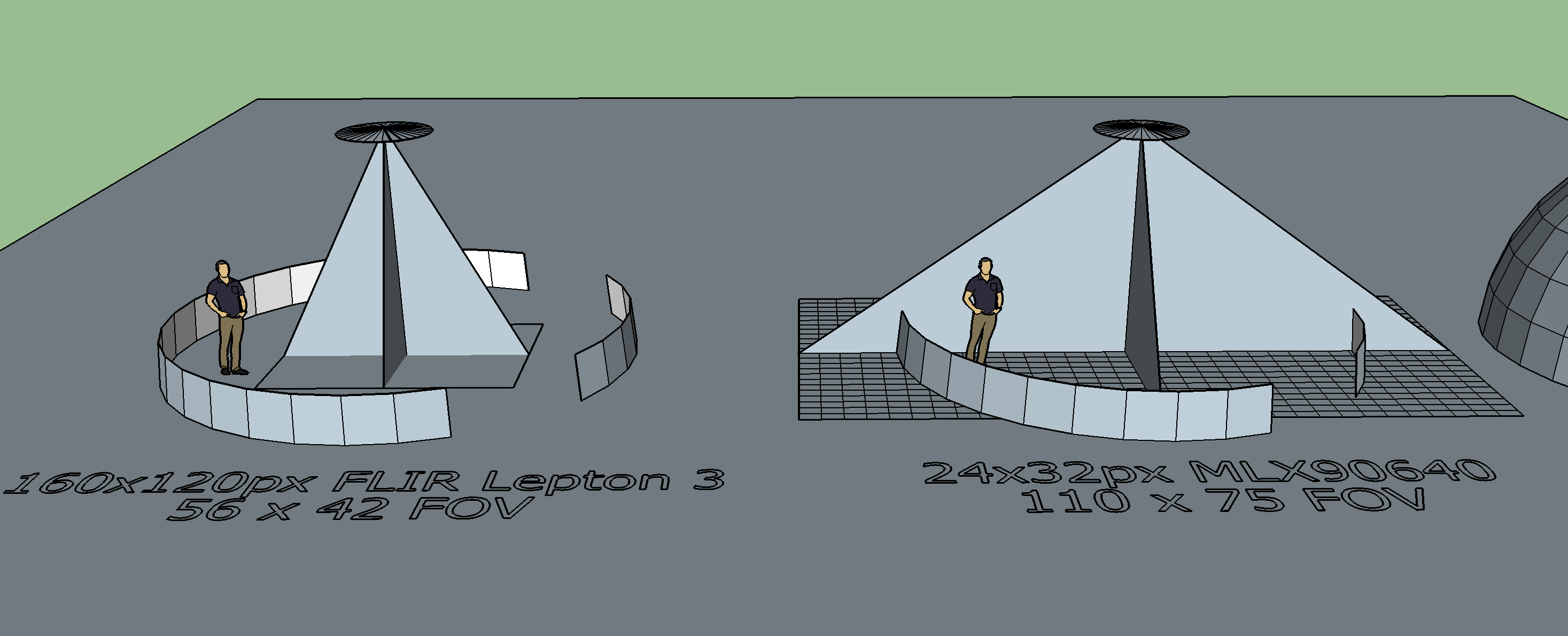

I have an MLX90640 thermal camera mounted 13 ft (about 4 m) above the ground. It has 32x24 resolution with a 110° x 75° field of view.

I am trying to narrow the temperature range from the sensor's full -40 °C to 300 °C down to a more useful 6 °C to 20 °C. I think I am doing this right but am not sure.
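The fixed-range mapping is a linear rescale followed by clipping: 6 °C maps to 0, 20 °C maps to 255, and anything outside that window saturates. This is the same map as the `depth_scale_factor` / `depth_scale_beta_factor` lines in the code below, since `scale * T + beta = 255 / (rangeMax - rangeMin) * (T - rangeMin)`. A minimal sketch on a plain float array (`temps_to_uint8` is a hypothetical helper name). Working in floats matters here: 14 °C spread over 256 levels is about 0.055 °C per level, so truncating readings to whole degrees first leaves only 15 distinct levels.

```python
import numpy as np

def temps_to_uint8(temps, t_min=6.0, t_max=20.0):
    """Linearly map temperatures (deg C) in [t_min, t_max] to 0..255.

    Readings below t_min become 0 and readings above t_max become 255.
    """
    temps = np.asarray(temps, dtype=np.float64)
    scaled = (temps - t_min) * (255.0 / (t_max - t_min))
    # Round before casting so a value like 254.9999 does not truncate to 254
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)

print(temps_to_uint8([-40.0, 6.0, 13.0, 20.0, 300.0]))
```

The clip limits are what make the sensor's full -40 °C to 300 °C range irrelevant: only the 6-20 °C window survives.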

I am trying to determine whether contour detection or blob detection would be better for counting the number of people in the room. I am new to OpenCV and am not sure if I am using the image filters in the best way.

I posted the code below. Any advice or recommendations are appreciated.

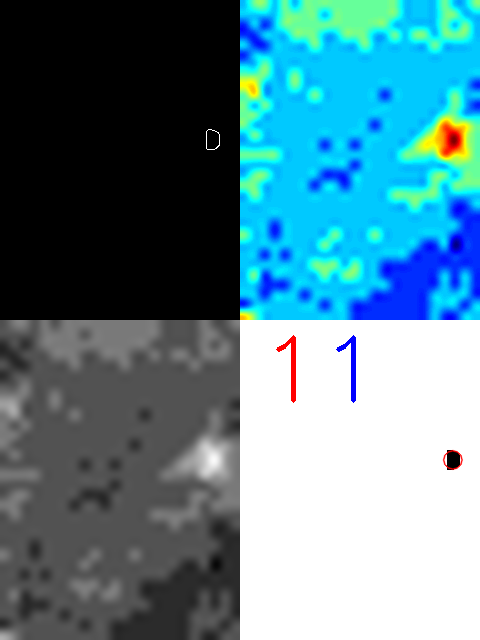

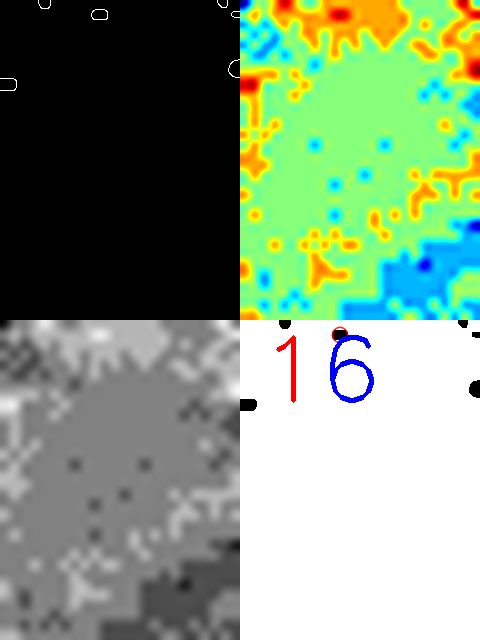

Image of just one person.

The sensor sometimes returns strange data, such as extra hotspots, as in the image below. It is still the same single person, but the temperatures have fluctuated. The blob detector counts 1, while the contour method counts 6.

```python
import sys
import os
import time
import colorsys
import datetime

import numpy as np
import cv2
from PIL import Image

sys.path.insert(0, "./build/lib.linux-armv7l-3.5")
import MLX90640 as mlx


def irCounter():
    mlx.setup(8)  # set frame rate of MLX90640
    f = mlx.get_frame()
    mlx.cleanup()

    # Get max and min temps from sensor
    v_min = min(f)
    v_max = max(f)

    # Console output for testing
    textTime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')  # get timestamp
    print(textTime)
    print(v_min)
    print(v_max)
    print("")

    # Copy the raw temperatures (deg C) into a 32x24 (rows x cols) float array.
    # Keep them as floats: int() into an 8-bit image would drop the fractions
    # of a degree that matter in a 6-20 C window.
    imgIR = np.zeros((32, 24), dtype=np.float32)
    for x in range(24):
        for y in range(32):
            imgIR[y, x] = f[32 * (23 - x) + y]

    # Map the sensor's -40 C to 300 C range onto a more human range
    # Sensor seems to read a bit cold, calibrate in final setting
    rangeMin = 6   # low threshold temp in C
    rangeMax = 20  # high threshold temp in C

    # Linear map rangeMin -> 0, rangeMax -> 255, then clip values outside the window
    depth_scale_factor = 255.0 / (rangeMax - rangeMin)
    depth_scale_beta_factor = -rangeMin * 255.0 / (rangeMax - rangeMin)
    depth_uint8 = imgIR * depth_scale_factor + depth_scale_beta_factor
    depth_uint8[depth_uint8 > 255] = 255
    depth_uint8[depth_uint8 < 0] = 0
    depth_uint8 = depth_uint8.astype('uint8')

    # Increase the 24x32 px image to 240x320 px for ease of seeing (dsize is (width, height))
    bigIR = cv2.resize(depth_uint8, dsize=(240, 320), interpolation=cv2.INTER_CUBIC)

    # Normalize the image. NORM_MINMAX stretches this frame's own min..max to 0..255,
    # so when the warmest pixel is below rangeMax it overrides the fixed 6-20 C map
    # and can amplify small temperature fluctuations.
    normIR = cv2.normalize(bigIR, bigIR, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)

    # Apply a color heat map (for display)
    colorIR = cv2.applyColorMap(normIR, cv2.COLORMAP_JET)

    # Use a bilateral filter to blur while hopefully retaining edges
    brightBlurIR = cv2.bilateralFilter(normIR, 9, 150, 150)

    # Threshold the image to black and white
    retval, threshIR = cv2.threshold(brightBlurIR, 210, 255, cv2.THRESH_BINARY)

    # Define kernel for erosion, dilation and closing operations
    kernel = np.ones((5, 5), np.uint8)
    erosionIR = cv2.erode(threshIR, kernel, iterations=1)
    dilationIR = cv2.dilate(erosionIR, kernel, iterations=1)
    closingIR = cv2.morphologyEx(dilationIR, cv2.MORPH_CLOSE, kernel)

    # Detect edges with Canny detection, currently only for visual testing not counting
    edgesIR = cv2.Canny(closingIR, 50, 70, L2gradient=True)

    # Detect contours. OpenCV 3.x returns (image, contours, hierarchy) and
    # 4.x returns (contours, hierarchy); [-2:] works with both.
    contours, hierarchy = cv2.findContours(closingIR, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)[-2:]

    # Get the number of contours ( contours count ...
```
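The nested `for x` / `for y` loop that copies the frame into the image can also be written as a single NumPy reshape. A minimal sketch, assuming `mlx.get_frame()` returns the 768 temperatures as a flat sequence, which is what the index formula `f[32 * (23 - x) + y]` implies; `frame_to_array` is a hypothetical helper, and the loop version is included only to check that both give the same layout.

```python
import numpy as np

def frame_to_array(f):
    """Return the frame as a (32, 24) float32 array with the loop's layout.

    The loop sets pixel (column x, row y) = f[32 * (23 - x) + y].
    Reshaping f to (24, 32) gives a[r, c] = f[32 * r + c], so the image at
    [y, x] is a[23 - x, y]: flip the rows, then transpose.
    """
    a = np.asarray(f, dtype=np.float32).reshape(24, 32)
    # The transpose is a non-contiguous view; make it contiguous for OpenCV calls
    return np.ascontiguousarray(a[::-1, :].T)

def frame_to_array_loop(f):
    """Reference version using the same nested loop as the code above."""
    out = np.zeros((32, 24), dtype=np.float32)
    for x in range(24):
        for y in range(32):
            out[y, x] = f[32 * (23 - x) + y]
    return out

frame = [20.0 + 0.01 * i for i in range(768)]  # synthetic temperatures
print(np.array_equal(frame_to_array(frame), frame_to_array_loop(frame)))
```

Besides being shorter, this avoids 768 Python-level assignments per frame, which may matter on a Raspberry Pi class board.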

You should try a visible-light camera with the same field of view (a fisheye camera) and resize its image to 32x24. I think it's not possible.

@LBerger

I have a 1080p visible-spectrum camera that I could mount for testing. Do you mean that I should capture at 1080p and then downsample to 32x24?

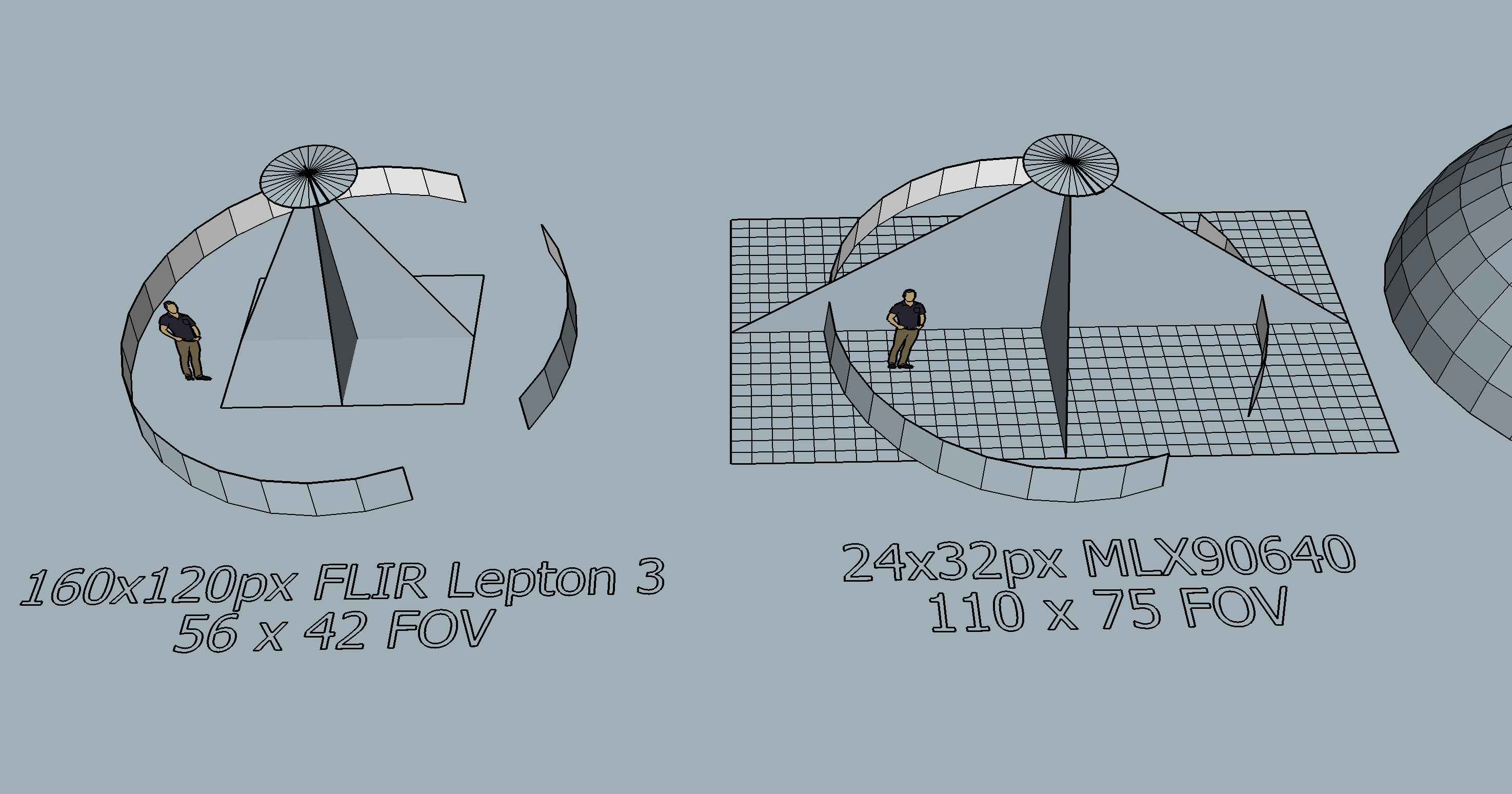

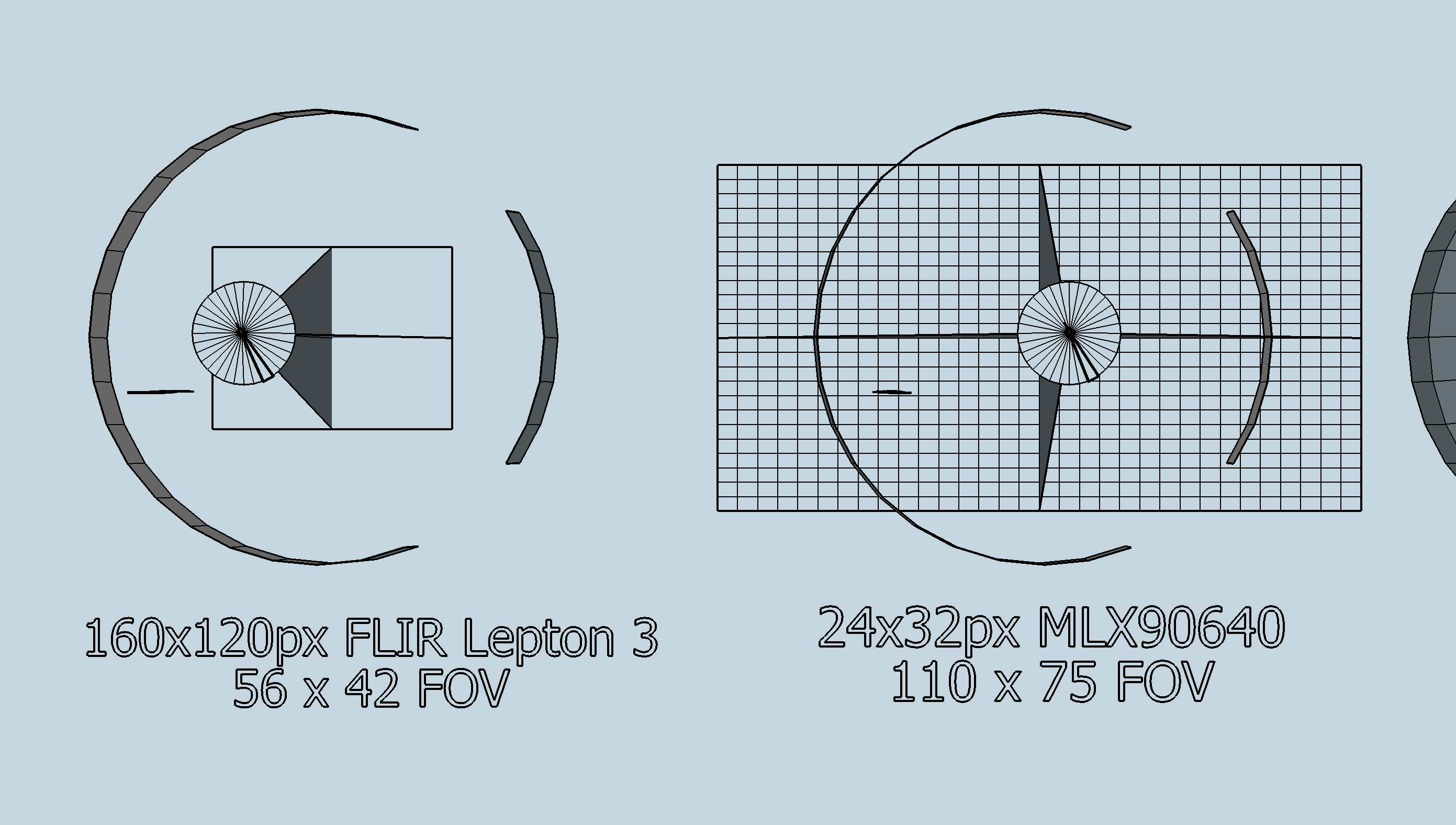

I also have the option of a FLIR Lepton 3, which offers 160 x 120 resolution, but it has a much narrower field of view.

I edited the post with images showing both sensors' fields of view. I would love to have the resolution of the FLIR, but I don't know how to cover the area I need, as shown in the new images.
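The coverage question can be estimated with simple geometry. A minimal sketch, assuming a downward-facing camera over a flat floor with no lens distortion (a pinhole model, which a 110° lens only approximates): along one axis the footprint is `2 * height * tan(fov / 2)`. `ground_coverage` is a hypothetical helper; the Lepton's field of view is not given in this thread, so only the MLX90640 figures from the post are plugged in.

```python
import math

def ground_coverage(height, fov_deg, pixels):
    """Return (footprint width, size of one pixel) on the floor along one axis.

    Pinhole model, camera pointing straight down: width = 2 * h * tan(fov / 2).
    Units follow `height`.
    """
    width = 2.0 * height * math.tan(math.radians(fov_deg) / 2.0)
    return width, width / pixels

# MLX90640 figures from the post: 13 ft up, 110 x 75 degree FOV, 32 x 24 pixels
w, px = ground_coverage(13.0, 110.0, 32)
h, py = ground_coverage(13.0, 75.0, 24)
print(f"{w:.1f} ft x {h:.1f} ft of floor, about {px:.2f} x {py:.2f} ft per pixel")
```

For the MLX90640 this works out to roughly 37 ft x 20 ft of floor and a little over 1 ft per pixel, so a person seen from above spans only a couple of pixels. Running the same function with the Lepton's field of view and 160 x 120 pixels gives its footprint, and from that how many units would be needed to tile the same area.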

Thank you for the advice.

You can detect that something is changing using absdiff. As for the sensor that "sometimes gives back strange data", it could be the internal shutter.