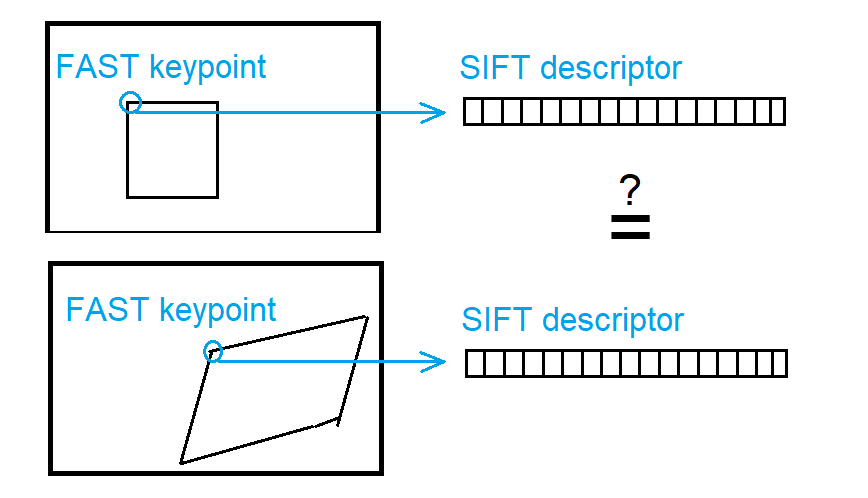

But when does it gain this characteristic? If I were to detect keypoints with the FastFeatureDetector and get descriptors with the SiftDescriptorExtractor, would the descriptors be the same for the same keypoint seen from a different viewpoint?

Yes, the descriptors should be the same (or almost the same) for the same point seen from a different view. Outliers still occur, and you have to filter them out later. The descriptors are therefore generated only at very distinctive points (local extrema in a difference-of-Gaussians pyramid).
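One common way to filter such outliers is Lowe's ratio test. This is my choice for illustration, not something the steps above prescribe. Below is a minimal sketch in plain C++. `TwoNearest` and `ratioTestFilter` are hypothetical names, and the 0.75 threshold is an assumed default that you would tune for your data.

```cpp
#include <vector>

// Hypothetical record (not an OpenCV type): for one query descriptor, the
// distances to its nearest and second-nearest descriptors in the other image.
struct TwoNearest {
    int queryIdx;      // index of the descriptor in the first image
    int trainIdx;      // index of its best match in the second image
    float best;        // distance to the nearest descriptor
    float secondBest;  // distance to the second-nearest descriptor
};

// Keep a match only if the best distance is clearly smaller than the
// second-best one. Ambiguous matches (similar distances) are dropped.
// The ratio 0.75 is an assumed default, not a fixed rule.
std::vector<TwoNearest> ratioTestFilter(const std::vector<TwoNearest>& candidates,
                                        float ratio = 0.75f) {
    std::vector<TwoNearest> kept;
    for (const auto& c : candidates) {
        if (c.best < ratio * c.secondBest) {
            kept.push_back(c);
        }
    }
    return kept;
}
```

With OpenCV you would typically obtain the two nearest neighbours per descriptor from a k-nearest-neighbour match with k = 2 and apply the same comparison.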

The classical SIFT descriptor is a vector containing the values of the orientation histogram entries.

It is explained very well in the original paper by Lowe and on various websites (even on Wikipedia: https://en.wikipedia.org/wiki/Scale-i...)

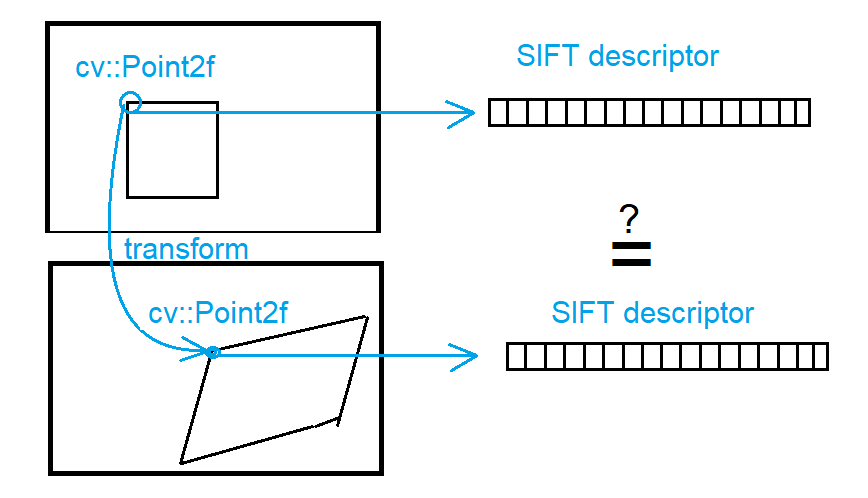

If I convert cv::KeyPoint to cv::Point2f to transform the keypoints into another viewpoint, and then convert the transformed cv::Point2f back to cv::KeyPoint, would the information in the cv::KeyPoint be the same as if the keypoint at the same place had been detected by SiftFeatureDetector?

If you convert KeyPoint to Point2f, some information gets lost.

cv::KeyPoint has additional attributes: angle, class_id, octave, response, and size, which are useful for matching (https://docs.opencv.org/3.2.0/d2/d29/...). They are discarded when you convert to Point2f with cv::KeyPoint::convert, although in my experience the conversion is sometimes necessary. You can try to keep these values in separate vectors.
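An alternative to separate vectors is to copy each keypoint and overwrite only its position, so the other attributes travel along. Below is a minimal sketch of that idea. `KeyPointLike` is a hypothetical stand-in that mirrors the fields of cv::KeyPoint so the snippet compiles without OpenCV, and `Homography` / `transformKeyPoints` are illustrative names. The 3x3 homography between the two views is assumed to be known.

```cpp
#include <array>
#include <vector>

// Hypothetical stand-in mirroring the public fields of cv::KeyPoint.
// With OpenCV you would use cv::KeyPoint itself and update kp.pt.
struct KeyPointLike {
    float x = 0.f, y = 0.f;  // position (cv::KeyPoint::pt)
    float size = 0.f;        // diameter of the meaningful neighbourhood
    float angle = -1.f;      // orientation in degrees, -1 if not computed
    float response = 0.f;    // detector response
    int octave = 0;          // pyramid layer the keypoint came from
    int class_id = -1;       // optional object id
};

// Row-major 3x3 homography; assumed to be known for the two views.
using Homography = std::array<double, 9>;

// Copy every keypoint (all attributes included) and replace only the
// position with its projection through H.
std::vector<KeyPointLike> transformKeyPoints(const std::vector<KeyPointLike>& in,
                                             const Homography& H) {
    std::vector<KeyPointLike> out = in;  // keeps size, angle, octave, ...
    for (auto& kp : out) {
        const double x = kp.x;
        const double y = kp.y;
        const double w = H[6] * x + H[7] * y + H[8];  // projective scale
        kp.x = static_cast<float>((H[0] * x + H[1] * y + H[2]) / w);
        kp.y = static_cast<float>((H[3] * x + H[4] * y + H[5]) / w);
    }
    return out;
}
```

Note that size and angle are carried over unchanged here. After a strong change of viewpoint they will generally differ from what a detector would report at the new location, so the result is not guaranteed to equal a freshly detected keypoint.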