Cannot Reproduce Results of Feature Matching with FLANN Tutorial

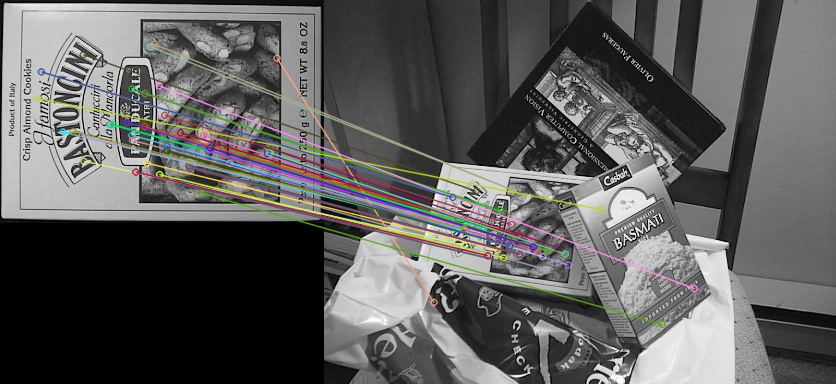

I installed OpenCV 3.3.1 on OS X 10.11.6 using MacPorts. I copied the code from the tutorial at https://docs.opencv.org/3.1.0/d5/d6f/.... I copied the images from https://github.com/opencv/opencv/blob... (and box). I built and ran the executable, but got poor feature matching that did not look like the image shown on the tutorial page.

Is the tutorial code possibly out of sync with the sample result images, or is there a problem with OpenCV 3.3.1 in MacPorts?

The reason I'm asking is that I was writing my own OpenCV code to perform feature matching but could not get good performance, even when tuning threshold parameters, trying different matching algorithms, etc. So I backed up from my code to the tutorial and discovered I'm still seeing the same poor results. I get unreliable feature matches that look a lot more like random correspondences.

Can someone verify whether the tutorial is working as advertised? I'm trying to isolate what my problem is.

Tested with the latest master on Windows.

56 good keypoints only, so it's not working as advertised.

Okay, that's a lot like what I'm seeing. And my "good" matches aren't all really corresponding features, either.

Six?

@LBerger, indeed, 6. I simply can't count on Wednesdays.

Is the loop wrong?

Shouldn't it be `for( int i = 0; i < matches.size(); i++ )`? Maybe I'm tired.

Yes, probably.

Though here it's the same number (img1 is the smaller image, so it has fewer keypoints, and the number of matches is min(kp1, kp2)).

It might not even find a match for each original keypoint.
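For reference, here is a minimal sketch of the tutorial's "good matches" filter with both loops bounded by `matches.size()` instead of the descriptor row count. It is plain C++ so it compiles without OpenCV: `Match` is a hypothetical stand-in for `cv::DMatch`, and `filterByMinDistance` is a made-up name. The `max(2 * min_dist, 0.02)` threshold mirrors the heuristic in the 3.x tutorial code; note that it only keeps matches within a factor of two of the best one, which says nothing about whether the best one is itself correct.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch: only the fields the filter needs.
struct Match {
    int queryIdx;    // descriptor index in the query image (img1)
    int trainIdx;    // matched descriptor index in the train image (img2)
    float distance;  // descriptor distance; smaller means more similar
};

// Keep matches whose distance is <= max(2 * minDist, floor), as in the
// tutorial heuristic. Both loops iterate over matches.size(), so the code
// does not assume anything about the query descriptor matrix.
std::vector<Match> filterByMinDistance(const std::vector<Match>& matches,
                                       double floor = 0.02) {
    assert(floor >= 0.0);
    std::vector<Match> good;
    if (matches.empty()) return good;

    // Pass 1: smallest distance among all matches.
    double minDist = matches[0].distance;
    for (std::size_t i = 1; i < matches.size(); ++i)
        minDist = std::min(minDist, static_cast<double>(matches[i].distance));

    // Pass 2: keep everything within the threshold.
    const double threshold = std::max(2.0 * minDist, floor);
    for (std::size_t i = 0; i < matches.size(); ++i)
        if (matches[i].distance <= threshold) good.push_back(matches[i]);
    return good;
}
```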

Maybe, but don't change FlannBasedMatcher to `BFMatcher matcher(detector->defaultNorm(), true);`
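A sketch of what the second argument (`crossCheck = true`) asks `BFMatcher` to do: keep the pair (i, j) only when j is the nearest neighbour of query descriptor i and i is, in turn, the nearest neighbour of train descriptor j. This is a plain C++ illustration on small float vectors with L2 distance, not OpenCV's implementation; `Match`, `Descriptor`, and `crossCheckMatch` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct Match { int queryIdx; int trainIdx; float distance; };

using Descriptor = std::vector<float>;

// Squared L2 distance; monotonic in L2, so fine for picking nearest neighbours.
static float sqL2(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float s = 0.f;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const float d = a[k] - b[k];
        s += d * d;
    }
    return s;
}

// Index of the descriptor in `set` nearest to `d`, or -1 if `set` is empty.
static int nearest(const Descriptor& d, const std::vector<Descriptor>& set) {
    int best = -1;
    float bestDist = std::numeric_limits<float>::max();
    for (std::size_t j = 0; j < set.size(); ++j) {
        const float dist = sqL2(d, set[j]);
        if (dist < bestDist) { bestDist = dist; best = static_cast<int>(j); }
    }
    return best;
}

// Brute-force matching with a cross-check: keep (i, j) only if j is i's
// nearest neighbour in `train` AND i is j's nearest neighbour in `query`.
std::vector<Match> crossCheckMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train) {
    std::vector<Match> out;
    for (std::size_t i = 0; i < query.size(); ++i) {
        const int j = nearest(query[i], train);
        if (j < 0) continue;  // empty train set
        const Descriptor& t = train[static_cast<std::size_t>(j)];
        if (nearest(t, query) == static_cast<int>(i))
            out.push_back({static_cast<int>(i), j, std::sqrt(sqL2(query[i], t))});
    }
    return out;
}
```

Because the surviving pairs are one-to-one, the result has at most min(kp1, kp2) entries and is often smaller than that.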

@DaleWD, maybe you should post an issue and link to this post.

Funny, the Python version gives better results (more matches).

Is it about the default values (params) of the matcher?

It's really weird. I tried SIFT and SURF, the BFMatcher with k-nearest neighbors plus Lowe's ratio test, different SIFT contrast thresholds, different numbers of layers per octave, and different matchers; I can't seem to make any combination of them work well.
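For comparison, a minimal sketch of the k-nearest-neighbour + ratio-test filter mentioned above, assuming k = 2 and each query's candidates sorted by increasing distance (the shape `knnMatch` fills into a `std::vector<std::vector<cv::DMatch>>`). `Match` again stands in for `cv::DMatch`, `ratioTest` is a hypothetical name, and the 0.75 default is a commonly used value, not one taken from this thread.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct Match { int queryIdx; int trainIdx; float distance; };

// Lowe-style ratio test: for each query descriptor, keep the best candidate
// only if it is clearly closer than the second-best candidate.
std::vector<Match> ratioTest(const std::vector<std::vector<Match>>& knn,
                             float ratio = 0.75f) {
    assert(ratio > 0.f && ratio <= 1.f);
    std::vector<Match> good;
    for (const auto& candidates : knn) {
        // Design choice: with fewer than two candidates there is nothing to
        // compare against, so the query is dropped rather than accepted.
        if (candidates.size() < 2) continue;
        if (candidates[0].distance < ratio * candidates[1].distance)
            good.push_back(candidates[0]);
    }
    return good;
}
```

Lowering `ratio` keeps fewer but more distinctive matches; raising it toward 1 approaches plain nearest-neighbour matching.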

I would post an issue, but I'm at work right now. Thanks for verifying it's not entirely me...