Generalized Hough Transform (Guil) - Improving Speed

I am using OpenCV 3.1 with VS2012 C++/CLI on Windows 10, on an Intel i7 with 16 GB of RAM (in short, a pretty fast rig).

I am trying to use the Guil variant of the Generalized Hough Transform, which handles translation, rotation, and scale. I am following this example: https://github.com/Tetragramm/opencv/... My code uses all the default settings shown in the example.

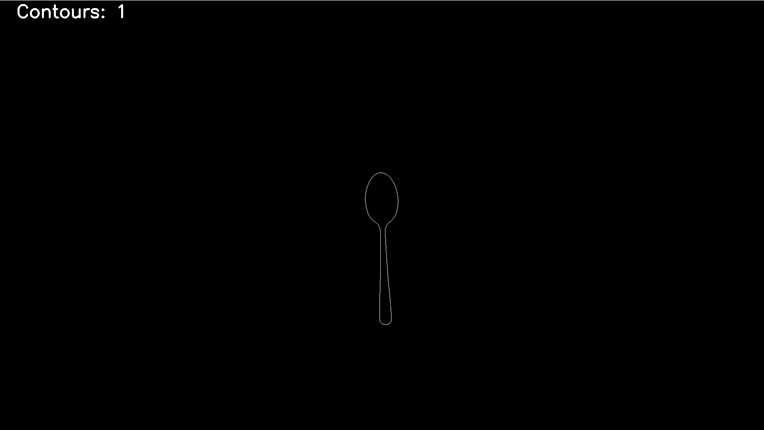

To test things out, I used the following image (minus the text) as both the loaded image and the query image:

This is a full HD image from which the contours have been extracted. When I ran the GHT-Guil `detect` function, it took 336 seconds (more than 5 minutes) to return.
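My guess is that part of the cost comes from the number of rotation and scale combinations being searched. Below is a rough sketch of that arithmetic in plain C++. It is not OpenCV code: `count_steps` and `hypothesis_count` are hypothetical helpers written only for illustration, and the way they count grid points is an assumption, not necessarily how the library iterates internally.

```cpp
#include <cassert>
#include <cmath>

// Number of discrete values visited when stepping from min_value to
// max_value (inclusive) in increments of step.
long count_steps(double min_value, double max_value, double step) {
    assert(step > 0.0 && max_value >= min_value);
    // Small epsilon guards against floating-point error in the division.
    return static_cast<long>(std::floor((max_value - min_value) / step + 1e-9)) + 1;
}

// Rough count of (rotation, scale) combinations in the search grid.
// Assumption: the cost grows with the product of the two step counts.
long hypothesis_count(double min_angle, double max_angle, double angle_step,
                      double min_scale, double max_scale, double scale_step) {
    return count_steps(min_angle, max_angle, angle_step) *
           count_steps(min_scale, max_scale, scale_step);
}
```

For example, assuming an angle range of 0 to 360 degrees in 1-degree steps and a scale range of 0.5 to 2.0 in steps of 0.05 (illustrative values that may not match the example's settings), `hypothesis_count(0.0, 360.0, 1.0, 0.5, 2.0, 0.05)` gives 361 × 31 = 11191 combinations, whereas ±10 degrees with the same angle step, `count_steps(-10.0, 10.0, 1.0)`, covers only 21 angles.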

Is this expected? Is there anything I can do to speed it up?

I also have an NVIDIA GTX 760 card and could use the GPU version of the Guil implementation. Is there any information on what kind of speedup I should expect if I do?

Thanks for any information.