
| 1 | initial version |

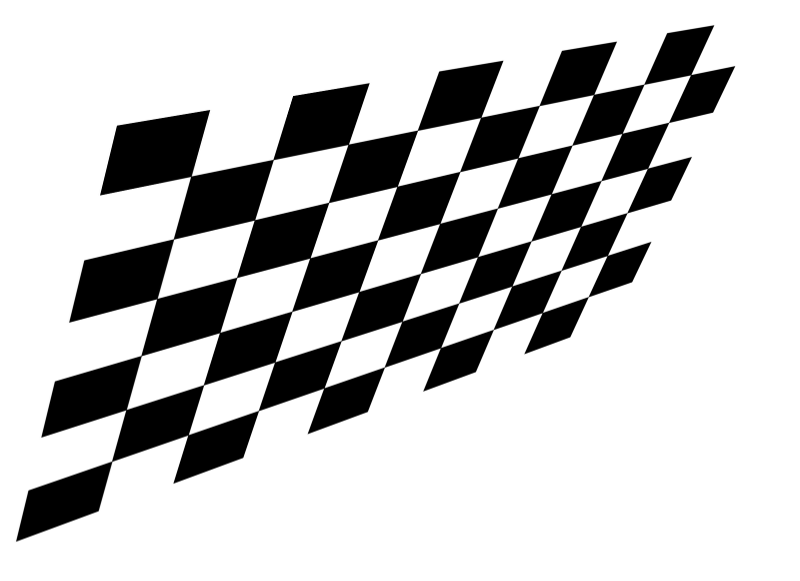

Have a look at the code below. It certainly needs some optimization, but it should give you an idea of how to approach your problem. Note that I used a different image with a different perspective, but I do not think that changes much.

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

int main()

{

// Load image

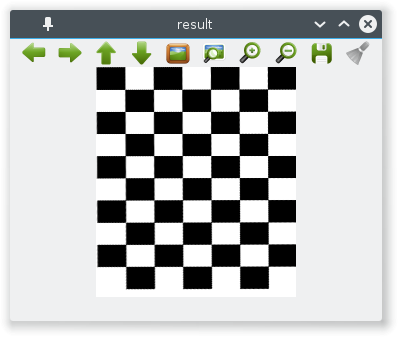

Mat img = imread("chessboard.png");

// Check if image is loaded successfully

if(!img.data || img.empty())

{

cout << "Problem loading image!!!" << endl;

return EXIT_FAILURE;

}

imshow("src", img);

// Convert image to grayscale

Mat gray;

cvtColor(img, gray, COLOR_BGR2GRAY);

// Convert image to binary

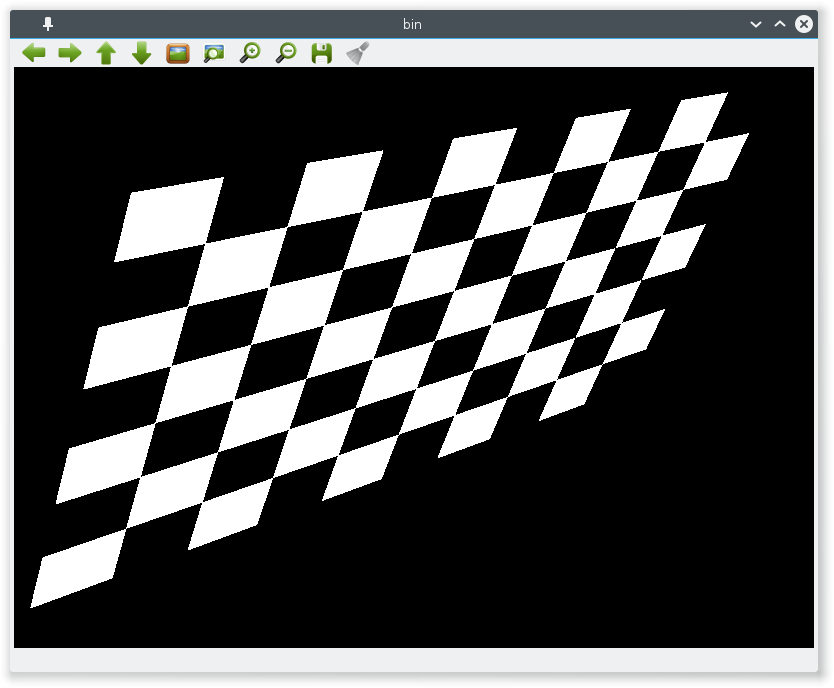

Mat bin;

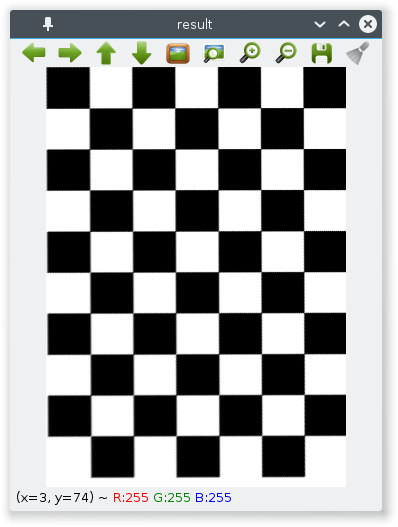

// With THRESH_OTSU the threshold is computed automatically, so the value 50 is ignored
threshold(gray, bin, 50, 255, THRESH_BINARY_INV | THRESH_OTSU);

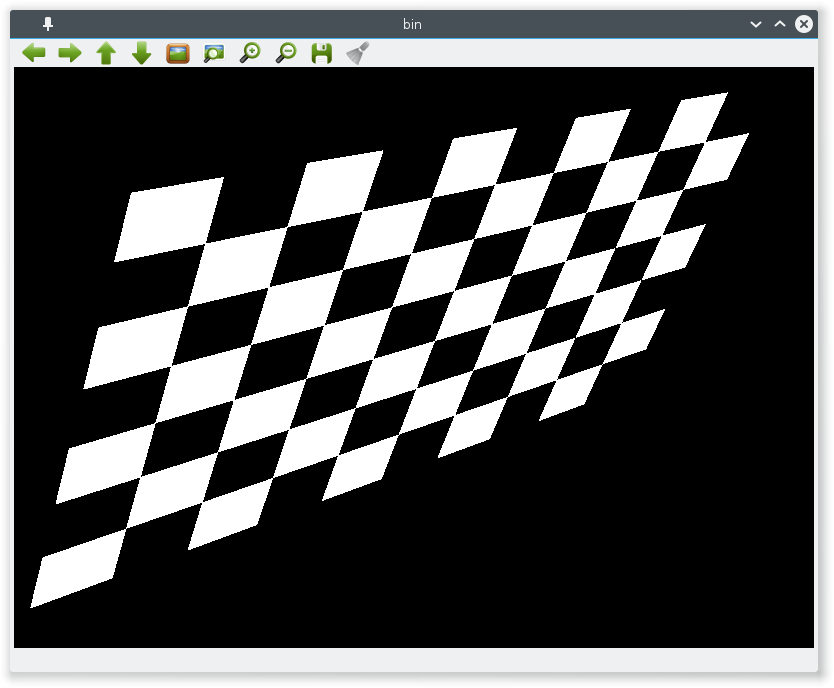

imshow("bin", bin);

// Dilate a bit in order to fill any gap between the joints

Mat kernel = Mat::ones(2, 2, CV_8UC1);

dilate(bin, bin, kernel);

// imshow("dilate", bin);

// Find external contour

vector<Vec4i> hierarchy;

std::vector<std::vector<cv::Point> > contours;

cv::findContours(bin.clone(), contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point(0, 0));

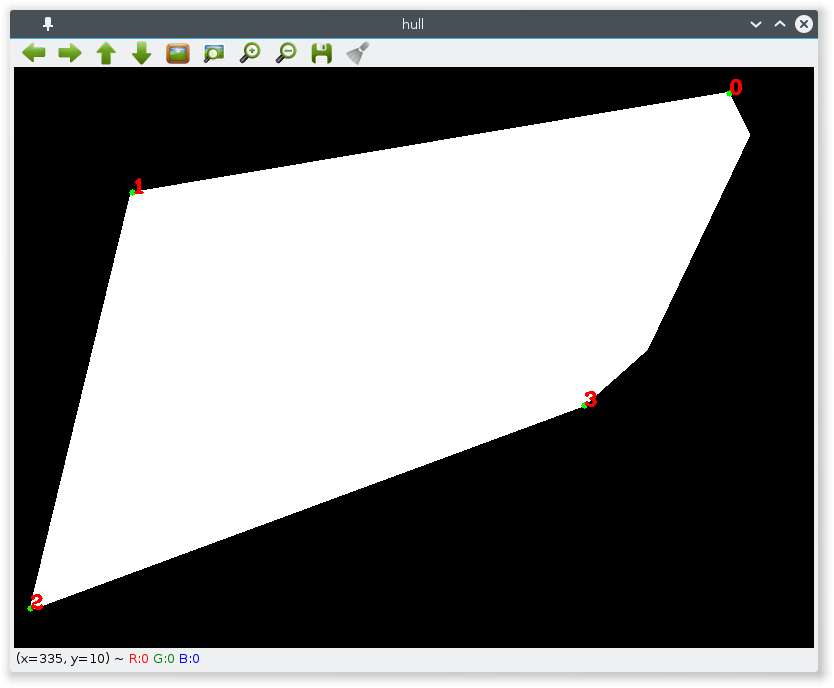

// Find the convex hull object of the external contour

vector<vector<Point> >hull( contours.size() );

for( size_t i = 0; i < contours.size(); i++ )

{ convexHull( Mat(contours[i]), hull[i], false ); }

// We'll put the labels in this destination image

cv::Mat dst = Mat::zeros(bin.size(), CV_8UC3);

// Draw the contour as a solid blob filling also any convexity defect with the extracted hulls

for (size_t i = 0; i < contours.size(); i++)

drawContours( dst, hull, i, Scalar(255, 255, 255), FILLED, 8, vector<Vec4i>(), 0, Point() );

// Extract the new blob and the approximation curve that represents it

Mat bw;

cvtColor(dst, bw, COLOR_BGR2GRAY);

cv::findContours(bw.clone(), contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point(0, 0));

// The array for storing the approximation curve

std::vector<cv::Point> approx;

Mat src = img.clone();

for (size_t i = 0; i < contours.size(); i++)

{

// Approximate the contour with approxPolyDP, using an accuracy

// proportional to the contour perimeter. The third argument,

// epsilon, is the maximum allowed distance between the contour

// and its approximation. Epsilon must be chosen carefully

// to get the correct output.

double epsilon = cv::arcLength(cv::Mat(contours[i]), true) * 0.02; // epsilon = 2% of arc length

cv::approxPolyDP(

cv::Mat(contours[i]),

approx,

epsilon,

true

);

cout << "approx: " << approx.size() << endl;

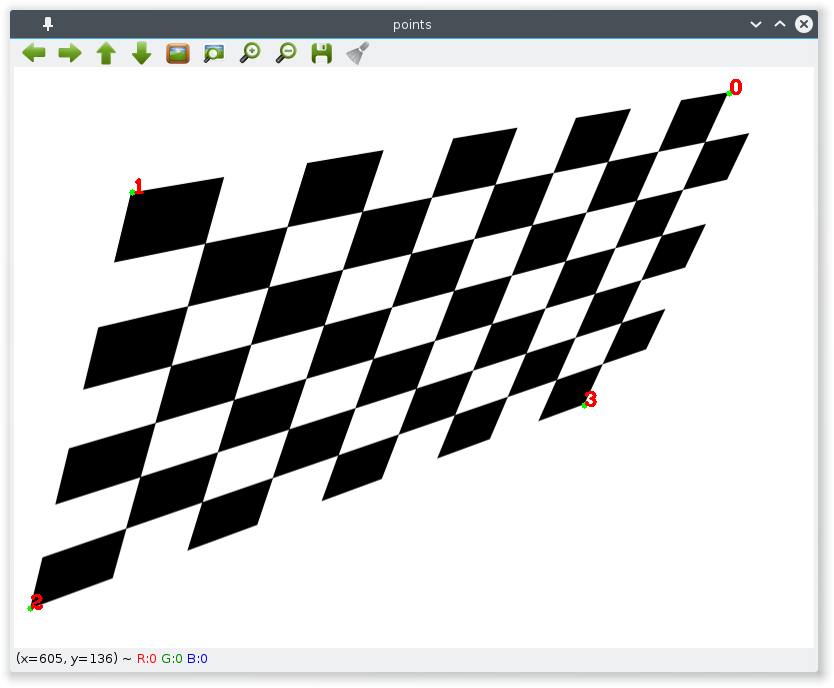

// Visualize the result

for(size_t j = 0; j < approx.size(); j++)

{

string text = to_string(static_cast<int>(j));

circle(src, approx[j], 3, Scalar(0, 255, 0), FILLED);

circle(dst, approx[j], 3, Scalar(0, 255, 0), FILLED);

putText(src, text, approx[j], FONT_HERSHEY_COMPLEX_SMALL, 1, Scalar( 0, 0, 255 ), 2);

putText(dst, text, approx[j], FONT_HERSHEY_COMPLEX_SMALL, 1, Scalar( 0, 0, 255 ), 2);

}

}

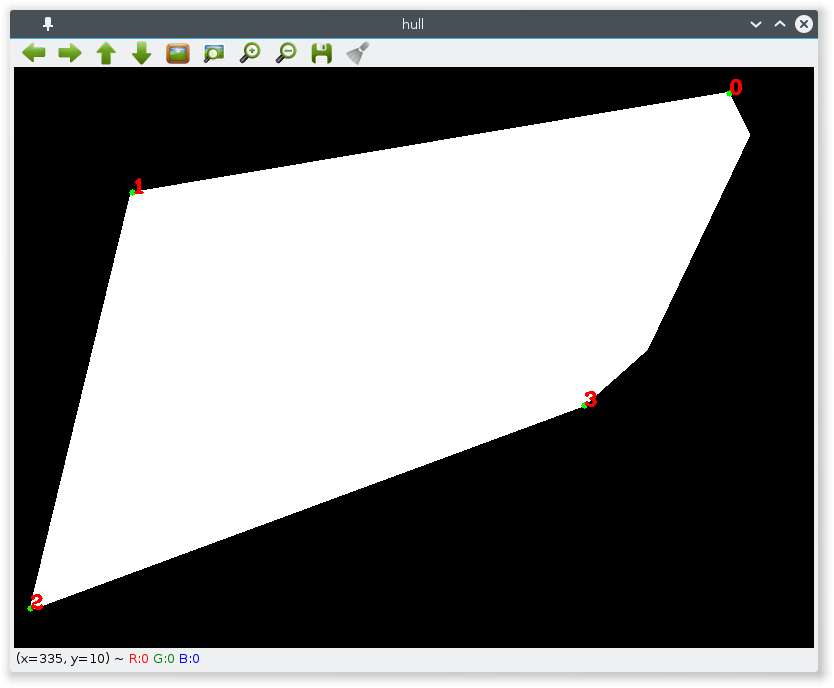

imshow("hull", dst);

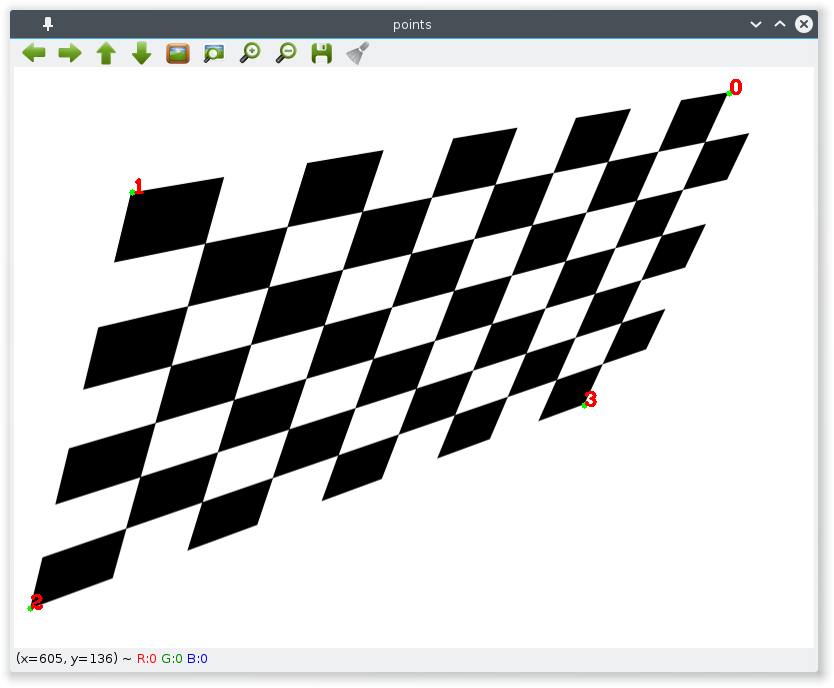

imshow("points", src);

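// TODO: find a more automated way to order the points and extract the perspective.

// The corner indices below were picked by hand for this image, and approx only

// holds the polygon of the last contour processed in the loop above.

if (approx.size() != 4)

{

cout << "Expected 4 corners, got " << approx.size() << endl;

return EXIT_FAILURE;

}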

vector<Point2f> p,q;

p.push_back(approx[2]);

q.push_back(Point2f(0,0));

p.push_back(approx[1]);

q.push_back(Point2f(200,0));

p.push_back(approx[0]);

q.push_back(Point2f(200,200));

p.push_back(approx[3]);

q.push_back(Point2f(0,200));

Mat rotation = cv::getPerspectiveTransform(p,q);

Mat result;

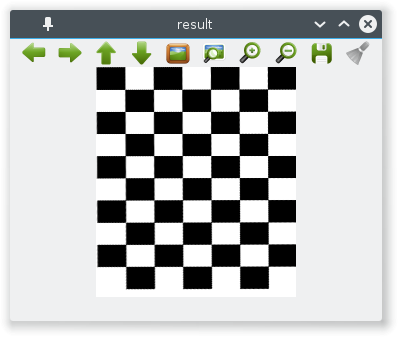

cv::warpPerspective(img, result, rotation, Size(200,230));

imshow("result",result);

waitKey(0);

return 0;

}
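The hand-picked indices (`approx[2]`, `approx[1]`, ...) only work for this particular image. One common way to automate the ordering is to use the sums and differences of the coordinates; the following is a minimal sketch of that idea, not part of the original answer. `Pt` and `orderCorners` are hypothetical names, and `Pt` is only a stand-in for `cv::Point2f` so that the snippet compiles without OpenCV.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f (assumption, keeps the sketch OpenCV-free).
struct Pt { float x; float y; };

// Order four corners as top-left, top-right, bottom-right, bottom-left.
// Image coordinates are assumed: x grows to the right, y grows downwards.
std::array<Pt, 4> orderCorners(const std::vector<Pt>& pts)
{
    assert(pts.size() == 4 && "expected exactly four corners");

    std::size_t tl = 0, tr = 0, br = 0, bl = 0;
    for (std::size_t i = 1; i < pts.size(); ++i) {
        const float sum  = pts[i].x + pts[i].y;
        const float diff = pts[i].y - pts[i].x;
        if (sum  < pts[tl].x + pts[tl].y) tl = i;  // smallest x + y
        if (sum  > pts[br].x + pts[br].y) br = i;  // largest  x + y
        if (diff < pts[tr].y - pts[tr].x) tr = i;  // smallest y - x
        if (diff > pts[bl].y - pts[bl].x) bl = i;  // largest  y - x
    }

    std::array<Pt, 4> ordered = {{ pts[tl], pts[tr], pts[br], pts[bl] }};
    return ordered;
}
```

With the corners ordered this way, they can be pushed into `p` in the same order as the destination points in `q`. Be aware that this heuristic can pick the wrong corner when the board is rotated by roughly 45 degrees, so treat it as a starting point only.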

| 2 | No.2 Revision |

Have a look at the code below. It certainly needs some optimization, but it should give you an idea of how to approach your problem. Note that I used a different image with a different perspective, but I do not think that changes much.

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

int main()

{

// Load image

Mat img = imread("chessboard.png");

// Check if image is loaded successfully

if(!img.data || img.empty())

{

cout << "Problem loading image!!!" << endl;

return EXIT_FAILURE;

}

imshow("src", img);

// Convert image to grayscale

Mat gray;

cvtColor(img, gray, COLOR_BGR2GRAY);

// Convert image to binary

Mat bin;

// With THRESH_OTSU the threshold is computed automatically, so the value 50 is ignored
threshold(gray, bin, 50, 255, THRESH_BINARY_INV | THRESH_OTSU);

imshow("bin", bin);

// Dilate a bit in order to fill any gap between the joints

Mat kernel = Mat::ones(2, 2, CV_8UC1);

dilate(bin, bin, kernel);

// imshow("dilate", bin);

// Find external contour

vector<Vec4i> hierarchy;

std::vector<std::vector<cv::Point> > contours;

cv::findContours(bin.clone(), contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point(0, 0));

// Find the convex hull object of the external contour

vector<vector<Point> >hull( contours.size() );

for( size_t i = 0; i < contours.size(); i++ )

{ convexHull( Mat(contours[i]), hull[i], false ); }

// We'll put the labels in this destination image

cv::Mat dst = Mat::zeros(bin.size(), CV_8UC3);

// Draw the contour as a solid blob filling also any convexity defect with the extracted hulls

for (size_t i = 0; i < contours.size(); i++)

drawContours( dst, hull, i, Scalar(255, 255, 255), FILLED, 8, vector<Vec4i>(), 0, Point() );

// Extract the new blob and the approximation curve that represents it

Mat bw;

cvtColor(dst, bw, COLOR_BGR2GRAY);

cv::findContours(bw.clone(), contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point(0, 0));

// The array for storing the approximation curve

std::vector<cv::Point> approx;

Mat src = img.clone();

for (size_t i = 0; i < contours.size(); i++)

{

// Approximate the contour with approxPolyDP, using an accuracy

// proportional to the contour perimeter. The third argument,

// epsilon, is the maximum allowed distance between the contour

// and its approximation. Epsilon must be chosen carefully

// to get the correct output.

double epsilon = cv::arcLength(cv::Mat(contours[i]), true) * 0.02; // epsilon = 2% of arc length

cv::approxPolyDP(

cv::Mat(contours[i]),

approx,

epsilon,

true

);

cout << "approx: " << approx.size() << endl;

// Visualize the result

for(size_t j = 0; j < approx.size(); j++)

{

string text = to_string(static_cast<int>(j));

circle(src, approx[j], 3, Scalar(0, 255, 0), FILLED);

circle(dst, approx[j], 3, Scalar(0, 255, 0), FILLED);

putText(src, text, approx[j], FONT_HERSHEY_COMPLEX_SMALL, 1, Scalar( 0, 0, 255 ), 2);

putText(dst, text, approx[j], FONT_HERSHEY_COMPLEX_SMALL, 1, Scalar( 0, 0, 255 ), 2);

}

}

imshow("hull", dst);

imshow("points", src);

// TODO: find a more automated way to order the points and extract the perspective.

// The corner indices below were picked by hand for this image, and approx only

// holds the polygon of the last contour processed in the loop above.

if (approx.size() != 4)

{

cout << "Expected 4 corners, got " << approx.size() << endl;

return EXIT_FAILURE;

}

vector<Point2f> p,q;

p.push_back(approx[2]);

q.push_back(Point2f(0,0));

p.push_back(approx[1]);

q.push_back(Point2f(300,0));

p.push_back(approx[0]);

q.push_back(Point2f(300,370));

p.push_back(approx[3]);

q.push_back(Point2f(0,370));

Mat rotation = cv::getPerspectiveTransform(p,q);

Mat result;

cv::warpPerspective(img, result, rotation, Size(300,420));

imshow("result",result);

waitKey(0);

return 0;

}
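The destination rectangle is hard-coded in both revisions. If you would rather derive it from the detected corners, one possible approach is to take the longer of each pair of opposite edges as the output width and height. This is only a sketch under that assumption; `Pt`, `SizeI` and `warpSize` are hypothetical names standing in for `cv::Point2f`, `cv::Size` and a helper of your own.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical stand-ins for cv::Point2f and cv::Size (assumptions, keep the sketch OpenCV-free).
struct Pt { float x; float y; };
struct SizeI { int width; int height; };

// Euclidean distance between two points.
static float dist(Pt a, Pt b)
{
    return std::hypot(a.x - b.x, a.y - b.y);
}

// Estimate the output size of the rectified image from the four ordered corners
// (top-left, top-right, bottom-right, bottom-left): use the longer of the two
// horizontal edges as width and the longer of the two vertical edges as height.
SizeI warpSize(Pt tl, Pt tr, Pt br, Pt bl)
{
    const float w = std::max(dist(tl, tr), dist(bl, br));
    const float h = std::max(dist(tl, bl), dist(tr, br));
    return { static_cast<int>(std::lround(w)), static_cast<int>(std::lround(h)) };
}
```

The resulting width and height could then replace the fixed values in `q` and in the `Size` passed to `warpPerspective`. Because of perspective foreshortening the true aspect ratio of the board is not recovered exactly this way, so a known ratio is preferable when you have one.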