

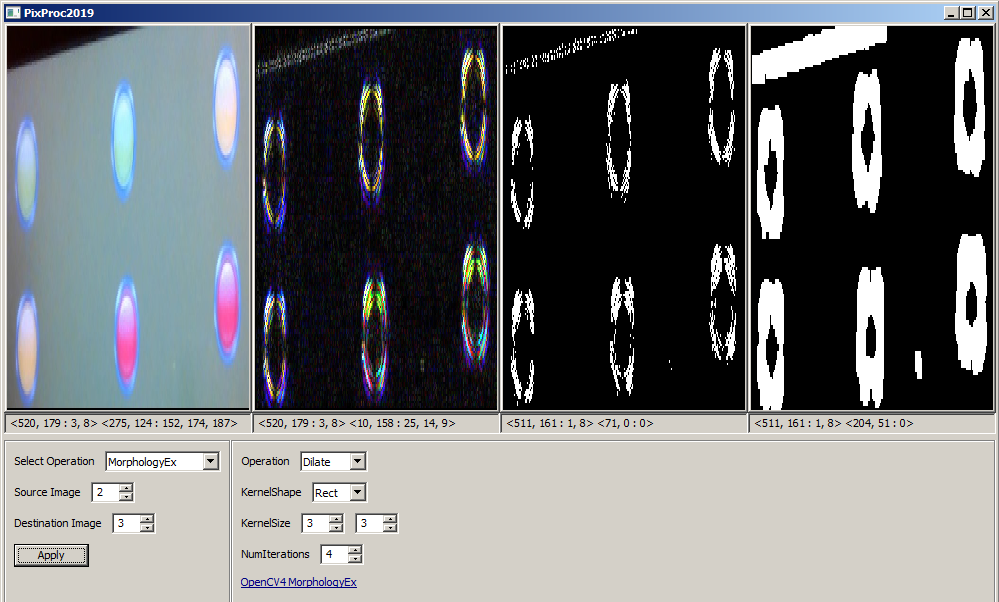

These steps seem to work well; the image shows each stage from left to right:

1. Start with the original image.
2. Apply a Sobel filter with a 5x5 kernel and first-order derivatives in both x and y.
3. Convert to mono (single channel) and threshold at about 80.
4. Dilate with a 3x3 rectangular kernel for 4 iterations.

From here, you can filter contours by aspect ratio and size so that only the powdered donuts are left.
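The contour filter can be sketched roughly as follows. This is only an illustration in plain C++: `Box` stands in for whatever `cv::boundingRect` returns for each contour, and the names `BlobLimits` and `filterBlobs` as well as every limit value are assumptions, not numbers from the steps above. They would need tuning for the actual image size.

```cpp
#include <vector>

// Plain stand-in for the bounding box of one contour
// (with OpenCV this would come from cv::boundingRect).
struct Box {
    int x, y, width, height;
};

// Hypothetical limits; tune them for the real image resolution.
struct BlobLimits {
    double minAspect = 0.75;  // lower bound on width / height
    double maxAspect = 1.33;  // upper bound on width / height
    int    minArea   = 100;   // bounding-box area in pixels
    int    maxArea   = 5000;  // bounding-box area in pixels
};

// Keep only boxes that are roughly square and of plausible size.
std::vector<Box> filterBlobs(const std::vector<Box>& boxes,
                             const BlobLimits& lim = BlobLimits{}) {
    std::vector<Box> kept;
    for (const Box& b : boxes) {
        if (b.width <= 0 || b.height <= 0) continue;  // skip degenerate boxes
        const double aspect = static_cast<double>(b.width) / b.height;
        const int area = b.width * b.height;
        if (aspect >= lim.minAspect && aspect <= lim.maxAspect &&
            area >= lim.minArea && area <= lim.maxArea) {
            kept.push_back(b);
        }
    }
    return kept;
}
```

A round blob has an aspect ratio near 1, so a band around 1 rejects long streaks, while the area limits reject specks of noise and large merged regions.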

And here are the results on all three.

| 2 | No.2 Revision |

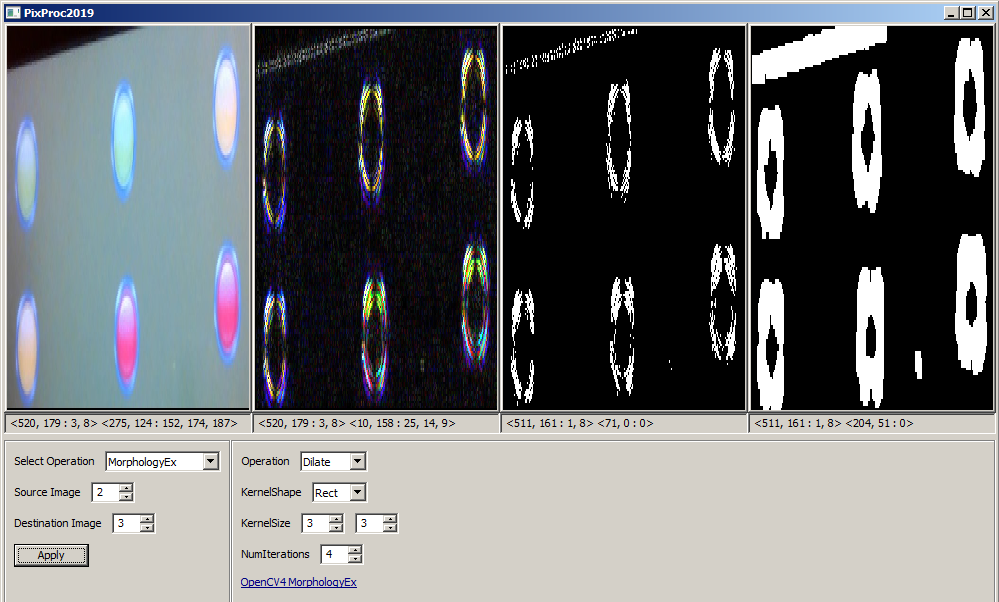

Here are the steps that seem to work well from left to right in the image below.

original image

apply Sobel filter with 5x5 kernel and first order derivatives in both x and y

convert to mono and threshold about 80

dilation with Rect 3x3 kernel for 4 iterations

From, here you can filter contours by aspect ratio and size so only the powdered donuts are left.

And here are the results on all three.

Here is my simple white balance trick. After finding the locations of the lights, pick an area between two lights that should be background. Use OpenCV `mean` to get the average RGB values in this background area. A "white" background should have r == g == b, but some of these images are blue-green. So compute a per-channel offset (delta) that makes the three channel averages equal, and apply this delta to the whole image.

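```cpp
// Average color of the background region selected by maskImg.
Scalar colorAvg = mean(srcImg, maskImg);
// Gray level that all three channel averages should share.
double channelAvg = (colorAvg[0] + colorAvg[1] + colorAvg[2]) / 3.0;
// Per-channel offset that moves each channel average to channelAvg.
Scalar delta(channelAvg - colorAvg[0], channelAvg - colorAvg[1], channelAvg - colorAvg[2]);
// Apply the offset to the whole image.
balanceImg = srcImg + delta;
```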


Then, using the found locations of the lights, set up a mask that is a semicircle covering the bottom half of each light (first image below). This avoids the glare on the top half of the light. Use OpenCV `mean` again to get the average RGB values in that masked area for each light, then apply this logic to the mean: if `g > r && g > b`, the light is green; else if `r > 1.3 * max(b, g)`, it is red; otherwise it is yellow.
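As a rough sketch, the mask test and the color rule could look like this in plain C++. The names `MeanRgb`, `LightColor`, `inLowerHalfDisk`, and `classifyLight` are made up for illustration, and the sketch assumes image coordinates where y grows downward. Note that OpenCV's `mean` on an image loaded with `imread` returns the channels in B, G, R order, so the values need to be mapped accordingly.

```cpp
#include <algorithm>

// Mean color of the masked lower half of one light, as plain numbers.
// With OpenCV, fill this from cv::mean (Scalar order is B, G, R for imread images).
struct MeanRgb {
    double r, g, b;
};

enum class LightColor { Green, Red, Yellow };

// True if pixel (px, py) lies in the bottom half of the disk centered at
// (cx, cy). Assumes image coordinates, where y grows downward.
bool inLowerHalfDisk(int px, int py, int cx, int cy, int radius) {
    const int dx = px - cx;
    const int dy = py - cy;
    return dy >= 0 && dx * dx + dy * dy <= radius * radius;
}

// The decision rule from the text: green first, then red, else yellow.
LightColor classifyLight(const MeanRgb& m) {
    if (m.g > m.r && m.g > m.b) return LightColor::Green;
    if (m.r > 1.3 * std::max(m.b, m.g)) return LightColor::Red;
    return LightColor::Yellow;
}
```

Yellow is the fallback because a yellow light has strong red and green components, so red exceeds green but not by the 1.3 factor.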