| 1 | initial version |

yolov3 has "region proposals", so each row in your output Mats represents a candidate detection. the 1st 4 numbers are [center_x, center_y, width, height] (relative to the image size), the 5th is the objectness score, followed by (N-5) class probabilities.
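To make the row layout concrete, here is a minimal sketch in plain Java (no OpenCV needed) that decodes one row given as a `float[]`. The class `YoloRowDecoder`, its method names, and the sample row are made up for illustration. It assumes the same layout the answer's code relies on: the class scores are read starting at column 5, since column 4 holds the objectness score.

```java
import java.util.Arrays;

/** Hypothetical helper (not part of OpenCV): decodes one YOLOv3 output row. */
final class YoloRowDecoder {

    /** Converts normalized [cx, cy, w, h] to a pixel box {left, top, width, height}. */
    static int[] toPixelBox(float[] row, int imageWidth, int imageHeight) {
        int centerX = (int) (row[0] * imageWidth);
        int centerY = (int) (row[1] * imageHeight);
        int width = (int) (row[2] * imageWidth);
        int height = (int) (row[3] * imageHeight);
        return new int[] { centerX - width / 2, centerY - height / 2, width, height };
    }

    /** Returns the 0-based class id with the highest score; scores start at column 5. */
    static int bestClass(float[] row) {
        int best = 0;
        for (int c = 1; c < row.length - 5; c++) {
            if (row[5 + c] > row[5 + best]) {
                best = c;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Made-up row for a 3-class model: box (4), objectness (1), class scores (3).
        float[] row = { 0.5f, 0.5f, 0.25f, 0.5f, 0.9f, 0.1f, 0.8f, 0.05f };
        int[] box = toPixelBox(row, 640, 480);
        int cls = bestClass(row);
        System.out.println(Arrays.toString(box) + " class=" + cls + " score=" + row[5 + cls]);
    }
}
```

With a real network the row would come from `level.row(j)` instead of a hand-written array; the arithmetic stays the same.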

i only have yolov3-tiny here, so the output varies a little (it has only 2 output layers), but it should work the same way for the "larger" one:

    import org.opencv.core.*;
    import org.opencv.dnn.*;
    import org.opencv.utils.*;
    import org.opencv.imgcodecs.Imgcodecs;
    import org.opencv.imgproc.Imgproc;

    import java.util.ArrayList;
    import java.util.List;

    public class Yolov3 {

        // collect the names of the unconnected (output) layers; layer ids are 1-based
        private static List<String> getOutputNames(Net net) {
            List<String> names = new ArrayList<>();
            List<Integer> outLayers = net.getUnconnectedOutLayers().toList();
            List<String> layersNames = net.getLayerNames();
            outLayers.forEach((item) -> names.add(layersNames.get(item - 1)));
            return names;
        }

        public static void main(String[] args) {
            System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

            String modelWeights = "c:/data/mdl/yolo/yolov3-tiny.weights";
            String modelConfiguration = "c:/data/mdl/yolo/yolov3-tiny.cfg";
            Net net = Dnn.readNetFromDarknet(modelConfiguration, modelWeights);

            Mat image = Imgcodecs.imread("dog.jpg");
            Size sz = new Size(416, 416);
            // scale pixels by ~1/255, no mean subtraction, swap R and B, no crop
            Mat blob = Dnn.blobFromImage(image, 0.00392, sz, new Scalar(0), true, false);
            net.setInput(blob);

            // run the network and fetch one Mat per output layer
            List<Mat> result = new ArrayList<>();
            List<String> outBlobNames = getOutputNames(net);
            net.forward(result, outBlobNames);

            outBlobNames.forEach(System.out::println);
            result.forEach(System.out::println);

            float confThreshold = 0.6f;
            List<Integer> clsIds = new ArrayList<>();
            List<Float> confs = new ArrayList<>();
            List<Rect> rects = new ArrayList<>();
            for (int i = 0; i < result.size(); ++i)
            {
                // each row is a candidate detection, the 1st 4 numbers are
                // [center_x, center_y, width, height], then the objectness score,
                // followed by (N-5) class probabilities
                Mat level = result.get(i);
                for (int j = 0; j < level.rows(); ++j)
                {
                    Mat row = level.row(j);
                    Mat scores = row.colRange(5, level.cols());
                    // the best class score is used as the confidence
                    Core.MinMaxLocResult mm = Core.minMaxLoc(scores);
                    float confidence = (float)mm.maxVal;
                    Point classIdPoint = mm.maxLoc;
                    if (confidence > confThreshold)
                    {
                        // box values are relative, scale them to image pixels
                        int centerX = (int)(row.get(0,0)[0] * image.cols());
                        int centerY = (int)(row.get(0,1)[0] * image.rows());
                        int width   = (int)(row.get(0,2)[0] * image.cols());
                        int height  = (int)(row.get(0,3)[0] * image.rows());
                        int left    = centerX - width  / 2;
                        int top     = centerY - height / 2;

                        clsIds.add((int)classIdPoint.x);
                        confs.add(confidence);
                        rects.add(new Rect(left, top, width, height));
                    }
                }
            }

            // Apply non-maximum suppression procedure.
            float nmsThresh = 0.5f;
            MatOfFloat confidences = new MatOfFloat(Converters.vector_float_to_Mat(confs));
            Rect[] boxesArray = rects.toArray(new Rect[0]);
            MatOfRect boxes = new MatOfRect(boxesArray);
            MatOfInt indices = new MatOfInt();
            Dnn.NMSBoxes(boxes, confidences, confThreshold, nmsThresh, indices);

            // Draw result boxes:
            int [] ind = indices.toArray();
            for (int i = 0; i < ind.length; ++i)
            {
                int idx = ind[i];
                Rect box = boxesArray[idx];
                Imgproc.rectangle(image, box.tl(), box.br(), new Scalar(0,0,255), 2);
                System.out.println(box);
            }
            Imgcodecs.imwrite("out.png", image);
        }
    }

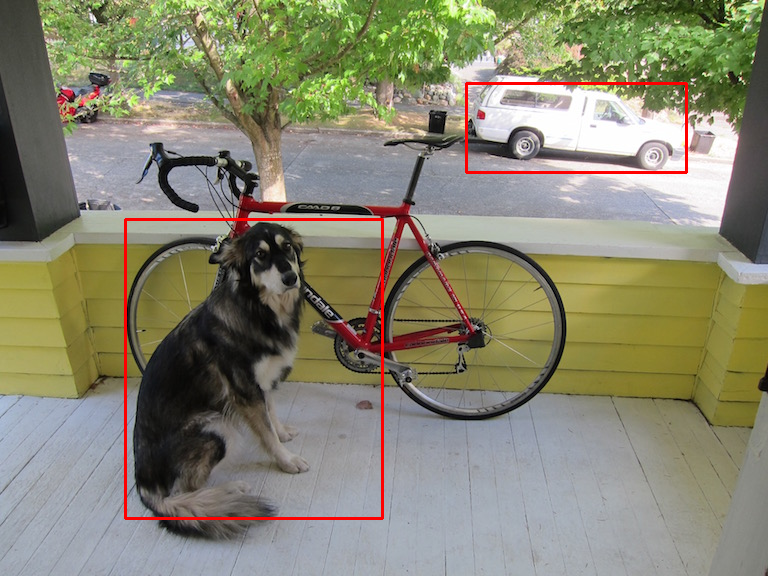

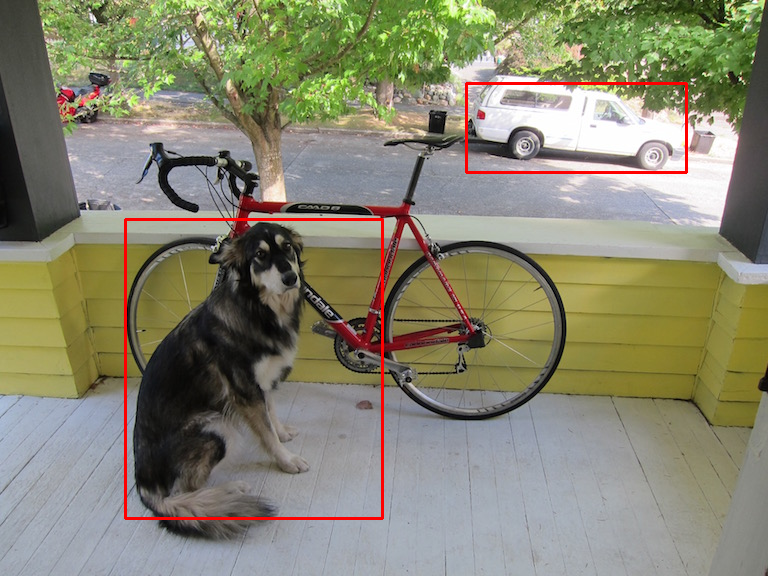

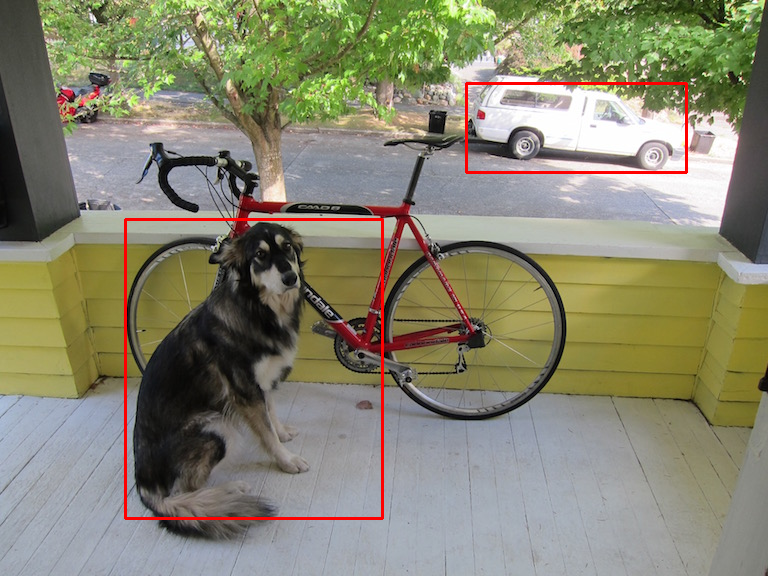

(it missed the bike, but better threshold values and a better model can probably mend that)

first we need to collect all candidates from all outputs (scales); then we can apply NMS to retain only the most promising ones.
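The NMS step in the code is handled by `Dnn.NMSBoxes`. To show what that step does conceptually, here is a minimal sketch of greedy non-maximum suppression in plain Java. It illustrates the general technique and is not OpenCV's implementation; the class `NmsSketch`, its method names, and the sample boxes are made up for illustration. Boxes are `{left, top, width, height}` arrays.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical illustration of greedy NMS; not OpenCV's implementation. */
final class NmsSketch {

    /** Intersection-over-union of two boxes given as {left, top, width, height}. */
    static double iou(int[] a, int[] b) {
        int x1 = Math.max(a[0], b[0]);
        int y1 = Math.max(a[1], b[1]);
        int x2 = Math.min(a[0] + a[2], b[0] + b[2]);
        int y2 = Math.min(a[1] + a[3], b[1] + b[3]);
        double inter = (double) Math.max(0, x2 - x1) * Math.max(0, y2 - y1);
        double union = (double) a[2] * a[3] + (double) b[2] * b[3] - inter;
        return union <= 0 ? 0.0 : inter / union;
    }

    /** Returns the indices of the boxes that survive, best score first. */
    static List<Integer> nms(List<int[]> boxes, List<Float> scores,
                             float scoreThresh, float nmsThresh) {
        // Keep only candidates above the score threshold, sorted by descending score.
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < boxes.size(); i++) {
            if (scores.get(i) > scoreThresh) {
                order.add(i);
            }
        }
        order.sort((i, j) -> Float.compare(scores.get(j), scores.get(i)));

        // Accept a box only if it does not overlap an already accepted box too much.
        List<Integer> kept = new ArrayList<>();
        for (int idx : order) {
            boolean suppressed = false;
            for (int k : kept) {
                if (iou(boxes.get(idx), boxes.get(k)) > nmsThresh) {
                    suppressed = true;
                    break;
                }
            }
            if (!suppressed) {
                kept.add(idx);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Made-up candidates: the first two overlap heavily, the third is separate.
        List<int[]> boxes = Arrays.asList(
                new int[] { 100, 100, 200, 200 },
                new int[] { 110, 110, 200, 200 },
                new int[] { 400, 50, 100, 100 });
        List<Float> scores = Arrays.asList(0.9f, 0.8f, 0.7f);
        System.out.println(nms(boxes, scores, 0.6f, 0.5f));
    }
}
```

This is also why the candidates from all output layers go into one list before suppression: the same object can be detected at more than one scale, and those duplicates can only suppress each other if they are compared together.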