I don't know what has changed in SURF between the version used when the tutorial was written and the current one, but I would not rely on the result image shown in the tutorial, since it dates back to 2011.

You should post your query and train images.

Keypoint matching needs texture information and will perform very badly on a uniform scene. SURF features are not invariant to viewpoint changes. Also, Lowe's ratio test should be used for matching. Despite the theoretical rotation and scale invariance, in my experience you will still observe some degradation of the feature matching in practice.
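As a side note, the ratio test itself can be shown in isolation, without OpenCV. This is only a minimal sketch: `ratioTestIndices` is a hypothetical helper name (not an OpenCV function), the input is assumed to be the best and second-best distances for each query descriptor, and 0.75 is just a commonly used threshold that you may need to tune.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper (not part of OpenCV): each pair holds the distances to
// the best and the second-best candidate for one query descriptor.
// Returns the indices of the queries whose best match passes the ratio test.
std::vector<std::size_t> ratioTestIndices(
    const std::vector<std::pair<float, float>>& bestAndSecond,
    float ratio = 0.75f)
{
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < bestAndSecond.size(); ++i)
    {
        const float best = bestAndSecond[i].first;
        const float second = bestAndSecond[i].second;
        // Written as a product so a zero second-best distance cannot divide by zero.
        if (best < ratio * second)
            kept.push_back(i);
    }
    return kept;
}
```

A match is kept only when its best distance is clearly smaller than the second-best one, which is what rejects ambiguous matches in low-texture or repetitive regions. With `knnMatch` and `k = 2`, the two distances would come from `knn_matches[i][0].distance` and `knn_matches[i][1].distance`.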

With the following code:

    // Assumes `using namespace cv;` and `using namespace cv::xfeatures2d;`,
    // and that img_1 and img_2 are already loaded.

    //-- Step 1: Detect the keypoints using SURF Detector, compute the descriptors
    int minHessian = 400;
    Ptr<SURF> detector = SURF::create();
    detector->setExtended(true);
    detector->setHessianThreshold(minHessian);

    std::vector<KeyPoint> keypoints_1, keypoints_2;
    Mat descriptors_1, descriptors_2;
    detector->detectAndCompute( img_1, Mat(), keypoints_1, descriptors_1 );
    detector->detectAndCompute( img_2, Mat(), keypoints_2, descriptors_2 );

    //-- Step 2: Matching descriptor vectors using FLANN matcher
    FlannBasedMatcher matcher;
    std::vector< std::vector<DMatch> > knn_matches;
    matcher.knnMatch( descriptors_1, descriptors_2, knn_matches, 2 );

    //-- Keep a match only if it passes Lowe's ratio test
    std::vector<DMatch> good_matches;
    for (size_t i = 0; i < knn_matches.size(); i++)
    {
        if (knn_matches[i].size() > 1)
        {
            float ratio_dist = knn_matches[i][0].distance / knn_matches[i][1].distance;
            if (ratio_dist < 0.75f)
            {
                good_matches.push_back(knn_matches[i][0]);
            }
        }
    }

    //-- Draw only "good" matches
    Mat img_matches;
    drawMatches( img_1, keypoints_1, img_2, keypoints_2,
                 good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),
                 std::vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

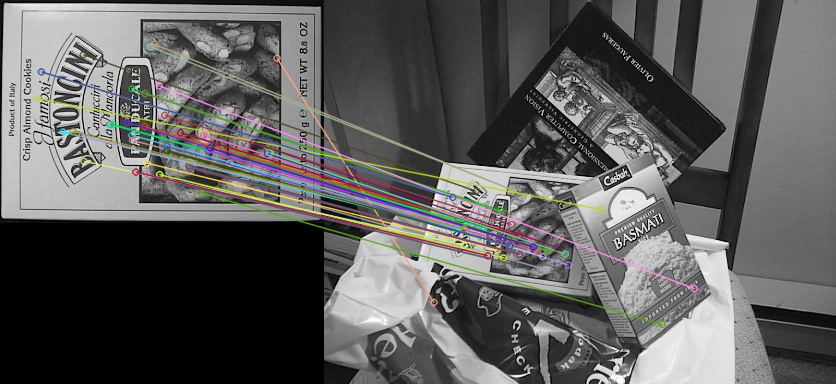

Result image (setExtended(false), 64-element descriptor):

Result image (setExtended(true), 128-element descriptor):