
Yes, it can be done if you know the transformation between the color and the depth frame.

A 3D point expressed in the depth frame can be transformed into the color frame using the homogeneous transformation between the two frames. This transformation can be estimated by calibration; the color and depth sensors must stay rigidly fixed relative to each other, otherwise the calibration must be redone.

Then, you can project the 3D point expressed in the color frame into the image plane using the color intrinsic parameters to get the RGB values.
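The two steps described above (change of frame, then projection) can be sketched as follows. This is only a minimal illustration in plain Python, assuming an ideal pinhole model without distortion; the names `transform_point`, `project_to_color_pixel`, `sample_rgb` and `T_color_depth` are hypothetical helpers made up for this example, not OpenCV functions.

```python
def transform_point(T_color_depth, p_depth):
    """Apply a 4x4 homogeneous transform (nested rows) to a 3D point.

    T_color_depth is assumed to map depth-frame coordinates to
    color-frame coordinates (hypothetical naming for this sketch).
    """
    x, y, z = p_depth
    # Only the first three rows are needed; the last row is [0, 0, 0, 1].
    return tuple(row[0] * x + row[1] * y + row[2] * z + row[3]
                 for row in T_color_depth[:3])


def project_to_color_pixel(p_color, fx, fy, cx, cy):
    """Pinhole projection of a color-frame 3D point, distortion ignored."""
    X, Y, Z = p_color
    if Z <= 0:
        return None  # point is behind (or on) the camera plane
    return (fx * X / Z + cx, fy * Y / Z + cy)


def sample_rgb(color_image, uv):
    """Nearest-pixel lookup; returns None if the pixel is outside the image."""
    if uv is None:
        return None
    u, v = int(round(uv[0])), int(round(uv[1]))
    height, width = len(color_image), len(color_image[0])
    if 0 <= u < width and 0 <= v < height:
        return color_image[v][u]  # row index is v, column index is u
    return None
```

For every point of the point cloud you would call `transform_point`, then `project_to_color_pixel`, then `sample_rgb` on the color image. If lens distortion matters, `cv::projectPoints` (`cv2.projectPoints` in Python) accepts distortion coefficients and is probably the better choice.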

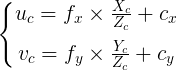

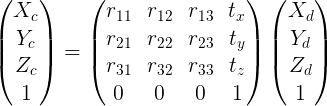

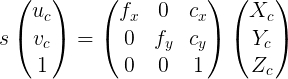

The relevant equations are given below.

The color pixel corresponding to the 3D point from the pointcloud can then be obtained (here without taking into account the distortion):
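In case the equation images do not display, here is a sketch of the standard pinhole relations I would expect here. The notation is my own assumption: ${}^{c}\mathbf{T}_{d}$ is the homogeneous transformation from the depth frame to the color frame, $(X_d, Y_d, Z_d)$ and $(X_c, Y_c, Z_c)$ are the point coordinates in the depth and color frames, and $f_x, f_y, c_x, c_y$ are the color camera intrinsics.

```latex
\begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix}
=
{}^{c}\mathbf{T}_{d}
\begin{bmatrix} X_d \\ Y_d \\ Z_d \\ 1 \end{bmatrix},
\qquad
u = f_x \frac{X_c}{Z_c} + c_x,
\qquad
v = f_y \frac{Y_c}{Z_c} + c_y
```

The RGB value is then read from the color image at pixel $(u, v)$, for example by rounding to the nearest pixel, provided that $Z_c > 0$ and the pixel lies inside the image.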